Finally Solving TLGS Socket Descriptor Leak

After months of on and off work, I finally figured out what's causing TLGS' crawler to experience socket leak. I've a gemlog 4 months ago[1] detailing how I solved it. Well, kinda. I found a misuse of shared_ptr and timers causing references to TCP connections not released properly. Solving that drastically reduces how fast open socket count grows - that still makes no sense, but good enough for the time. Sockets should not be growing as I limit the crawler to 16 or 24 concurrent connections at a time in production.

And this growing of socket eventually causes the crawler to hang. Espically when it encounters pages like gemini://some.search.engine/known-hosts. Due to needing to check if URLs under it is crawable, the crawler needs to fetch robots.txt from literally every corner of Gemini. Making a hige amount of connections to all capsules (if no the robots.txt cache is too old). I'm forced to manually run the crawler and kill it if it stucks. Sometimes I forgot and the index doesn't get updated.

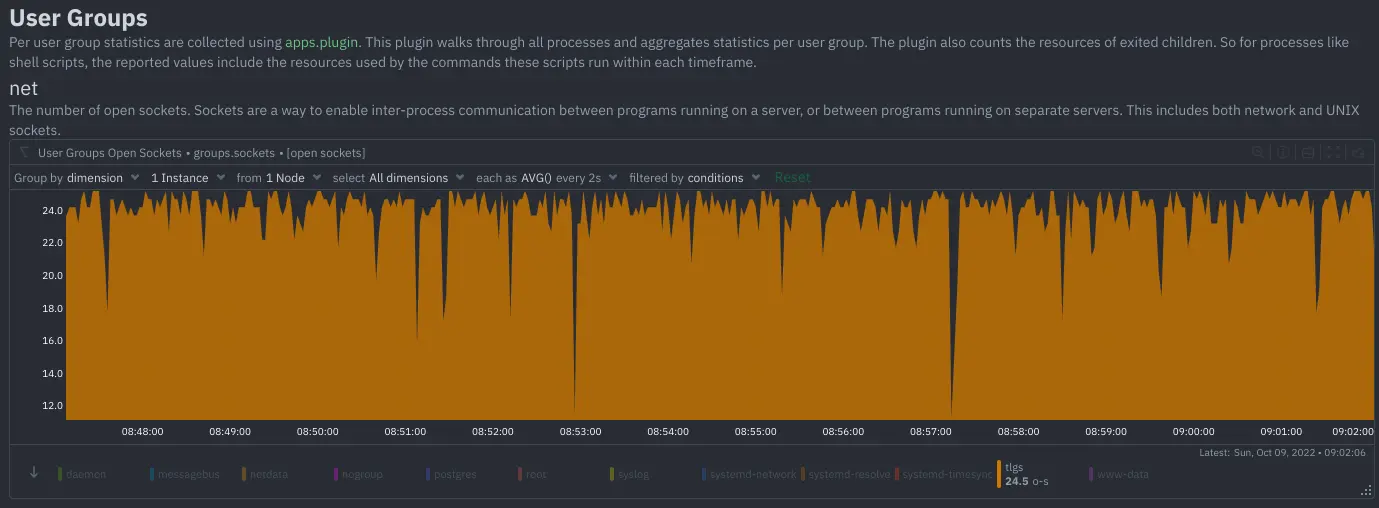

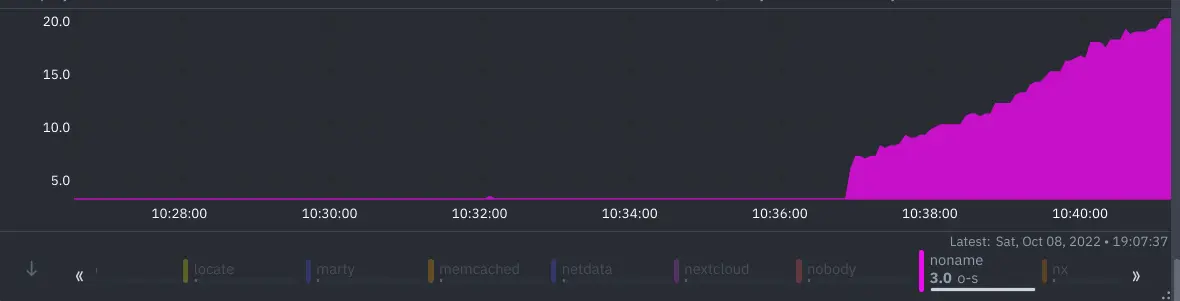

On a graph it looks like this (test run, not production):

And the crawler tech stack looks more or less like this.

Gemini Space

▲

│

┌─────────┘

│

┌───────┴──────────┐ ┌───────────┐ ┌─────────────┐

│Dremini (Gemini) │ │ │ │ │

│ ┌──────────┘ │ │◄─────────►│ PostgreSQL │

│ │ ┌──────────┘ │ │ │

│ │ │ Drogon (DB/HTTP) │ └─────────────┘

└───────┘ └──────────────────────┘

┌────────────────────────────────┐

│ trantor (TCP, event, DNS) │

└────────────────────────────────┘

┌────────────────────────────────┐

│ Linux/Any UNIX │

└────────────────────────────────┘

Initial Investigation

First some observations. Netdata[2] is installed on my VPS so I can see the resource and database usage. I start fining that somehow, when there's more database transactions, the socket count growth slows. I'm speculating something related to packet response time or broken connection. It's possible that some libraru is acting incorrectly when there's no data from TCP. Nothing concrete to go on though.

So I let the crawler run and accumulate sockets. Then lsof and netstat to try and figure out which host is causing the trouble. Something weird happens. lsof outputs a massive 800 sockets opened, which both Netdata and ls /proc/<pid>/fd/ | grep socket | wc -l agrees. But netstat -tulnap | grep =pid> does not. That only shows ~20 sockets. Even adding in all sockets in the CLOSE_WAIT, FIN_WAIT category across the system, it's no where close to 200 let alone 800.

> ls /proc/248298/fd/ -l | grep socket | wc -l

823

> netstat -tulnap | grep 248298 | wc -l

25

This makes no sense.

Digging into Trantor

Trantor[3] is the networking library and event system that my stack uses. The trantor::Socket class is responsible for socket RAII, I added logging to the class ctor/dtor and... scraping throgh the logs.. it is! Every Socket is closed. How come there's a leak then? Maybe something else that uses sockets is leaking? I tried switching the c-ares[4] based resolver to using gethostbyname. Not that either. Socket count is still grows.

That left me confused, again. DB connections in Drogon is only created on startup. It can't be creating on the fly. Nor there's other use of sockets that I can think of.

Switched back to using c-ares. Maybe something else is going on with the TcpConnectionImpl class? Some code dup() the socket? Or close() failed? I ran the crawler under strace and uses some awk script to clean up the output. Nope. Nothing. I've asked the other maintainers of Trantor and Drogon. No one knows what's possibly causing trouble.

System tuning

This looks like some system level issue to me now. Some searcing on the web later. People are suggesting this could be erphemeral port exhaustion. A solution is to increase the range of erphemeral ports and enable port recycling. Tried the following commands, doesn't help at all.

sysctl -w net.ipv4.ip_local_port_range="1024 65535"

sysctl -w net.ipv4.tcp_tw_reuse=1

Simplifying to solving the problem

I'm back to square one. The only thing I know is there's less issue when the crawler runs fast. Maybe there's some compiler bug in C++'s coroutine implementation? Leading to some sockets not closed when fetching robots.txt? I stopped the crawler from fetching robots.txt at all. Socket count still grows. Does the act of fetching robots.txt causes socket count grow too? I dumped all hosts TLGS knows and got some code to fetch all robots.txt. Yes, socket count grows. And is faster then when atually crawling. Real weird..

As a temporary solution to the hanging on known-hosts page. I dumped all hosts into a text file and let the crawler chew on them them first. Which I can then kill later to reset the scoket count. This leads to a intresting observation. Crawling all homepage of known hosts leads to much faster socket count growth. Same as just fetching robots.txt. Armed with this information, I copied the entirty of Dremini's Gemini client into Drogon. And enable all debug logging. I'm now able to see the exact sequence of operations that happened while fetching pages. It looks like this:

20221008 03:16:02.327352 UTC 4049482 INFO ======================== gemini://signals.pollux.casa/ ======================== - main.cc:507

20221008 03:16:02.328971 UTC 4049482 TRACE [ares_sock_createcallback_] sockfd=7 type=UDP - AresResolver.cc:244

20221008 03:16:02.328999 UTC 4049482 TRACE [ares_sock_statecallback_] sockfd=7 read=1 write=0 - AresResolver.cc:258

20221008 03:16:02.329093 UTC 4049482 TRACE [run] Start to run... - HttpAppFrameworkImpl.cc:454

20221008 03:16:02.330903 UTC 4049482 TRACE [createListeners] thread num=1 - ListenerManager.cc:98

20221008 03:16:02.331586 UTC 4049482 TRACE [onQueryResult] onQueryResult 0 - AresResolver.cc:173

20221008 03:16:02.331619 UTC 4049482 TRACE [TcpClient] TcpClient::TcpClient[GeminiClient] - connector - TcpClient.cc:75

20221008 03:16:02.334069 UTC 4049482 TRACE [connect] TcpClient::connect[GeminiClient] - connecting to 86.221.250.139:1965 - TcpClient.cc:110

20221008 03:16:02.334118 UTC 4049482 TRACE [createNonblockingSocketOrDie] sock=11 - Socket.h:46

20221008 03:16:02.334176 UTC 4049482 TRACE [connect] connecting - Connector.cc:88

20221008 03:16:02.334201 UTC 4049482 TRACE [connecting] connecting:11 - Connector.cc:146

20221008 03:16:02.334250 UTC 4049482 TRACE [createHandlerInstance] create handler class object: 0xAAAAECB02B80 - HttpBinder.h:138

20221008 03:16:02.334262 UTC 4049482 TRACE [createHandlerInstance] create handler class object: 0xAAAAECB02B80 - HttpBinder.h:138

20221008 03:16:02.551427 UTC 4049482 TRACE [TcpConnectionImpl] MTLS: 0 - TcpConnectionImpl.cc:1940

...

Scrolling through the logs. I find 2 things. 1st is that I'm creating DNS resolvers for each request. Which is not efficient. I hacked some quick code in (since this test program is single threaded anyway) to reuse the same resolver. Socket count grows as expected, but now the log is shorter and cleaner. Next, I notice the file descriptor ID that createNonblockingSocketOrDie returns is getting hiher and higher down the log. That is the socket leak. I thought to myself.

Looking at the requests made one by one - focusing on the socked fd. I found exactly where a socket is not closed.

...

20221008 06:15:48.553071 UTC 4085256 INFO ======================== gemini://sam.bossley.us/ ======================== - main.cc:502

20221008 06:15:48.557350 UTC 4085256 TRACE [onQueryResult] onQueryResult 0 - AresResolver.cc:172

20221008 06:15:48.557399 UTC 4085256 TRACE [TcpClient] TcpClient::TcpClient[GeminiClient] - connector - TcpClient.cc:75

20221008 06:15:48.559816 UTC 4085256 TRACE [connect] TcpClient::connect[GeminiClient] - connecting to 45.63.57.207:1965 - TcpClient.cc:110

20221008 06:15:48.559880 UTC 4085256 TRACE [createNonblockingSocketOrDie] sock=14 - Socket.h:46

20221008 06:15:48.559940 UTC 4085256 TRACE [connect] connecting - Connector.cc:91

20221008 06:15:48.559974 UTC 4085256 TRACE [connecting] connecting:14 - Connector.cc:151

20221008 06:15:51.559857 UTC 4085256 TRACE [~TcpClient] TcpClient::~TcpClient[GeminiClient] - connector - TcpClient.cc:80

20221008 06:15:51.560437 UTC 4085256 DEBUG [operator()] Got exception from gemini://sam.bossley.us/: Timeout - main.cc:519

20221008 06:15:51.560473 UTC 4085256 INFO ======================== gemini://tlgs.one/ ======================== - main.cc:502

20221008 06:15:51.561144 UTC 4085256 TRACE [onQueryResult] onQueryResult 0 - AresResolver.cc:172

20221008 06:15:51.561231 UTC 4085256 TRACE [TcpClient] TcpClient::TcpClient[GeminiClient] - connector - TcpClient.cc:75

20221008 06:15:51.561512 UTC 4085256 TRACE [connect] TcpClient::connect[GeminiClient] - connecting to 23.88.52.182:1965 - TcpClient.cc:110

20221008 06:15:51.561568 UTC 4085256 TRACE [createNonblockingSocketOrDie] sock=15 - Socket.h:46

...

20221008 06:15:52.582482 UTC 4085256 INFO ======================== end gemini://tlgs.one/ ======================== - main.cc:504

20221008 06:15:52.582504 UTC 4085256 DEBUG [operator()] Got response from gemini://tlgs.one/ - main.cc:507

20221008 06:15:52.582524 UTC 4085256 INFO ======================== gemini://gemi.dev/ ======================== - main.cc:502

20221008 06:15:52.582740 UTC 4085256 TRACE [handleClose] to call close callback - TcpConnectionImpl.cc:984

20221008 06:15:52.582887 UTC 4085256 TRACE [~Socket] Socket deconstructed:14 - Socket.cc:245

20221008 06:15:52.583173 UTC 4085256 TRACE [onQueryResult] onQueryResult 0 - AresResolver.cc:172

20221008 06:15:52.583235 UTC 4085256 TRACE [TcpClient] TcpClient::TcpClient[GeminiClient] - connector - TcpClient.cc:75

...

Normally Dremini will attempt to close the current socket on the next chance avaliable after handling the response. In my simple test code is right while the DNS client resolving and nothing else to do. But in the log. I don't see socket closing after request to sam.bossley.us timed out. The socket ID increased and never dropped back to 14 again. That's the smoking gun. But why? trantor::Socket is a RAII class. There should be no chance of a socket leak. Seaching for createNonblockingSocketOrDie in the codebase. This turns up.

void Connector::connect()

{

int sockfd = Socket::createNonblockingSocketOrDie(serverAddr_.family());

errno = 0;

int ret = Socket::connect(sockfd, serverAddr_);

...

Everything makes sense now. Trantor uses a reactor model based on epoll on Linux. It doesn't create the abstraction before it makes sense to add to epoll. Thus, if the TCP client gets destroyed before the connection is established. The socket lingers and never gets closed. This is before trantor::Socket is constructed. No wonder why dumping constructor and destructor logs didn't help. I ended up manually tracking the state of the socket and close it if Connector destructs before the connection is established. Here's the pull request[5]:

Conclusion

This bug is stupid. But a lesson well learned. Every bit of information I collected is correct. It is a socket. But netstat doesn't show it because nothing is connected on the other end. Nor it gets closed because no IO channel is attached. It also makes snese why faster pages is non-issue. Because it never triggers timeout. Trust the data.

Now socket count stays very flat. Job well done 😊