Setting up vits-models to generate Waifu voices on demand

This post is quite different from my usual content. It's "easy" compared to dealing with TLS and whatnot, but it took me some time to figure out some stupid encoding issues, so I decided to write it down for future reference.

I got my hands on LLaMA, and I'm trying to build an automatic translation bot and throw it into VRChat to assist with language exchange (credit to my friend Pichu for the idea). Thinking about it, why not make the voice as cute as possible? It fits the weeb culture there. Standard TTS tools, even Festival, can only produce plain, boring speech. After a few seconds of googling, I found a plethora of deep-learning-based voice synthesis models on HuggingFace, and vits-models has a huge collection of voices.

So I need a few things:

- Host the synthesis model on my server

- Build a client to send some text to the server and get back the audio

Getting the demo application running is easy: clone, install dependencies, run. By default, it runs on port 7860. Important: you might need to edit `pretrained_models/info.json` to change which voices are available. By default it loads everything, which takes well above 8 GB of RAM. It also runs on the CPU by default; you can change that by adding `--device cuda` to the `app.py` command.

```bash
git clone https://huggingface.co/spaces/sayashi/vits-models
cd vits-models
pip install -r requirements.txt
python app.py
```

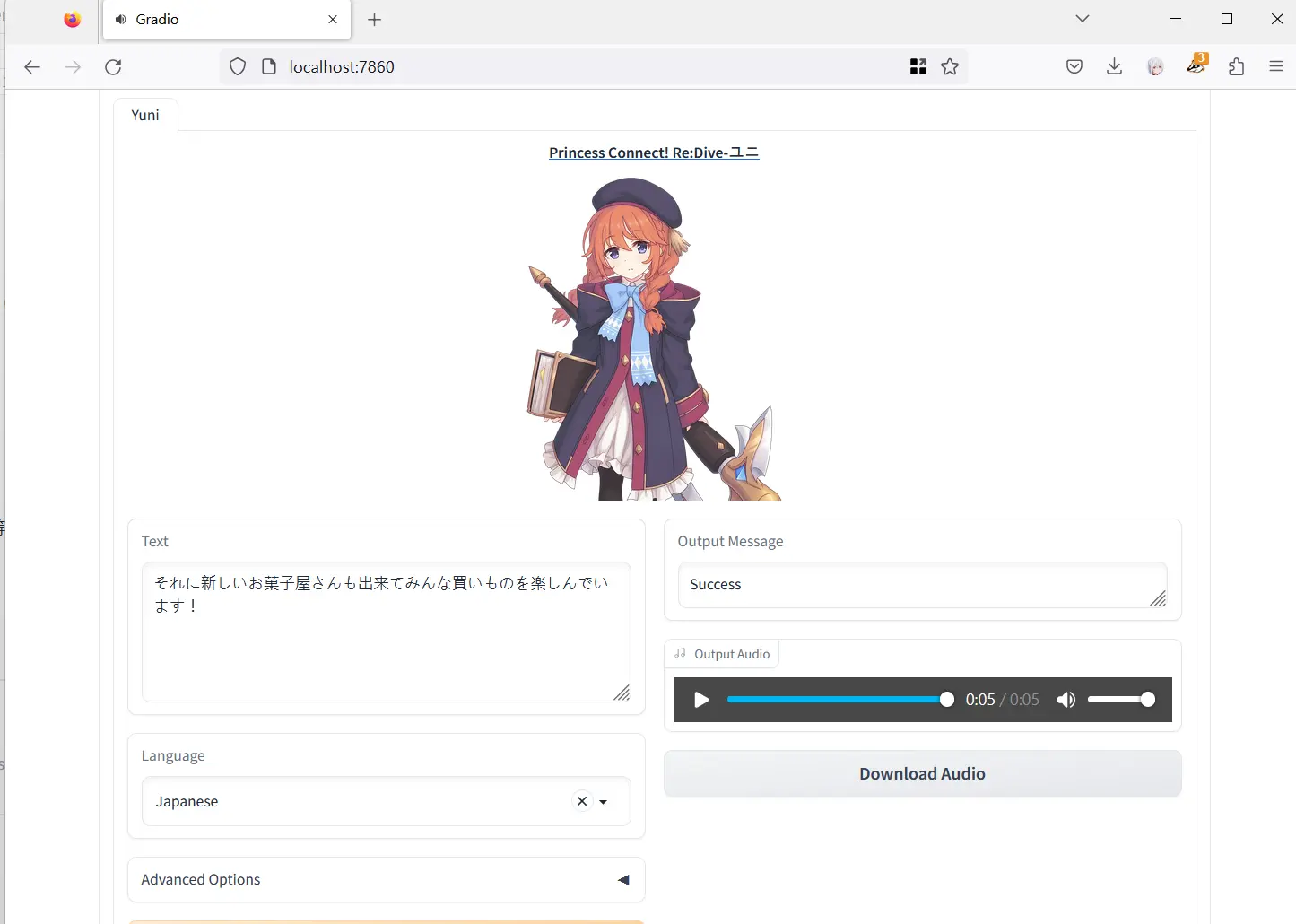

Now you can go to http://localhost:7860 and play with it.

Synthesis result from the default demo text

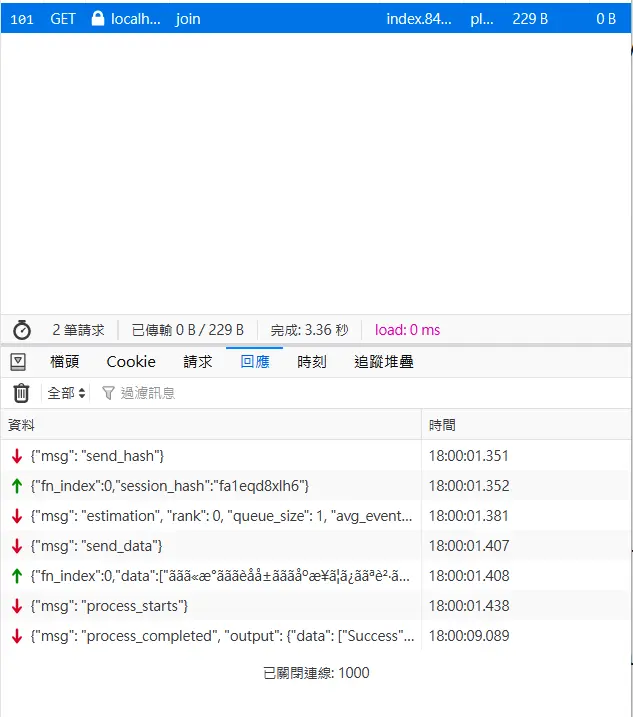

Wow, that's damn impressive. The next step is to build a client. However, the API is undocumented. Staring at the dev tools made me think it's not too complicated. The only thing the webpage does after you click the "Generate" button is open a WebSocket connection to ws://localhost:7860/queue/join and send some JSON messages. I didn't see any session ID in the request headers, so I guessed `session_hash` could be any random string. The final message contains data:audio/wav;base64,UklGRiRIAwBXQVZFZm10IBAAAAABAAE..., which is obviously the result as a base64-encoded WAV file.
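Getting the audio out of that final message boils down to splitting the data URI at the first comma and base64-decoding the rest. Below is a dependency-free sketch of that step; `decode_data_uri` and `b64_value` are names I made up (the Drogon client further down uses Drogon's own base64 helper instead). As a sanity check, the prefix seen in the dev tools, `UklGRiRIAwBXQVZF`, decodes to the bytes `RIFF`, a 4-byte little-endian size field, and `WAVE`, i.e. the start of a standard WAV header.

```cpp
#include <cstdint>
#include <stdexcept>
#include <string_view>
#include <vector>

// Map one base64 character to its 6-bit value, or -1 if it is not base64.
inline int b64_value(char c) {
    if (c >= 'A' && c <= 'Z') return c - 'A';
    if (c >= 'a' && c <= 'z') return c - 'a' + 26;
    if (c >= '0' && c <= '9') return c - '0' + 52;
    if (c == '+') return 62;
    if (c == '/') return 63;
    return -1;
}

// Decode the payload of a "data:<mime>;base64,<payload>" URI into raw bytes.
// Decoding stops at the first '=' (padding); other invalid characters throw.
inline std::vector<std::uint8_t> decode_data_uri(std::string_view uri) {
    const auto comma = uri.find(',');
    if (uri.substr(0, 5) != "data:" || comma == std::string_view::npos)
        throw std::invalid_argument("not a data URI");

    std::vector<std::uint8_t> out;
    std::uint32_t buffer = 0;  // pending bits, right-aligned
    int bits = 0;              // how many pending bits are valid
    for (char c : uri.substr(comma + 1)) {
        if (c == '=') break;
        const int v = b64_value(c);
        if (v < 0) throw std::invalid_argument("invalid base64 character");
        buffer = (buffer << 6) | static_cast<std::uint32_t>(v);
        bits += 6;
        if (bits >= 8) {
            // emit the top 8 pending bits as one byte, keep the remainder
            bits -= 8;
            out.push_back(static_cast<std::uint8_t>((buffer >> bits) & 0xFFu));
            buffer &= (1u << bits) - 1u;
        }
    }
    return out;
}
```

The bit-buffer approach avoids lookup tables and handles unpadded input too; for the multi-hundred-kilobyte WAV payloads here, speed is a non-issue.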

Those are easy to deal with. But what the heck is ããã«æ°ã.. in the message sent after the server asks for data? Hours went by. I tried different things: Shift-JIS, Unicode code points, comparing against UTF-8 (that was a nice try; it looks very similar), but nothing worked. Eventually I gave up and sent plain UTF-8. It freaking worked. I spent hours trying, and the server just handles plain UTF-8. Welp.
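In hindsight, that garbage looks like the classic case of UTF-8 bytes being displayed as Latin-1, one character per byte (my best guess, not something I confirmed at the time). に is `E3 81 AB` in UTF-8, which shows up as ã, an invisible control character U+0081, and «. 気 is `E6 B0 97`, which shows up as æ, ° and an invisible U+0097. Both patterns appear in the string above. The hypothetical helper below reproduces the effect, which would explain why sending plain UTF-8 was the right answer all along.

```cpp
#include <string>

// Treat every byte of a UTF-8 string as a Latin-1 (ISO-8859-1) character
// and re-encode that as UTF-8. The result is the mojibake you see when
// UTF-8 bytes are rendered as Latin-1.
std::string utf8_bytes_as_latin1(const std::string& utf8) {
    std::string out;
    for (unsigned char b : utf8) {
        if (b < 0x80) {
            out += static_cast<char>(b);  // ASCII bytes are unchanged
        } else {
            // code point U+0080..U+00FF needs a 2-byte UTF-8 sequence
            out += static_cast<char>(0xC0 | (b >> 6));
            out += static_cast<char>(0x80 | (b & 0x3F));
        }
    }
    return out;
}
```

For example, `utf8_bytes_as_latin1("に")` yields the three characters ã, U+0081 and «, which most tools display as just "ã«".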

Just for the sake of documentation, the dance between the client and the server is as follows:

- Server asks for a session hash ("send_hash" event)

- Client sends the `fn_index` and `session_hash`. `fn_index` is the index of the voice in the `pretrained_models/info.json` file. `session_hash` is a random string.

- Server replies with how much work is in its queue, how long you need to wait, etc. This event can be ignored if you don't need it.

- Server sends the "send_data" event

- Client sends the text to be synthesized along with the `session_hash`. Data is in the format `[text, language, noise_scale, noise_scale_w, length_scale, <IDK what this is>]`

- Server replies with the "process_starts" event

- Server sends "process_completed" along with the base64-encoded WAV file
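The two messages the client sends in this exchange can also be built without a JSON library. This is a sketch under a few assumptions: `make_session_hash`, `json_escape`, `make_hash_message`, `SynthesisParams` and `make_data_message` are made-up names, the 11-character lowercase alphanumeric hash is an arbitrary choice (any random string seemed to be accepted), and the default parameter values mirror the ones the Drogon client below sends (`0.6, 0.668, 1, false`). The sixth element of `data` is still a mystery, so it is just passed through.

```cpp
#include <cstddef>
#include <cstdio>
#include <random>
#include <string>

// Random session_hash; length and alphabet are arbitrary choices.
std::string make_session_hash(std::size_t length = 11) {
    static const char alphabet[] = "abcdefghijklmnopqrstuvwxyz0123456789";
    std::mt19937 gen(std::random_device{}());
    std::uniform_int_distribution<std::size_t> pick(0, sizeof(alphabet) - 2);
    std::string hash;
    for (std::size_t i = 0; i < length; ++i) hash += alphabet[pick(gen)];
    return hash;
}

// Escape a string for a JSON literal. Only quotes, backslashes and control
// characters are escaped; non-ASCII bytes pass through as plain UTF-8.
std::string json_escape(const std::string& s) {
    std::string out;
    for (unsigned char c : s) {
        if (c == '"') out += "\\\"";
        else if (c == '\\') out += "\\\\";
        else if (c < 0x20) {
            char buf[8];
            std::snprintf(buf, sizeof buf, "\\u%04x", static_cast<unsigned>(c));
            out += buf;
        } else out += static_cast<char>(c);
    }
    return out;
}

// Reply to "send_hash": which voice (fn_index) and which session.
std::string make_hash_message(const std::string& session_hash, int fn_index) {
    return "{\"fn_index\":" + std::to_string(fn_index) +
           ",\"session_hash\":\"" + json_escape(session_hash) + "\"}";
}

// Parameters in data-array order after text:
// [text, language, noise_scale, noise_scale_w, length_scale, <unknown flag>]
struct SynthesisParams {
    std::string language = "Japanese";
    double noise_scale = 0.6;
    double noise_scale_w = 0.668;
    double length_scale = 1.0;
    bool unknown_flag = false;  // meaning unknown; sent as false
};

// Reply to "send_data": the text to synthesize plus the parameters.
std::string make_data_message(const std::string& session_hash, int fn_index,
                              const std::string& text, const SynthesisParams& p) {
    auto num = [](double v) {
        char buf[32];
        std::snprintf(buf, sizeof buf, "%g", v);  // 0.6 -> "0.6", 1.0 -> "1"
        return std::string(buf);
    };
    return "{\"fn_index\":" + std::to_string(fn_index) +
           ",\"session_hash\":\"" + json_escape(session_hash) + "\"" +
           ",\"data\":[\"" + json_escape(text) + "\",\"" + json_escape(p.language) +
           "\"," + num(p.noise_scale) + "," + num(p.noise_scale_w) + "," +
           num(p.length_scale) + "," + (p.unknown_flag ? "true" : "false") +
           "],\"event_data\":null}";
}
```

Note that `json_escape` deliberately leaves Japanese text as raw UTF-8 bytes rather than `\uXXXX` escapes, matching what worked against the server.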

With that understanding, making a client is easy. I'm using C++ and Drogon, and the full code is available on Gist. First, create a WebSocket client and set up the message handler.

```cpp
#include <drogon/drogon.h>
#include <drogon/WebSocketClient.h>
#include <drogon/utils/Utilities.h>
#include <nlohmann/json.hpp>
#include <fstream>
#include <string>

using namespace drogon;

// (inside main() or a coroutine; see the full code on Gist)
auto client = WebSocketClient::newWebSocketClient("ws://127.0.0.1:7860");

client->setMessageHandler([](std::string &&message,
                             const WebSocketClientPtr &client,
                             const WebSocketMessageType &type)
{
    // only care about actual data
    if(type != WebSocketMessageType::Text)
        return;

    auto json = nlohmann::json::parse(message);
    auto msg = json["msg"];
    static std::string session_hash = "some-random-string";

    if(msg == "send_hash") {
        // send our session to the server
        nlohmann::json reply;
        reply["session_hash"] = session_hash;
        reply["fn_index"] = 0;
        client->getConnection()->send(reply.dump());
    }
    else if(msg == "send_data") {
        // send the text to be synthesized along with other parameters
        std::string text = "天気がいいですね。";
        nlohmann::json reply;
        reply["fn_index"] = 0;
        reply["session_hash"] = session_hash;
        // [text, language, noise_scale, noise_scale_w, length_scale, <unknown>]
        reply["data"] = {text, "Japanese", 0.6, 0.668, 1, false};
        reply["event_data"] = nullptr;
        // dump() writes non-ASCII characters as raw UTF-8 by default,
        // which the server accepts
        client->getConnection()->send(reply.dump());
    }
    else if(msg == "process_completed") {
        // data[1] is "data:audio/wav;base64,...": keep what follows the
        // comma, decode it and save it to disk
        auto uri = json["output"]["data"][1].get<std::string>();
        auto b64 = uri.substr(uri.find(',') + 1);
        auto decoded = utils::base64DecodeToVector(b64);
        std::ofstream file("out.wav", std::ios::binary);
        file.write(reinterpret_cast<const char *>(decoded.data()), decoded.size());
        app().quit();
    }
});
```
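The handler writes whatever it decoded straight to `out.wav`. A quick header check before writing can catch a wrong array index or a truncated payload. The sketch below is hypothetical (`WavInfo`, `read_le` and `parse_wav_header` are my names) and assumes the canonical layout where the `fmt ` chunk directly follows the `RIFF`/`WAVE` header at offset 12; real WAV files may put other chunks first. For what it's worth, the base64 prefix from the dev tools decodes to exactly that layout, with format tag 1 (PCM).

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <optional>

// A few fields from a canonical PCM WAV header.
struct WavInfo {
    std::uint16_t format;           // 1 = PCM
    std::uint16_t channels;
    std::uint32_t sample_rate;
    std::uint16_t bits_per_sample;
};

// Read an n-byte little-endian unsigned integer starting at p.
inline std::uint32_t read_le(const unsigned char* p, int n) {
    std::uint32_t v = 0;
    for (int i = n - 1; i >= 0; --i) v = (v << 8) | p[i];
    return v;
}

// Returns std::nullopt unless the buffer starts with "RIFF" ... "WAVE"
// followed immediately by a "fmt " chunk (the canonical layout only).
inline std::optional<WavInfo> parse_wav_header(const unsigned char* data, std::size_t size) {
    if (size < 36 || std::memcmp(data, "RIFF", 4) != 0 ||
        std::memcmp(data + 8, "WAVE", 4) != 0 || std::memcmp(data + 12, "fmt ", 4) != 0)
        return std::nullopt;
    WavInfo info{};
    info.format = static_cast<std::uint16_t>(read_le(data + 20, 2));
    info.channels = static_cast<std::uint16_t>(read_le(data + 22, 2));
    info.sample_rate = read_le(data + 24, 4);
    info.bits_per_sample = static_cast<std::uint16_t>(read_le(data + 34, 2));
    return info;
}
```

In the `process_completed` branch it could be called as `parse_wav_header(reinterpret_cast<const unsigned char *>(decoded.data()), decoded.size())` before opening the file; taking a raw pointer and size keeps it agnostic to whether the decoded buffer holds `char` or `uint8_t`.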

Then just connect to the server and wait for the result. Since this uses `co_await`, it has to run inside a coroutine.

```cpp
auto req = HttpRequest::newHttpRequest();
req->setPath("/queue/join");
co_await client->connectToServerCoro(req);
```

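Compile, run, and you should get a file called out.wav in the current working directory (the relative path passed to `std::ofstream` is resolved against it, not against the executable's location). Here I made it say "天気がいいですね。" ("Nice weather, isn't it?").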

And here is the AI reading the first two paragraphs of the Japanese Wikipedia article on "Japan":

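日本国、または日本は、東アジアに位置する民主制国家。首都は東京都。

(English: The State of Japan, or Japan, is a democratic country in East Asia. Its capital is Tokyo.)

全長3500キロメートル以上にわたる国土は、主に日本列島および千島列島・南西諸島・伊豆諸島・小笠原諸島などの弧状列島により構成される。大部分が温帯に属するが、北部や島嶼部では亜寒帯や熱帯の地域がある。地形は起伏に富み、火山地・丘陵を含む山地の面積は国土の約75%を占め、人口は沿岸の平野部に集中している。国内には行政区分として47の都道府県があり、日本人と外国人が居住し、日本語を通用する。

(English: The country's territory, stretching over 3,500 kilometers, consists mainly of the Japanese archipelago along with arc-shaped island chains such as the Kuril Islands, the Nansei Islands, the Izu Islands and the Ogasawara Islands. Most of it lies in the temperate zone, though the north and outlying islands include subarctic and tropical areas. The terrain is rugged: mountainous land, including volcanic areas and hills, covers about 75% of the country, and the population is concentrated in the coastal plains. Administratively, the country is divided into 47 prefectures; it is inhabited by Japanese and foreign residents, and Japanese is the common language.)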

Yup. That works. Now I can keep pushing my projects further! Full code is available on Gist.