Inexhaustive List of AI Models that Work on RK3588

Armed with experience converting scikit-learn models to RKNN, I spent the past few days trying to get Waifu2x (anime image upscaling) running and ended up defeated. Either RKNN got stuck submitting commands, or I got the mysterious message "W RKNN: [07:11:36.064] Output(Deconvolution2DFunction_0): size_with_stride larger than model origin size, if need run OutputOperator in NPU, please call rknn_create_memory using size_with_stride." followed by garbage output. Yet that API is not exposed, nor can I find the symbol in the shared library. Heck, it doesn't even work when I try to run SRCNN, something much simpler. Something is really weird. Searching only turns up people running YOLOv8 who face the same issue, without a solution.

I decided to put Waifu2x to the side and see what else works on the RK3588. Turns out it's not that bad after all. I've listed a few interesting models that I've tried below.

Visual side of CLIP

CLIP is a model that generates unified image and text embeddings. Useful for matching a search query to images. I grabbed the ONNX models from jina-ai/clip-as-service. RKNN converted and ran them like a charm!

> wget https://clip-as-service.s3.us-east-2.amazonaws.com/models-436c69702d61732d53657276696365/onnx/RN50/visual.onnx

> python

>>> from rknn.api import RKNN

>>> rknn = RKNN()

>>> rknn.config(target_platform='rk3588')

>>> rknn.load_onnx(model='visual.onnx')

>>> rknn.build(do_quantization=False)

>>> rknn.export_rknn('visual.rknn')

To run the model, I used the following code:

from rknnlite.api import RKNNLite

rknn = RKNNLite()

# Copied to dev board

rknn.load_rknn('visual.rknn')

rknn.init_runtime()

out = rknn.inference(inputs=[<image>])
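Once embeddings are available, matching a search query to images usually comes down to cosine similarity. The snippet below is only a sketch of that idea, not the code used for this post: `rank_images` is a hypothetical helper, the vectors are random stand-ins with an arbitrary dimension of 64, and the query embedding is assumed to come from a matching text encoder that isn't covered here.

```python
import numpy as np

def rank_images(query_emb, image_embs):
    """Return image indices sorted from best to worst match, plus their scores."""
    # Normalize to unit length so the dot product equals cosine similarity
    q = query_emb / np.linalg.norm(query_emb)
    imgs = image_embs / np.linalg.norm(image_embs, axis=1, keepdims=True)
    scores = imgs @ q
    order = np.argsort(-scores)  # highest similarity first
    return order, scores[order]

# Stand-in data: 3 fake "image embeddings" and a query that is a noisy copy of image 1
rng = np.random.default_rng(0)
image_embs = rng.normal(size=(3, 64)).astype(np.float32)
query_emb = image_embs[1] + 0.1 * rng.normal(size=64).astype(np.float32)

order, scores = rank_images(query_emb, image_embs)
print("best match:", order[0], "scores:", scores)
```

In a real setup, each row of `image_embs` would be the flattened output of the visual model for one image.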

After some loose benchmarking, the NPU turns out to be quite good at 0.04353s per image, beating my Threadripper 1900X at 0.0833s per image.

| | RK3588 NPU | RK3588 CPU | Threadripper 1900X |
| --- | --- | --- | --- |
| Time (s/img) | 0.04353 | 0.352 | 0.08331 |

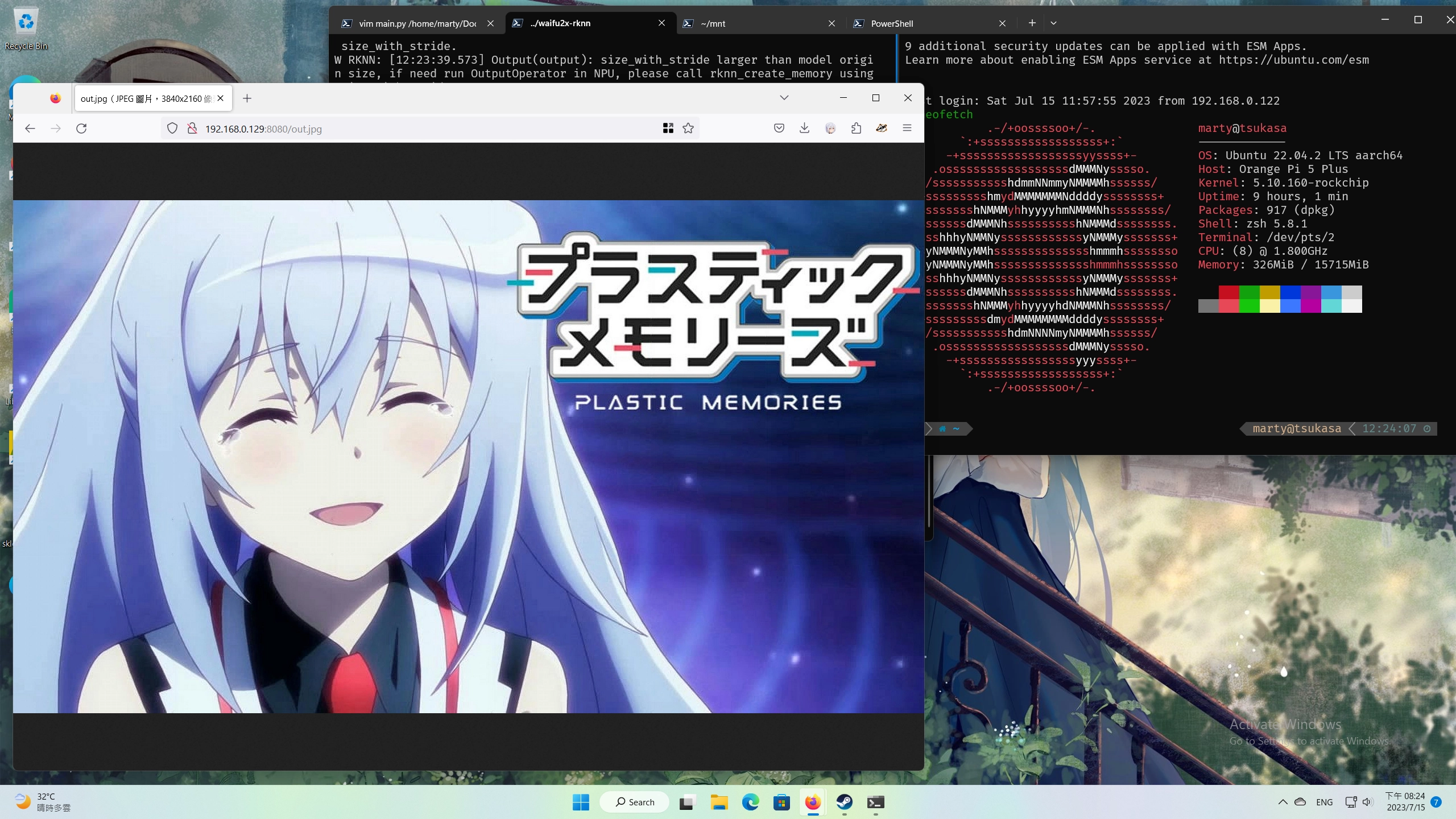
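The exact benchmarking method isn't spelled out here. A loose measurement of seconds per image could look like the sketch below, under my own assumptions: `seconds_per_call` and `dummy_inference` are hypothetical names, and the warm-up and run counts are arbitrary.

```python
import time

def seconds_per_call(fn, warmup=3, runs=20):
    """Average wall-clock seconds per call of fn(), excluding warm-up calls."""
    for _ in range(warmup):
        fn()  # let caches, lazy initialization, etc. settle first
    start = time.perf_counter()
    for _ in range(runs):
        fn()
    return (time.perf_counter() - start) / runs

# Stand-in workload; on the board this would be something like
# lambda: rknn.inference(inputs=[img])
def dummy_inference():
    return sum(i * i for i in range(10_000))

avg = seconds_per_call(dummy_inference)
print(f"{avg:.6f} s/call")
```

Discarding a few warm-up calls matters because the first inference often includes one-off setup cost that would otherwise skew the average.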

Sub-pixel Super-resolution (Wenzhe Shi et al., 2016)

For some unknown reason, although Waifu2x and SRCNN failed spectacularly, this model I found in the ONNX model zoo legit works. It still produces the same memory warning, but magically it works. This got me really excited that there might be a way to get Waifu2x to work after all. However, that hope was short-lived. The original model only upscales the luma channel (and does simple bicubic interpolation for the chroma channels). I tried to modify the model to upscale all 3 channels, but the output became garbage again once I did so.

I have uploaded the working conversion and inference script to GitHub on the off chance that someone might find it useful.
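For readers unfamiliar with the luma-only approach described above, here is a rough sketch of the pipeline. It is not the uploaded script: `upscale_luma_only` and `fake_upscale_y` are hypothetical names, and the 2x nearest-neighbour function merely stands in for the actual model call on the Y plane.

```python
import numpy as np
from PIL import Image

def upscale_luma_only(img, upscale_y):
    """Upscale an RGB image: model on the Y plane, bicubic resize on Cb and Cr."""
    y, cb, cr = img.convert('YCbCr').split()
    # The model only sees the luma plane, as a float array in [0, 1]
    y_in = np.asarray(y, dtype=np.float32) / 255.0
    y_out = upscale_y(y_in)
    y_up = Image.fromarray((np.clip(y_out, 0.0, 1.0) * 255.0).round().astype(np.uint8))
    # Chroma planes are simply resized to the new luma size
    cb_up = cb.resize(y_up.size, Image.BICUBIC)
    cr_up = cr.resize(y_up.size, Image.BICUBIC)
    return Image.merge('YCbCr', (y_up, cb_up, cr_up)).convert('RGB')

# Stand-in for the network: plain 2x nearest-neighbour upscaling of the Y plane
def fake_upscale_y(y):
    return np.repeat(np.repeat(y, 2, axis=0), 2, axis=1)

src = Image.new('RGB', (8, 6), (200, 50, 50))
out = upscale_luma_only(src, fake_upscale_y)
print(out.size, out.mode)
```

Human vision is less sensitive to chroma detail than to luma detail, which is why cheap interpolation on Cb and Cr is usually good enough.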

Fast Neural Style Transfer

I was speechless when this turned out to work. Like, what the actual hell. I can't have Waifu2x but I can have this? When both output 3 channels? dfshliuhgfds;lhsdf!!!!!!!!! Anyway, this works. The conversion process is the same: load ONNX, build, export. And the inference code can be easily modified from the example:

from rknnlite.api import RKNNLite

from PIL import Image

import numpy as np

rknn = RKNNLite()

rknn.load_rknn('./mosaic-8.rknn')

rknn.init_runtime()

img = Image.open('./test.jpg').resize((224, 224))

img = img.convert('RGB')

img = np.array(img)

output = rknn.inference(inputs=[img])[0]

print(output.shape)

output = np.clip(output, 0, 255)[0].astype('uint8').transpose((1, 2, 0))

out_img = Image.fromarray(output)

out_img.save('./out.jpg')

The ONNX files can be found in the following link. I didn't bother to benchmark this one as I don't think anyone is going to use it in a production setting.

ArcFace

Another entry from the ONNX model zoo. ArcFace is a face recognition model. It takes in a face image and outputs a 512-dimensional vector. I checked that the output is nearly the same as the original model's. If you are trying to run this model, remember to use the float16 version. The int8 one won't convert correctly.

# arcface.py

from rknnlite.api import RKNNLite

import onnxruntime as ort

from PIL import Image

import numpy as np

rknn = RKNNLite()

rknn.load_rknn('./arcfaceresnet100-8.rknn')

rknn.init_runtime()

model = ort.InferenceSession('./arcfaceresnet100-8.onnx')

img = Image.open('./test.jpg')

img = img.resize((112, 112))

img = img.convert('RGB')

img = np.array(img).astype(np.float32)/255.0

img = img.transpose((2, 0, 1))[np.newaxis, ...]

output = rknn.inference(inputs=[img])[0][0]

output2 = model.run(None, {'data': img})[0][0]

# print the cosine similarity of RKNN and ONNX's output

print(f"Cosine Sim: {np.dot(output, output2) / (np.linalg.norm(output) * np.linalg.norm(output2))}")

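# print the L2 distance between RKNN and ONNX's output, divided by the vector length (labelled "MSE Distance", though it is not a true mean squared error)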

print(f"MSE Distance: {np.linalg.norm(output - output2) / output.shape[0]}")

❯ python arcface.py

I RKNN: [13:41:00.012] RKNN Runtime Information: librknnrt version: 1.5.0 (e6fe0c678@2023-05-25T08:09:20)

I RKNN: [13:41:00.012] RKNN Driver Information: version: 0.8.5

I RKNN: [13:41:00.014] RKNN Model Information: version: 4, toolkit version: 1.5.0+1fa95b5c(compiler version: 1.5.0 (e6fe0c678@2023-05-25T08:18:57)), target: RKNPU v2, target platform: rk3588, framework name: ONNX, framework layout: NCHW, model inference type: static_shape

Cosine Sim: 0.9856818318367004

MSE Distance: 0.0015965377679094672
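To make the metrics explicit, here is a small sketch that computes cosine similarity, L2 distance and true mean squared error side by side. `compare_embeddings` is a hypothetical helper and the two vectors are toy values, not model outputs. The figure the script prints under "MSE Distance" corresponds to `l2 / len(a)` in this notation.

```python
import numpy as np

def compare_embeddings(a, b):
    """Return (cosine similarity, L2 distance, mean squared error) of a vs b."""
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    cosine = float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))
    l2 = float(np.linalg.norm(a - b))     # Euclidean distance
    mse = float(np.mean((a - b) ** 2))    # average squared per-element error
    return cosine, l2, mse

# Toy vectors that differ in one component only
ref = np.array([1.0, 2.0, 2.0])
approx = np.array([1.0, 2.0, 1.0])

cosine, l2, mse = compare_embeddings(ref, approx)
print(f"cosine={cosine:.4f} l2={l2:.4f} mse={mse:.4f}")
```

Cosine similarity is the most relevant of the three for face recognition, since embeddings are typically compared by angle rather than magnitude.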

RKNN also runs MUCH faster than ONNX Runtime on the RK3588 CPU: 0.034089s vs 0.81188s.

| | RK3588 NPU | RK3588 CPU |
| --- | --- | --- |
| Time (s/img) | 0.034089 | 0.81188 |

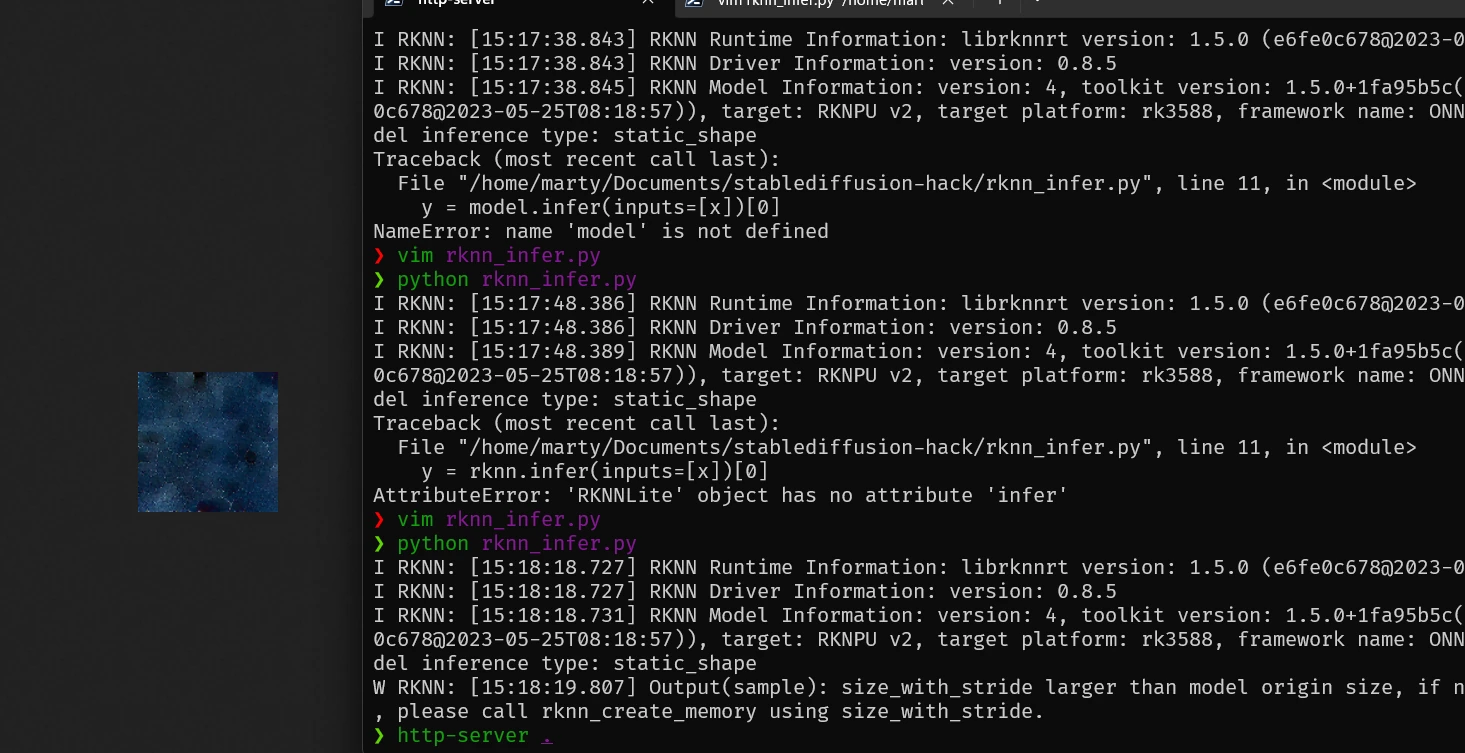

(Not useful on its own) VAE Encoder/Decoder of Stable Diffusion

I'm able to get a part of Stable Diffusion to run on the RK3588, namely the VAE encoder. And I bet the UNet part would run too, if I could figure out the exact input dimensions. However, that's not the problem here. The main issue is that the language processing in SD uses multi-headed attention, and neither the CPU ONNX Runtime nor RKNN supports it. Thus there is no easy way to get the full model running on the RK3588. You might be able to offload that part to PyTorch and get it running that way.

Again, I confirmed the result to be almost the same as the original model's.

I think I might be able to hack together something that runs Stable Diffusion on the RK3588. Judging from how slow Stable Diffusion already is on GPUs, running it on an RK3588 is going to be SLOW. But isn't my motto "if it shouldn't run something, I'll run it anyway"?

Martin Chang
