Benchmarking RK3588 NPU matrix multiplication performance

My goal with my RK3588 dev board is to eventually run a large language model on it. For now, the RWKV model seems the easiest to get running; at least I don't need to somehow get MultiHeadAttention to work on the NPU. However, while experimenting with rwkv.cpp, which runs inference purely on the CPU of the RK3588, I noticed that quantization makes a huge difference in inference speed, so much so that INT4 is about 4x faster than FP16. Not a good sign. It indicates to me that memory bandwidth is the bottleneck: INT4 weights are a quarter the size of FP16 weights, which matches the speedup. If so, the NPU may not make much of a difference.
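The bandwidth argument can be made concrete with a back-of-envelope bound: if every weight has to be read from memory once per generated token, the token rate can't exceed bandwidth divided by model size in bytes. The sketch below is only an illustration. The 3B parameter count and the 10 GB/s bandwidth are made-up placeholder numbers, not measurements from the RK3588, and `max_tokens_per_second` is a hypothetical helper. Real quantized formats also store scales, so INT4 is not exactly 4 bits per weight.

```python
# Back-of-envelope upper bound on token rate for a memory-bound model.
# All numbers here are illustrative assumptions, not RK3588 measurements.

BITS_PER_WEIGHT = {"FP16": 16, "INT8": 8, "INT4": 4}


def max_tokens_per_second(n_params, bits_per_weight, bandwidth_bytes_per_s):
    """Upper bound, assuming every weight is read from memory once per token."""
    model_bytes = n_params * bits_per_weight / 8
    return bandwidth_bytes_per_s / model_bytes


if __name__ == "__main__":
    n_params = 3e9    # hypothetical model size (parameters)
    bandwidth = 10e9  # hypothetical usable memory bandwidth (bytes/s)
    for name, bits in BITS_PER_WEIGHT.items():
        rate = max_tokens_per_second(n_params, bits, bandwidth)
        print(f"{name}: at most {rate:.2f} tokens/s")
```

Whatever the placeholder numbers are, the bound for INT4 comes out 4x the bound for FP16, which is the ratio I saw with rwkv.cpp.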

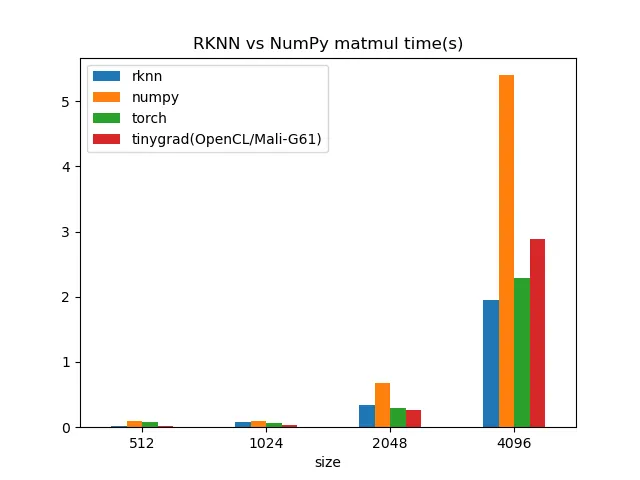

So, benchmarking time! The first question I need to answer is how fast the NPU can do matrix multiplication. According to the spec, the NPU on the RK3588 can do 0.5 TFLOPS at FP16 for matrix multiplication. (Marketing materials say 6 TOPS, but that only applies to INT4 and convolution.) Let's compare that against some baseline numbers from NumPy and PyTorch.
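To have something to compare measurements against, the 0.5 TFLOPS figure can be turned into an ideal runtime. This is a rough sketch that counts one multiply and one add per inner-product term (2·M·N·K operations for an [M, N] by [N, K] product) and ignores memory traffic and launch overhead. The helper names are mine, not part of any RKNN API.

```python
# Ideal matmul time at a given peak throughput. The helpers are hypothetical;
# the 0.5 TFLOPS default is the FP16 spec figure quoted above.

def matmul_flops(m, n, k):
    """Floating point operations for an [m, n] @ [n, k] product."""
    return 2 * m * n * k


def ideal_seconds(m, n, k, peak_flops=0.5e12):
    """Lower bound on runtime if the hardware sustained its peak rate."""
    return matmul_flops(m, n, k) / peak_flops


def achieved_fraction(measured_seconds, m, n, k, peak_flops=0.5e12):
    """Fraction of peak throughput reached by a measured run."""
    return ideal_seconds(m, n, k, peak_flops) / measured_seconds


if __name__ == "__main__":
    for size in (1024, 2048, 4096):
        ms = ideal_seconds(size, size, size) * 1e3
        print(f"N={size}: ideal time {ms:.2f} ms")
```

Dividing the ideal time by a measured time with `achieved_fraction` gives the share of peak throughput a run actually reached.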

First, as of RKNN2 v1.5.0, the only way to get the NPU to do anything is via ONNX; the low-level matrix multiplication API is completely broken. So I wrote a quick script that generates ONNX models that multiply an input by a random constant matrix. Each model is then exported to RKNN.

```python
# part of genmatmul.py
import numpy as np
import onnx
from onnx import helper, version_converter


def make_matmul(M, N, K):
    # C = A @ B, where A is the [M, N] input and B is a constant [N, K] matrix.
    matmul_node = helper.make_node(
        'MatMul',
        inputs=['A', 'B'],
        outputs=['C'],
    )

    # Random weights, baked into the graph as a Constant node.
    b_mat = np.random.randn(N, K).astype(np.float32)
    b_node = helper.make_node(
        'Constant',
        inputs=[],
        outputs=['B'],
        value=helper.make_tensor(
            name='B',
            data_type=onnx.TensorProto.FLOAT,
            dims=b_mat.shape,
            vals=b_mat.flatten(),
        ),
    )

    graph = helper.make_graph(
        nodes=[b_node, matmul_node],
        name='matmul',
        inputs=[
            helper.make_tensor_value_info(
                'A',
                onnx.TensorProto.FLOAT,
                [M, N],
            ),
        ],
        outputs=[
            helper.make_tensor_value_info(
                'C',
                onnx.TensorProto.FLOAT,
                [M, K],
            ),
        ],
    )

    model = helper.make_model(graph, producer_name='onnx-matmul')
    onnx.checker.check_model(model)
    # Pin the opset version to 14 before handing the model to RKNN.
    model = version_converter.convert_version(model, 14)
    return model
```

With the files generated, I can now load the models into RKNN and run them. As for the baselines: according to np.show_config(), my NumPy installation uses LAPACK for BLAS, which is quite slow. The CPU on the RK3588 contains 4 Cortex-A76 and 4 Cortex-A55 cores. I also tried running with TinyGrad, which has OpenCL support. However, it seems TinyGrad is not optimized enough to beat PyTorch (running on the CPU!!).
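For the CPU baselines, a timing harness along these lines is enough. It is a minimal sketch, not the exact script behind the numbers in this post. `time_call` is a hypothetical helper that runs a few warm-up calls and then reports the median wall-clock time, which is less sensitive to a single slow run than the mean.

```python
# Minimal timing harness (a sketch, not the script used for this post).
import statistics
import time


def time_call(fn, repeats=10, warmup=2):
    """Return the median wall-clock time in seconds of calling fn()."""
    # Warm-up runs let caches, lazy initialization, and CPU clocks settle.
    for _ in range(warmup):
        fn()
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


if __name__ == "__main__":
    # Stand-in workload; replace with the matmul you want to measure.
    elapsed = time_call(lambda: sum(i * i for i in range(100_000)))
    print(f"median time: {elapsed * 1e3:.3f} ms")
```

With two float32 NumPy arrays `a` and `b`, the NumPy baseline would be `time_call(lambda: a @ b)`. The other libraries can be wrapped the same way, as long as the call blocks until the result is ready.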

According to the results, RKNN is indeed the fastest of the bunch. However, there's a big caveat: RKNN internally runs at FP16 even though the original ONNX graph is FP32, while none of the other libraries do matrix multiplication at FP16. So this result is more like comparing oranges to tangerines: similar, but not quite the same. Also, according to the graph, the NPU only starts beating PyTorch and TinyGrad somewhere between N = 2048 and 4096. I have to assume this is some overhead in RKNN, which I really hope they can resolve by fixing the low-level API.

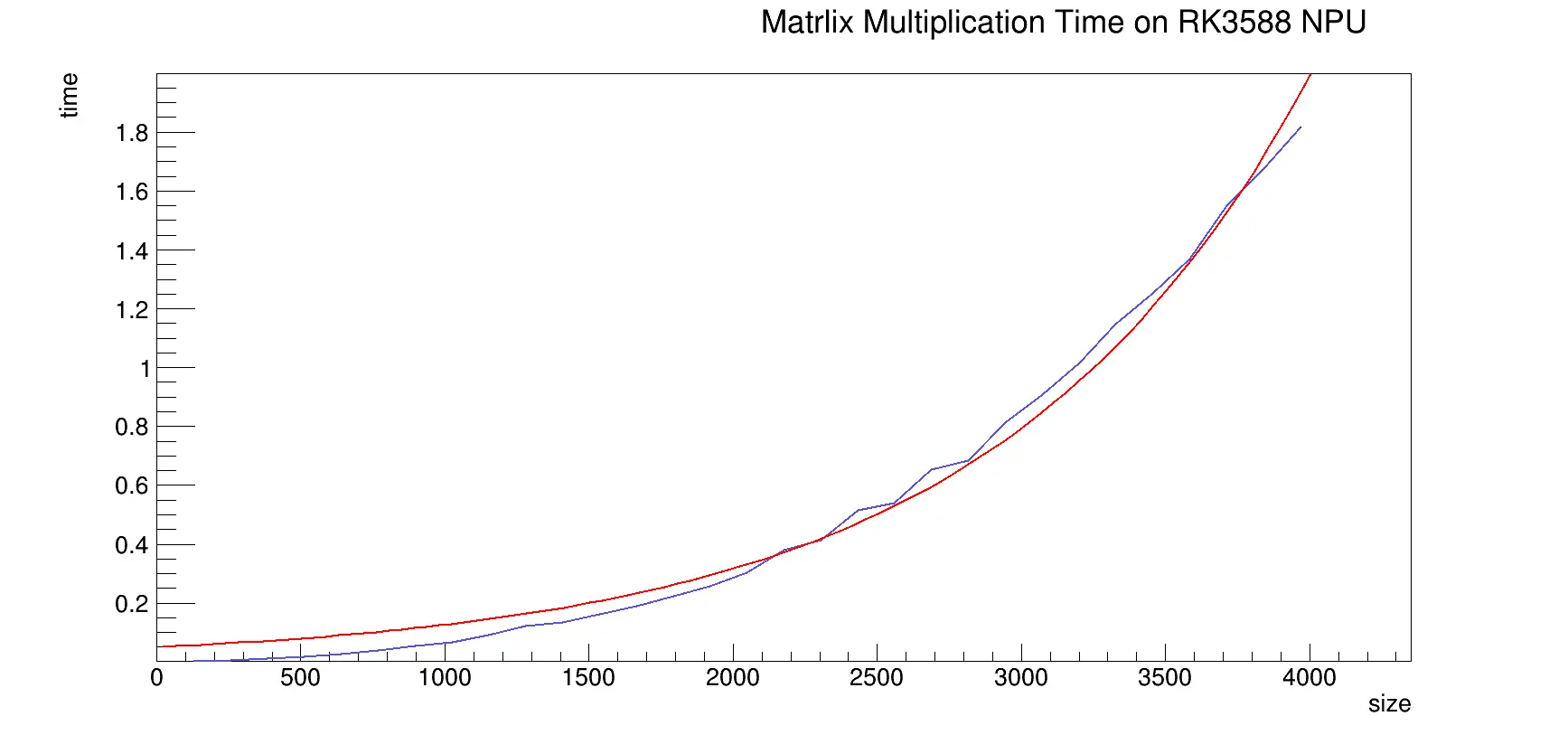

Another question I'm asking myself: how well does the NPU scale with matrix size? To answer this, I generated a bunch of ONNX models with different matrix sizes, starting from 128 and incrementing by 128 up to 4096. The blue line is the measured time it takes to run the matrix multiplication on the NPU. The red line is the blue line fitted with an exponential curve. (Matrix multiplication is ~O(n^3), which is polynomial rather than exponential, so the fit is an empirical description of the curve, not a model of the algorithm.)

And the fit log from ROOT:

```
****************************************
Minimizer is Minuit / Migrad
Chi2                      =    0.0840229
NDf                       =           29
Edm                       =  5.32403e-09
NCalls                    =           68
Constant                  =     -2.98728   +/-   0.0811982
Slope                     =  0.000918918   +/-   2.29814e-05
```
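To read the fit parameters, I'm assuming the log comes from ROOT's predefined `expo` function, which has the form exp(Constant + Slope·x), with x being the matrix size. Under that assumption, the sketch below evaluates the fitted curve (in whatever time unit the plot uses) and includes a plain least-squares fit in log space for anyone who wants to redo it on their own timings. `fit_expo` is a hypothetical helper and an unweighted log-space fit, so it will not reproduce Minuit's chi-square result exactly.

```python
# Evaluate the exponential fit reported above, assuming ROOT's "expo" form
# exp(Constant + Slope * x). Time units follow the plot and are not known here.
import math

CONSTANT = -2.98728   # from the fit log
SLOPE = 0.000918918   # from the fit log


def expo(n, constant=CONSTANT, slope=SLOPE):
    """Fitted time for matrix size n."""
    return math.exp(constant + slope * n)


def fit_expo(sizes, times):
    """Unweighted least-squares fit of log(time) = constant + slope * size."""
    logs = [math.log(t) for t in times]
    count = len(sizes)
    mean_x = sum(sizes) / count
    mean_y = sum(logs) / count
    sxx = sum((x - mean_x) ** 2 for x in sizes)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sizes, logs))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


if __name__ == "__main__":
    step = math.log(2) / SLOPE
    print(f"fitted time doubles every {step:.0f} increase in matrix size")
    print(f"fitted ratio, 4096 vs 2048: {expo(4096) / expo(2048):.2f}")
```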

For me, this graph shows a few properties that I find very intriguing.

- There's no sudden jump in time. The NPU doesn't seem to cache anything. Likely it does chunked fetches from memory, with a local buffer holding the data as long as needed.

- However, there are a few steps in the curve. Maybe they are using different strategies for different matrix sizes.

- For practical sizes, the NPU takes very little time. Good news for me trying to run RWKV.

- Matrix multiplication doesn't scale well. We will run into issues if we try to widen language models.

That's everything I care enough to test. See ya.