Using llama-cpp-python server with LangChain

Very quick and short one. I was trying to build something with LangChain, and I already had a server running LLaMA 2 Chat through llama-cpp-python. That server happens to provide an OpenAI-like API, so I figured there must be a way to abuse the code to make it work with LangChain. Yet half an hour of Googling turned up nothing. So I decided to read the code.

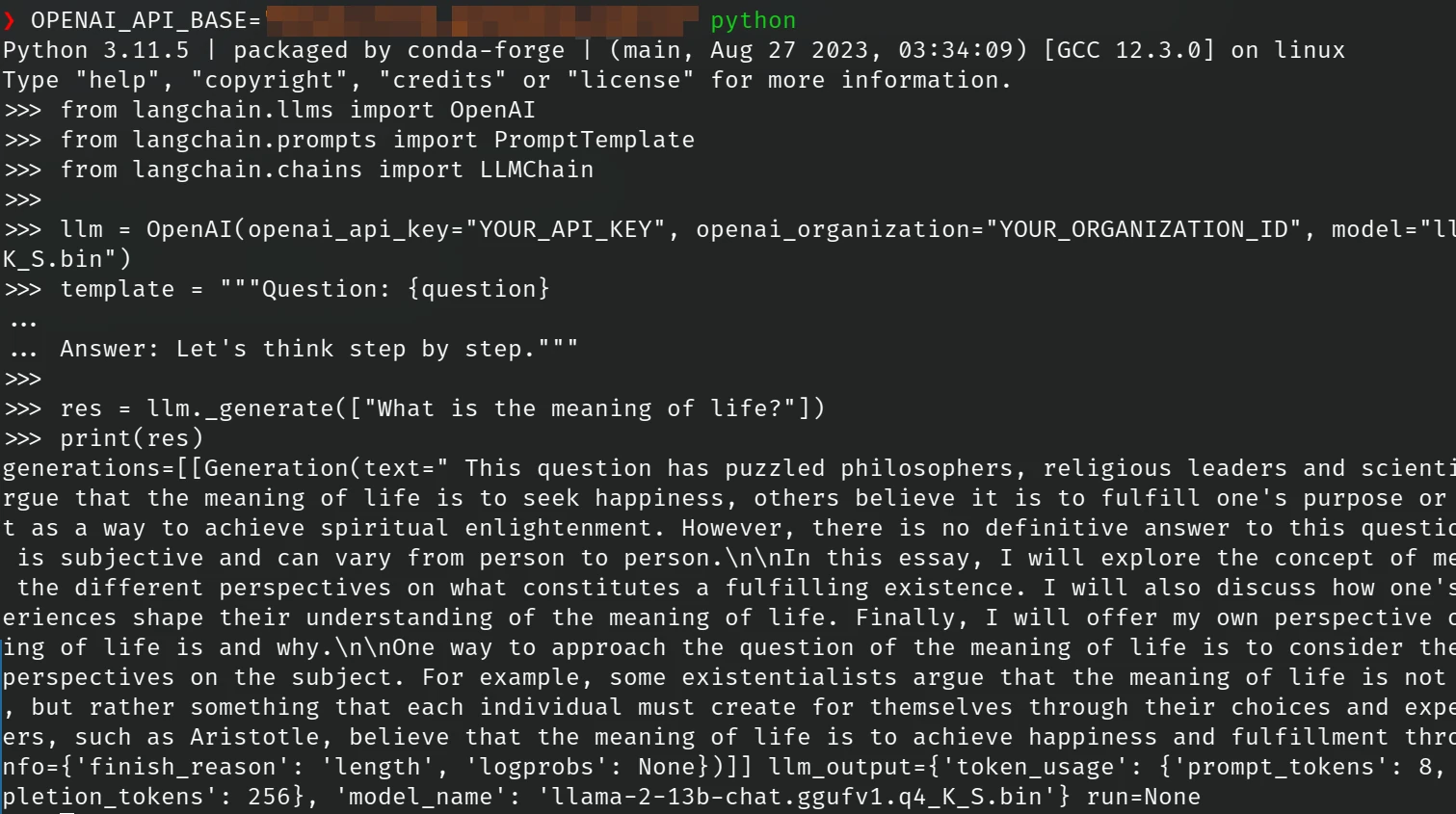

And... it's easy. The openai Python package reads the OPENAI_API_BASE environment variable to know where to send its requests. So just point it at the LLaMA server and you're good to go. Also, since llama-cpp-python doesn't do authentication, OPENAI_API_KEY can be set to anything, as long as it makes the Python package happy.

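```shell
export OPENAI_API_BASE="http://llm.your.domain.com/v1"
export OPENAI_API_KEY="anything"  # not checked by llama-cpp-python; the client just wants a value
```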
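If you keep this setup in a small file that you source before starting your LangChain script, you can also add a quick check that the server is reachable. The sketch below is my own addition, not from the original setup: `check_llm_server` and the file name `llm-env.sh` are hypothetical, and it assumes the server exposes the OpenAI-style `/v1/models` endpoint. It also mirrors the base URL into `OPENAI_BASE_URL`, on the assumption that you may be on a 1.x release of the openai package, which reads that variable instead of `OPENAI_API_BASE`.

```shell
# Reuse OPENAI_API_BASE if it is already exported; otherwise fall back to
# the placeholder URL used in this post.
export OPENAI_API_BASE="${OPENAI_API_BASE:-http://llm.your.domain.com/v1}"

# Assumption: openai>=1.0 reads OPENAI_BASE_URL rather than OPENAI_API_BASE,
# so copy the same value there for newer clients.
export OPENAI_BASE_URL="$OPENAI_API_BASE"

# Hypothetical helper: ask the llama-cpp-python server which models it serves.
# No Authorization header, since the server does not authenticate.
check_llm_server() {
    curl -sS "${OPENAI_API_BASE}/models"
}
```

Source it rather than executing it (`. ./llm-env.sh`), otherwise the exports only live in a child shell. Then run `check_llm_server`; a JSON list of models means the OpenAI-style API is answering.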

That's all. I hope this helps someone who searched and couldn't find anything.

Martin Chang

Systems software, HPC, GPGPU and AI. I mostly write stupid C++ code. Sometimes I do AI research. Chronic VRChat addict.

I run TLGS, a major search engine on Gemini. It is Buran's default search engine.

- marty1885 \at protonmail.com

- Matrix: @clehaxze:matrix.clehaxze.tw

- Jami: a72b62ac04a958ca57739247aa1ed4fe0d11d2df