Status Report: Building the HTTP/2 Client for Drogon

I left my last job at the start of November 2023 and have a week of free time before I join the new one. With time on hand, I decided to finish a feature request I made years ago in Drogon: an HTTP/2 client (and server, but that'll happen later). I never expected to get it almost finished in about 5 days. The protocol looks daunting at first. It requires you to implement your own flow control and multiplexing. But it's actually not that bad. The protocol is designed in a way that makes it very difficult to desync, and the flow control is, in fact, very simple. Totally not like TCP's complicated states and backoff algorithms.

Here is my PR for the client:

This post also serves as a way for me to re-learn the protocol, and it gives me a chance to make sure I didn't mess up something critical. I will try not to bore you with the finest details. Read the RFCs if you care about them.

Evolution to HTTP/2

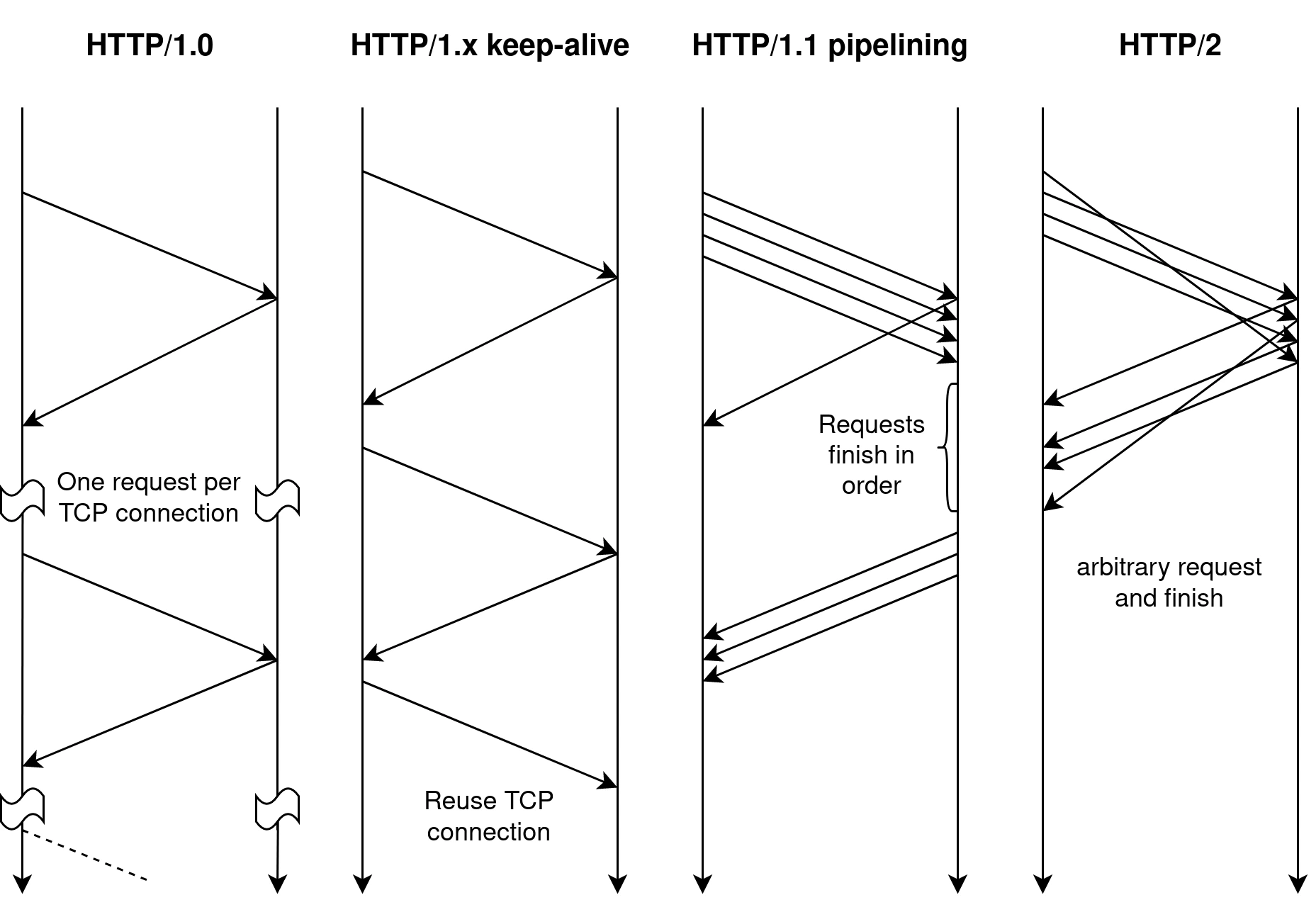

HTTP/1.1 has served us for a really, really long time, and we stuck with it until 2015. That is, until web pages got too complicated for HTTP/1.1. Web pages started to load more assets, and we started doing XHR requests. All of this made a flaw of HTTP/1.1 apparent: head-of-line blocking. HTTP/1.1 was designed as a document transportation protocol. You click on a link and you download one of a few things. Maybe an HTML page, maybe an image. Or an HTML page, then 3 images and 1 CSS file. But that's it. Modern web pages can easily make 40+ requests just to load the required assets. That's not even counting the XHR calls that JS frameworks like React and Vue love to make.

If this is such an issue, why wasn't HTTP/2 invented earlier? Surely we would have benefited back when ping was like 100ms even within your own town. I think there are 2 major reasons. First, in the very early days XHR wasn't a thing. Second, network speeds were much slower, so much so that bandwidth was the limiting factor. Even when it wasn't, a connection pool was good enough.

Before we get into HTTP/2, let's see how the OG HTTP/1.0 and 1.1 work.

HTTP/1.0

The earliest versions, HTTP/0.9 and HTTP/1.0, are in essence the "purest" form of HTTP. They are designed to transport a request for a single document and no more. You click on a link, you download a document. You click on another link, you download another document, whether it be HTML, an image, or a PDF. The following is an example of the most bare-bones HTTP/1.0 request.

GET /index.html HTTP/1.0

That's it. It is split into 4 parts. First is the method, which is GET in this case. Then comes the path, /index.html, followed by the protocol version, HTTP/1.0. Finally, an empty line marks the end of the (here nonexistent) headers. A GET request carries no body, so nothing follows.

Sending this request to a server results in a response, which is also very simple. The following is what I get from my blog's reverse proxy:

❯ nc clehaxze.tw 80
GET /index.html HTTP/1.0

HTTP/1.1 301 Moved Permanently
Server: nginx/1.24.0
Date: Sun, 26 Nov 2023 02:26:29 GMT
Content-Type: text/html
Content-Length: 169
Connection: close
Location: https://nina.clehaxze.tw/index.html

<html>
<head><title>301 Moved Permanently</title></head>
<body>
<center><h1>301 Moved Permanently</h1></center>
<hr><center>nginx/1.24.0</center>
</body>
</html>

Well, I've set up redirecting to HTTPS, so it redirects. Nevertheless, after I send the empty line, the server responds with HTTP/1.1 and the status code 301. Headers describing the response come next, and finally a simple HTML body. Answering an HTTP/1.0 request with HTTP/1.1 may look odd. It is actually what the RFC recommends, though: a server should reply with the highest version it supports within the request's major version. Clients handle it just fine.

One thing to note in the above example is the Connection: close header, which is not part of the HTTP/1.0 standard. By default, HTTP/1.0 connections close after the response is fully sent. This makes sense, as the protocol is only designed to send one document at a time. To request multiple documents, you just open connections one after another and pay for a TCP 3-way handshake each time. However, as we all know by now, this is slow. Thus, the Connection: keep-alive header was introduced later on. It instructs the server to keep the connection open after the response is sent, which avoids repeatedly creating new connections. Keeping the connection open is also the default behavior in HTTP/1.1.
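To make the persistence rules concrete, here is a minimal C++17 sketch of how an endpoint might decide whether to keep the connection open. The function names and the minorVersion parameter are hypothetical (this is not Drogon's code). The sketch assumes the Connection header value has already been extracted, and it treats that value as a comma-separated list of case-insensitive tokens.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <sstream>
#include <string>

// True if the comma-separated Connection header contains `token`,
// compared case-insensitively with surrounding spaces/tabs ignored.
bool hasConnectionToken(const std::string &header, const std::string &token)
{
    std::istringstream in(header);
    std::string item;
    while (std::getline(in, item, ','))
    {
        auto begin = item.find_first_not_of(" \t");
        if (begin == std::string::npos)
            continue; // empty list element
        auto end = item.find_last_not_of(" \t");
        item = item.substr(begin, end - begin + 1);
        if (item.size() == token.size() &&
            std::equal(item.begin(), item.end(), token.begin(), [](char a, char b) {
                return std::tolower(static_cast<unsigned char>(a)) ==
                       std::tolower(static_cast<unsigned char>(b));
            }))
            return true;
    }
    return false;
}

// HTTP/1.0: close by default unless "keep-alive" is requested.
// HTTP/1.1: stay open by default unless "close" is sent.
bool keepConnectionOpen(int minorVersion, const std::string &connectionHeader)
{
    if (minorVersion == 0)
        return hasConnectionToken(connectionHeader, "keep-alive");
    return !hasConnectionToken(connectionHeader, "close");
}
```

For example, keepConnectionOpen(0, "Keep-Alive") is true, while keepConnectionOpen(1, "close") is false.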

To demonstrate this, I'll send 3 requests to my server, each one after the previous one has been answered. Instead of closing the connection, the server keeps it open and responds to the next request.

❯ nc clehaxze.tw 80
GET /index.html HTTP/1.0
Connection: keep-alive

HTTP/1.1 301 Moved Permanently
Server: nginx/1.24.0
Date: Sun, 26 Nov 2023 02:51:40 GMT
...

GET /index.html HTTP/1.0
Connection: keep-alive

HTTP/1.1 301 Moved Permanently
Server: nginx/1.24.0
Date: Sun, 26 Nov 2023 02:51:40 GMT
...

GET /index.html HTTP/1.0
Connection: keep-alive

HTTP/1.1 301 Moved Permanently
Server: nginx/1.24.0
Date: Sun, 26 Nov 2023 02:51:40 GMT
...

HTTP/1.1

In practice, HTTP/1.1 is a simple upgrade to HTTP/1.0. It enables keep-alive connections by default and adds optional support for pipelining (though some servers still f**k this up, so clients don't enable it by default). To put it very bluntly, requests are simply lines of text. Because the connection is kept open, the client can dump multiple requests and wait for the server to respond. In the following example, I send 3 requests to my server without any delay, and the server answers with 3 responses, all on the same connection. (HTTP/1.1 also requires a Host header, to support virtual hosting.)

❯ nc clehaxze.tw 80
GET /index.html HTTP/1.1
Host: clehaxze.tw

GET /index.html HTTP/1.1
Host: clehaxze.tw

GET /index.html HTTP/1.1
Host: clehaxze.tw

HTTP/1.1 301 Moved Permanently
Server: nginx/1.24.0
Date: Sun, 26 Nov 2023 02:48:14 GMT
...

HTTP/1.1 301 Moved Permanently
Server: nginx/1.24.0
Date: Sun, 26 Nov 2023 02:48:14 GMT
...

HTTP/1.1 301 Moved Permanently
Server: nginx/1.24.0
Date: Sun, 26 Nov 2023 02:48:14 GMT
...

HTTP/2

Even with both keep-alive and pipelining, HTTP/1.1 still has problems, the biggest one being head-of-line blocking. Say the client wants to download 3 files: one JavaScript file and two CSS files. Unfortunately, the JavaScript is 20MB while each CSS file is a mere 5KB, and the client can't know that in advance. With pipelining, the client sends all 3 requests at once to reduce latency: the JavaScript request first, then the two CSS requests. The server then starts responding and runs into a problem. Because responses must come back in request order, the CSS cannot start transferring until the JavaScript is done. Both client and server are stuck waiting for 20MB of data. Imagine a worse scenario: one of the requests is an AJAX call served on the backend by some ancient VAX/VMS machine that takes 10 seconds to finish. Even if you have 500Mbps internet, you are still stuck waiting for the VMS machine.
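A toy model makes the difference visible. The sketch below is a simplification under stated assumptions. Time is counted in 16KB frames sent over a single link, and there is no RTT or server think time. The multiplexed case simply sends one frame per unfinished response in round-robin order; real HTTP/2 schedulers, Drogon's included, may differ. All names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Assumption: every frame carries at most 16 KiB (HTTP/2's default max frame size).
constexpr std::size_t kFrameSize = 16384;

std::size_t framesFor(std::size_t bytes)
{
    return (bytes + kFrameSize - 1) / kFrameSize;
}

// HTTP/1.1 pipelining: responses leave strictly in request order, so each
// one finishes only after everything queued before it.
std::vector<std::size_t> pipelinedFinish(const std::vector<std::size_t> &sizes)
{
    std::vector<std::size_t> finish;
    std::size_t sent = 0;
    for (auto size : sizes)
    {
        sent += framesFor(size);
        finish.push_back(sent);
    }
    return finish;
}

// Multiplexing: one frame per unfinished response, round robin.
// Returns the frame count at which each response completes.
std::vector<std::size_t> multiplexedFinish(const std::vector<std::size_t> &sizes)
{
    std::vector<std::size_t> remaining, finish(sizes.size(), 0);
    std::size_t active = 0;
    for (auto size : sizes)
    {
        remaining.push_back(framesFor(size));
        if (remaining.back() > 0)
            ++active;
    }
    std::size_t sent = 0;
    while (active > 0)
    {
        for (std::size_t i = 0; i < remaining.size(); ++i)
        {
            if (remaining[i] == 0)
                continue;
            ++sent;
            if (--remaining[i] == 0)
            {
                finish[i] = sent;
                --active;
            }
        }
    }
    return finish;
}
```

Take sizes of 20MB, 5KB and 5KB. When pipelined, the stylesheets finish after 1281 and 1282 frames. When multiplexed, they finish after 2 and 3 frames, while the script only slips from 1280 to 1282.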

Browsers sort of mitigate this by opening multiple connections. IIRC, Chrome opens 6 connections per domain, then schedules requests onto different connections based on their purpose. This is not ideal, however. It mitigates the problem without solving it outright, so head-of-line blocking can still show up once all connections are saturated.

Solution? HTTP/2. See, HTTP/1.x is a text-based protocol, and pipelining depends on the order of the responses. HTTP/2 solves this by being a binary protocol and introducing multiplexing, which allows multiple requests to be in flight on a single connection concurrently. Each request is assigned a stream ID, and the server responds on the same stream ID. Furthermore, both requests and responses are split into frames, each tagged with its stream ID. This lets the server respond to requests in any order, even interleaving them, without any ambiguity.

Besides multiplexing, HTTP/2 also introduces header compression. The HPACK algorithm is designed to resist compression side-channel attacks such as CRIME, and it avoids sending the same headers over and over again. This is a huge performance improvement, especially when you are sending a lot of requests to the same domain.
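HPACK itself is too large to sketch here, but its basic building block is small: the prefixed integer from RFC 7541 Section 5.1. The sketch below (hypothetical function names) encodes and decodes an integer with an N-bit prefix. As an example of the savings, :method: GET is entry 2 in HPACK's static table. The whole header can therefore be sent as the single byte 0x82, an indexed field with the high bit set and the index in a 7-bit prefix.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Encode `value` with an N-bit prefix (RFC 7541, Section 5.1).
// `firstByteFlags` holds the bits above the prefix, e.g. 0x80 for an indexed field.
void encodeHpackInteger(std::vector<uint8_t> &out, uint64_t value,
                        int prefixBits, uint8_t firstByteFlags = 0)
{
    const uint64_t maxPrefix = (1u << prefixBits) - 1;
    if (value < maxPrefix)
    {
        out.push_back(static_cast<uint8_t>(firstByteFlags | value));
        return;
    }
    out.push_back(static_cast<uint8_t>(firstByteFlags | maxPrefix));
    value -= maxPrefix;
    while (value >= 128)
    {
        out.push_back(static_cast<uint8_t>(value % 128 + 128)); // continuation bit set
        value /= 128;
    }
    out.push_back(static_cast<uint8_t>(value));
}

// Decode an N-bit-prefix integer starting at data[pos]; advances pos on success.
std::optional<uint64_t> decodeHpackInteger(const std::vector<uint8_t> &data,
                                           std::size_t &pos, int prefixBits)
{
    if (pos >= data.size())
        return std::nullopt;
    const uint64_t maxPrefix = (1u << prefixBits) - 1;
    uint64_t value = data[pos++] & maxPrefix;
    if (value < maxPrefix)
        return value;
    int shift = 0;
    while (pos < data.size())
    {
        uint8_t byte = data[pos++];
        value += static_cast<uint64_t>(byte & 0x7f) << shift;
        if ((byte & 0x80) == 0)
            return value;
        shift += 7;
        if (shift > 56) // refuse absurdly long encodings
            return std::nullopt;
    }
    return std::nullopt; // ran out of input mid-integer
}
```

Encoding 1337 with a 5-bit prefix gives 0x1F 0x9A 0x0A, which is the worked example in RFC 7541 Appendix C.1.2.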

HTTP/2 concepts

Frames

As TCP is a stream-based protocol, HTTP/2 needs to split the stream into frames in order to multiplex. The format can be found in RFC 7540 Section 4.1, and the following is the frame format diagram from there. (RFC 9113 obsoletes RFC 7540, but it describes the same layout in a different notation instead of this diagram.)

+-----------------------------------------------+
|                 Length (24)                   |
+---------------+---------------+---------------+
|   Type (8)    |   Flags (8)   |
+-+-------------+---------------+-------------------------------+
|R|                 Stream Identifier (31)                      |
+=+=============================================================+
|                   Frame Payload (0...)                      ...
+---------------------------------------------------------------+

Each frame starts with a 24-bit length field, followed by an 8-bit type field and an 8-bit flags field. Next is a 32-bit field whose most significant bit is reserved and whose remaining 31 bits hold the stream ID. The payload comes last. The length field holds the length of the payload only, so the whole frame is 9 bytes longer than the length field says. The type field identifies the type of the frame. The RFC defines the following types:
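Parsing that fixed 9-byte header is straightforward. Here is a self-contained sketch that also masks off the reserved bit. The struct and function names are made up for illustration and are not Drogon's API; the type codes come from the RFC.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>

// Frame type codes from RFC 9113, Section 6 (same values as RFC 7540).
enum class FrameType : uint8_t
{
    Data = 0x0, Headers = 0x1, Priority = 0x2, RstStream = 0x3,
    Settings = 0x4, PushPromise = 0x5, Ping = 0x6, GoAway = 0x7,
    WindowUpdate = 0x8, Continuation = 0x9
};

struct FrameHeader
{
    uint32_t length;   // payload length only; the whole frame is 9 bytes longer
    uint8_t type;      // kept raw so unknown types can still be skipped
    uint8_t flags;
    uint32_t streamId; // reserved MSB already masked off
};

// Parse the fixed 9-byte frame header; nullopt if fewer than 9 bytes are available.
std::optional<FrameHeader> parseFrameHeader(const uint8_t *data, std::size_t size)
{
    if (size < 9)
        return std::nullopt;
    FrameHeader h;
    h.length = (uint32_t(data[0]) << 16) | (uint32_t(data[1]) << 8) | data[2];
    h.type = data[3];
    h.flags = data[4];
    h.streamId = ((uint32_t(data[5]) << 24) | (uint32_t(data[6]) << 16) |
                  (uint32_t(data[7]) << 8) | data[8]) & 0x7fffffffu;
    return h;
}
```

Because the type is kept as a raw byte, a reader can skip a frame of unknown type by discarding 9 + length bytes.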

+---------------+------+--------------------------------------------------+
| Type          | Code | Meaning                                          |
+---------------+------+--------------------------------------------------+
| DATA          | 0x0  | Carries the request or response body             |
| HEADERS       | 0x1  | Carries the headers of a request or response     |
| PRIORITY      | 0x2  | Carries stream priority information              |
| RST_STREAM    | 0x3  | Terminates a stream                              |
| SETTINGS      | 0x4  | Configuration parameters for the connection      |
| PUSH_PROMISE  | 0x5  | Used to initiate server push                     |
| PING          | 0x6  | Used to test liveness of the connection          |
| GOAWAY        | 0x7  | Notifies shutdown of the connection              |
| WINDOW_UPDATE | 0x8  | Updates a flow-control window                    |
| CONTINUATION  | 0x9  | Continues a HEADERS or PUSH_PROMISE header block |
|               |      | that is too large to fit into a single frame     |
+---------------+------+--------------------------------------------------+

Each frame type has its own format, and some frames have optional fields that are only present when certain flags (in the flags field) are set. For example, the DATA frame has a Pad Length field and padding that are only present when the PADDED flag is set. The following is the DATA frame format from RFC 7540 Section 6.1. The Pad Length field is marked with a ? to indicate that it is optional. IT ONLY EXISTS WHEN THE PADDED FLAG IS SET. If the flag is not set, that entire field is omitted from the frame, not merely ignored during parsing.

+---------------+
|Pad Length? (8)|   <-- Only exists when the PADDED flag is set
+---------------+-----------------------------------------------+
|                            Data (*)                         ...
+---------------------------------------------------------------+
|                           Padding (*)                       ...
+---------------------------------------------------------------+
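Here is a sketch of what the PADDED rule means for a parser, assuming the payload has already been separated from the 9-byte frame header (the names are hypothetical). PADDED is flag 0x8 on DATA frames. If the padding is at least as long as the frame payload, the receiver must treat that as a connection error of type PROTOCOL_ERROR.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string_view>

constexpr uint8_t kFlagPadded = 0x8; // PADDED flag of DATA (and HEADERS) frames

// Given a DATA frame payload (frame header already stripped), return the
// application data without Pad Length and padding. nullopt means the frame
// is malformed, which the RFC treats as a PROTOCOL_ERROR.
std::optional<std::string_view> dataFromPayload(std::string_view payload, uint8_t flags)
{
    if (!(flags & kFlagPadded))
        return payload; // no Pad Length field exists at all
    if (payload.empty())
        return std::nullopt; // PADDED set, but no room for Pad Length
    const std::size_t padLength = static_cast<uint8_t>(payload[0]);
    payload.remove_prefix(1);
    if (padLength > payload.size())
        return std::nullopt; // padding at least as long as the whole frame payload
    return payload.substr(0, payload.size() - padLength);
}
```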

HTTP/2 headers, Pseudo headers and CONTINUATION frames

HTTP/1.x puts the method and path in the request line, but HTTP/2 doesn't have one. Instead of adding a frame for the request line, it jams them into the headers and calls them pseudo-headers. Pseudo-headers are prefixed with a :. For example, the following HTTP/1.1 request is translated into the HTTP/2 request next to it.

HTTP/1.1 Request                     HTTP/2 Request
GET /index.html HTTP/1.1             :method: GET
Host: clehaxze.tw            ->      :scheme: https
User-Agent: DrogonClient             :authority: clehaxze.tw
                                     :path: /index.html
                                     user-agent: DrogonClient

HTTP/2 also imposes a normalization here. Header names in HTTP/1.x are case-insensitive, but most implementations send them in mixed case, as that's what servers have historically done. HTTP/2 demands that ALL header names be lowercase.
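The translation can be sketched as follows. This is a simplified, hypothetical helper and not Drogon's implementation. It emits the pseudo-headers first, as the RFC requires, and turns Host into :authority. Every other header name is lowercased, and the connection-specific headers that HTTP/2 forbids are dropped: Connection, Keep-Alive, Proxy-Connection, Transfer-Encoding and Upgrade.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>
#include <utility>
#include <vector>

using HeaderList = std::vector<std::pair<std::string, std::string>>;

std::string toLower(std::string s)
{
    std::transform(s.begin(), s.end(), s.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return s;
}

// Build an HTTP/2 header list from an HTTP/1.1-style request.
HeaderList toHttp2Headers(const std::string &method, const std::string &path,
                          const std::string &scheme, const HeaderList &http1Headers)
{
    std::string authority;
    HeaderList regular;
    for (const auto &[name, value] : http1Headers)
    {
        std::string lower = toLower(name);
        if (lower == "host")
            authority = value; // Host becomes :authority
        else if (lower == "connection" || lower == "keep-alive" ||
                 lower == "proxy-connection" || lower == "transfer-encoding" ||
                 lower == "upgrade")
            continue; // connection-specific headers are not allowed in HTTP/2
        else
            regular.emplace_back(std::move(lower), value);
    }
    // Pseudo-headers must precede all regular headers.
    HeaderList out{{":method", method}, {":scheme", scheme}};
    if (!authority.empty())
        out.emplace_back(":authority", authority);
    out.emplace_back(":path", path);
    out.insert(out.end(), regular.begin(), regular.end());
    return out;
}
```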

Here's where a common vulnerability comes in. HTTP/1.x uses \r\n to separate headers, but HTTP/2, as a binary protocol, uses length fields. Thus, it is possible to encode a \r\n inside a header value. Suppose a server running as a reverse proxy is not careful. An attacker can then inject a header that becomes two when translated to HTTP/1.1:

HTTP/2 Request                                       HTTP/1.1 Request
...                                                  ...
my-header: my-value\r\nX-Forwarded-For: localhost    my-header: my-value
                                                     X-Forwarded-For: localhost

If your backend trusts the X-Forwarded-For header, BOOM, people can now spoof their IP to your backend. Furthermore, the same method can be used to inject an entire request. Be very careful and do validate your headers.
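A defensive check is cheap. The sketch below (hypothetical function names) follows the field rules in RFC 9113 Section 8.2.1. Names may not contain uppercase letters, control characters, spaces or non-ASCII bytes, and may not contain a colon except the leading one of a pseudo-header. Values may not contain NUL, CR or LF, and may not start or end with a space or tab. Rejecting such headers stops the injection above before it reaches an HTTP/1.1 backend.

```cpp
#include <cassert>
#include <cstddef>
#include <string_view>

// Field name rules from RFC 9113, Section 8.2.1.
bool isValidFieldName(std::string_view name)
{
    if (name.empty())
        return false;
    const std::size_t start = name[0] == ':' ? 1 : 0; // pseudo-headers start with one colon
    if (start == name.size())
        return false;
    for (std::size_t i = start; i < name.size(); ++i)
    {
        const unsigned char c = static_cast<unsigned char>(name[i]);
        if (c <= 0x20 || (c >= 'A' && c <= 'Z') || c >= 0x7f || c == ':')
            return false;
    }
    return true;
}

// Field value rules: no NUL/CR/LF anywhere, no leading/trailing SP or HTAB.
bool isValidFieldValue(std::string_view value)
{
    for (char ch : value)
        if (ch == '\0' || ch == '\r' || ch == '\n')
            return false;
    if (!value.empty())
    {
        const char first = value.front(), last = value.back();
        if (first == ' ' || first == '\t' || last == ' ' || last == '\t')
            return false;
    }
    return true;
}
```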

HTTP/2 supports headers of arbitrary size. In the very rare case that the encoded header block is larger than the negotiated maximum frame size, the peer must break it up into a HEADERS frame and one or more CONTINUATION frames. This is a major pain. To avoid mixing up the HPACK encoder/decoder state, the RFC requires the CONTINUATION frames to immediately follow the HEADERS frame. No other frame, of any type or on any stream, may be sent in between. The receiving side has to validate this too! The rule applies to both sending and receiving.
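On the receiving side, the contiguity rule boils down to a small amount of state. Here is a sketch, assuming frames are fed in arrival order; the class name and constants are hypothetical, while the type and flag values are the RFC's. Once a HEADERS frame without END_HEADERS arrives, the only acceptable next frame is a CONTINUATION on the same stream. This holds until one of them carries END_HEADERS.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint8_t kTypeHeaders = 0x1;
constexpr uint8_t kTypePushPromise = 0x5;
constexpr uint8_t kTypeContinuation = 0x9;
constexpr uint8_t kFlagEndHeaders = 0x4;

// Tracks whether a header block is open and rejects any frame that would
// interleave with it. A false return means a connection error (PROTOCOL_ERROR).
class HeaderBlockTracker
{
  public:
    bool onFrame(uint8_t type, uint8_t flags, uint32_t streamId)
    {
        if (openStream_ != 0)
        {
            // Only a CONTINUATION on the same stream may follow.
            if (type != kTypeContinuation || streamId != openStream_)
                return false;
        }
        else if (type == kTypeContinuation)
        {
            return false; // CONTINUATION without a preceding HEADERS
        }
        if (type == kTypeHeaders || type == kTypePushPromise || type == kTypeContinuation)
            openStream_ = (flags & kFlagEndHeaders) ? 0 : streamId;
        return true;
    }

  private:
    uint32_t openStream_ = 0; // 0 means no header block in progress
};
```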

Personally, I hate this design. The smallest maximum frame size the RFC allows is 16KB. Considering HPACK already compresses the headers, that's a lot of headers. When you need to send that many, you are better off sending them in the body, where flow control can help. Not to mention that this rule reduces the concurrency of the protocol.

Life cycle of a HTTP/2 request

Unlike HTTP/1.x, where you simply dump the request and wait for the response, HTTP/2 expects the client and server to manage their own stream state and flow control. To initiate a request, the client sends a HEADERS frame with a new stream ID. Per the RFC, all client-initiated streams must have odd stream IDs (servers use even IDs), and stream IDs must be monotonically increasing.
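A stream ID allocator following these rules could look like the sketch below (a hypothetical class, not the one in the PR). It hands out IDs two apart, starting from 1 for a client, and reports exhaustion once the next ID would exceed 2^31 - 1.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

// Hands out stream IDs that are odd (client, first = 1) or even (server, first = 2),
// strictly increasing, and never above 2^31 - 1.
class StreamIdAllocator
{
  public:
    explicit StreamIdAllocator(uint32_t first = 1) : next_(first) {}

    std::optional<uint32_t> next()
    {
        if (next_ > kMaxStreamId)
            return std::nullopt; // exhausted: a new connection is required
        const uint32_t id = next_;
        next_ += 2; // cannot overflow: at most 0x7fffffff + 2
        return id;
    }

  private:
    static constexpr uint32_t kMaxStreamId = 0x7fffffffu;
    uint32_t next_;
};
```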

If the header block is too large to fit into a single frame, the client can split it across CONTINUATION frames. This is unlikely, since even the minimum frame size every implementation must support (16KB) is plenty for typical headers. The client tells the server it has finished sending the headers by setting the END_HEADERS flag on the last HEADERS or CONTINUATION frame. If there is a body, one or more DATA frames containing it follow. When done, the client sets the END_STREAM flag on the last DATA frame. This tells the server that the request is complete, and the server can start sending the response.

The same thing happens when the server sends its response. The server sends HEADERS and then DATA frames to transmit the response, and it sets the END_STREAM flag on the last DATA frame. Once the client has received both the END_HEADERS and END_STREAM flags, it knows that the response is done, and the stream is considered fully closed.

You might find it weird that there's an explicit END_HEADERS flag. Isn't the transition from HEADERS/CONTINUATION to DATA frames enough? Well, no. HTTP/2 supports trailers, a not very well-known feature that already existed in HTTP/1.1 (with chunked transfer encoding). Trailers allow the server to send additional headers after the response body. This is useful for headers that are difficult to calculate before the response is sent, for example the hash of a very large file, maybe used as an ETag.

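Note that END_STREAM can only be set once all headers and data have been sent. END_HEADERS, on the other hand, only ends the current header block, so with trailers another header block can follow the body. A stream also cannot finish while a header block is still open. If END_STREAM arrives on a HEADERS frame without END_HEADERS, the stream only ends once CONTINUATION frames complete the header block. This complicates the state machine a bit. HTTP/2 also provides an optimization: a HEADERS frame can carry both END_STREAM and END_HEADERS, which lets the client send a request with an empty body as a single frame.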
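The rules above can be tracked with a few booleans. The following is a hypothetical sketch for the receiving side of a single response stream, not Drogon's state machine. It assumes the frames of the stream arrive in order and reports any frame that arrives in a forbidden order. Unlike the Drogon client, it tolerates trailers, i.e. a second header block after the body.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint8_t kFlagEndStream = 0x1;
constexpr uint8_t kFlagEndHeaders = 0x4;

enum class FrameKind { Headers, Continuation, Data };

// Tracks one response stream. Returns false when a frame arrives in an order
// the protocol forbids (the caller would then reset the stream or connection).
class ResponseState
{
  public:
    bool onFrame(FrameKind kind, uint8_t flags)
    {
        if (complete())
            return false; // nothing may follow a fully received response
        switch (kind)
        {
        case FrameKind::Headers:
            if (inHeaderBlock_ || endStreamSeen_)
                return false;
            inHeaderBlock_ = true;
            endStreamSeen_ = (flags & kFlagEndStream) != 0; // END_STREAM may ride on HEADERS
            break;
        case FrameKind::Continuation:
            if (!inHeaderBlock_)
                return false;
            break;
        case FrameKind::Data:
            if (inHeaderBlock_ || !gotHeaders_)
                return false; // body before the first header block is complete
            endStreamSeen_ = (flags & kFlagEndStream) != 0;
            return true;
        }
        if (flags & kFlagEndHeaders)
        {
            inHeaderBlock_ = false;
            gotHeaders_ = true;
        }
        return true;
    }

    // Done only when END_STREAM was seen and no header block is still open.
    bool complete() const { return endStreamSeen_ && !inHeaderBlock_; }

  private:
    bool inHeaderBlock_ = false;
    bool gotHeaders_ = false;
    bool endStreamSeen_ = false;
};
```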

Flow control

Because HTTP/2 multiplexes many streams over one TCP connection, TCP's own flow control, which only sees the connection as a whole, is no longer sufficient. Thus, HTTP/2 introduces its own flow control. It is designed to be simple and easy to implement. Each stream, as well as the overall connection, has its own flow-control window. Each byte of DATA frame payload decreases both the connection-level and the stream-level window by 1. When a window reaches 0, the sender must stop sending DATA frames and wait for the receiver to send a WINDOW_UPDATE frame that increases the window. This way the receiver can control the rate of the sender.

It is important to note that HTTP/2 flow control only applies to DATA frames. All other frames are not flow controlled. This prevents critical frames, like WINDOW_UPDATE itself, from being blocked.
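A single window can be modelled as below. This is a sketch with hypothetical names. A sender would keep one instance per stream plus one for the connection, and may send at most min(stream, connection) bytes of DATA. The value is signed because a SETTINGS_INITIAL_WINDOW_SIZE change can legitimately push a window below zero. The RFC caps any window at 2^31 - 1 and treats overflow as a FLOW_CONTROL_ERROR.

```cpp
#include <cassert>
#include <cstdint>

// One flow-control window (kept once per stream and once for the connection).
// RFC 9113: initial size 65,535; a window must never exceed 2^31 - 1.
class FlowWindow
{
  public:
    explicit FlowWindow(int64_t initial = 65535) : size_(initial) {}

    // Bytes that may be sent right now (a negative window allows none).
    int64_t available() const { return size_ > 0 ? size_ : 0; }

    // Debit the window for DATA payload bytes; false if they don't fit.
    bool consume(uint32_t bytes)
    {
        if (bytes > available())
            return false;
        size_ -= bytes;
        return true;
    }

    // Apply a WINDOW_UPDATE; false on overflow (a FLOW_CONTROL_ERROR).
    bool increase(uint32_t increment)
    {
        if (size_ + increment > kMaxWindow)
            return false;
        size_ += increment;
        return true;
    }

  private:
    static constexpr int64_t kMaxWindow = 0x7fffffff;
    int64_t size_;
};
```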

Error handling

As a binary protocol, HTTP/2 must prevent the client and server from getting out of sync, since it is very difficult to resync later on. For example, the frame format puts the length field at the beginning. This lets the receiver know exactly how many bytes to read without doing any parsing. Even when an unsupported frame type is received, the receiver can still read the length field and skip the entire frame. But framing is not the only thing that can desync the client and server. The header compression state, SETTINGS application, flow control, etc. can all desync if there's a bug in one of the implementations. Thus, HTTP/2 defines connection errors. When an endpoint encounters a connection error, it should first send a GOAWAY frame to notify the other party, which hopefully helps them debug the issue. Then the connection is killed.

Some errors are simpler, like the Content-Length header not matching the actual body length. For those, the receiver can send a RST_STREAM frame to terminate just that stream, while the overall connection is kept alive.
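Both frames have tiny payloads. The sketch below builds them with hypothetical helpers, using error codes from RFC 9113 Section 7. GOAWAY (type 0x7, always on stream 0) carries the last stream ID the sender processed, an error code and optional debug data; that debug data is where a debug message goes. RST_STREAM (type 0x3) carries only an error code.

```cpp
#include <cassert>
#include <cstdint>
#include <string_view>
#include <vector>

// Error codes from RFC 9113, Section 7 (subset).
enum class H2Error : uint32_t
{
    NoError = 0x0, ProtocolError = 0x1, InternalError = 0x2,
    FlowControlError = 0x3, FrameSizeError = 0x6, Cancel = 0x8
};

void appendU32BE(std::vector<uint8_t> &out, uint32_t v)
{
    for (int shift = 24; shift >= 0; shift -= 8)
        out.push_back(static_cast<uint8_t>(v >> shift));
}

// GOAWAY payload: last processed stream ID, error code, optional debug data.
std::vector<uint8_t> goAwayPayload(uint32_t lastStreamId, H2Error code, std::string_view debug)
{
    std::vector<uint8_t> out;
    appendU32BE(out, lastStreamId & 0x7fffffffu); // reserved bit must be 0
    appendU32BE(out, static_cast<uint32_t>(code));
    out.insert(out.end(), debug.begin(), debug.end());
    return out;
}

// RST_STREAM payload: just the error code (sent on the stream being reset).
std::vector<uint8_t> rstStreamPayload(H2Error code)
{
    std::vector<uint8_t> out;
    appendU32BE(out, static_cast<uint32_t>(code));
    return out;
}
```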

Starting HTTP/2

Obviously we need a way to tell the server that we want to use HTTP/2 without changing the server port or the URL scheme. HTTP does provide the 101 Switching Protocols status code. However, that costs a round trip and brings all the messy stuff that comes with switching when there are pipelined requests. So instead, HTTP/2 over TLS uses the ALPN TLS extension. It allows the client to give the server a list of protocols it supports, and the server picks one from the list. If the server picks HTTP/2, that choice is part of the TLS handshake, and the client knows to use HTTP/2. In OpenSSL, this is done by calling SSL_CTX_set_alpn_protos on the client and SSL_CTX_set_alpn_select_cb on the server. In Botan, the client passes a std::vector<std::string> of protocols when constructing the TLS::Client object, and the server implements tls_server_choose_app_protocol.
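ALPN protocol names travel in a simple length-prefixed format (RFC 7301). The helper below, whose name is hypothetical, builds that buffer for the client's offer list.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>
#include <vector>

// Encode protocol names in ALPN "protocol-list" wire format (RFC 7301):
// each name prefixed by its one-byte length, all concatenated.
std::vector<unsigned char> alpnWireFormat(const std::vector<std::string> &protocols)
{
    std::vector<unsigned char> out;
    for (const auto &p : protocols)
    {
        if (p.empty() || p.size() > 255)
            throw std::invalid_argument("ALPN protocol names must be 1-255 bytes");
        out.push_back(static_cast<unsigned char>(p.size()));
        out.insert(out.end(), p.begin(), p.end());
    }
    return out;
}
```

With OpenSSL, the client would pass a buffer like this to SSL_CTX_set_alpn_protos along with its size. Watch out: unlike most OpenSSL functions, that one returns 0 on success.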

After the TLS handshake is done, the client sends the HTTP/2 connection preface. This is a fixed string of bytes that identifies the connection as HTTP/2. Its purpose is to make sure that the server actually supports HTTP/2, in case an HTTP/1.1 server somehow ends up on the other end.

PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n

The above is the connection preface. It is designed to look enough like HTTP/1.x that an HTTP/1.x server answers with an invalid-request response instead of crashing, which matters for crappy implementations and middleboxes. This is also the only place where "HTTP/2.0" is mentioned; everywhere else it's just "HTTP/2". The PRI ... SM is supposedly a reference to the NSA's PRISM program, eternally solidified in the HTTP/2 standard to remind us that we are being watched.
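On the wire, the preface is exactly 24 bytes. The client simply writes them. The receiving side can validate them incrementally, as in this hypothetical helper, so that a plain HTTP/1.1 request is rejected on its first byte instead of after 24.

```cpp
#include <cassert>
#include <string_view>

// The 24-byte client connection preface (RFC 9113, Section 3.4).
constexpr std::string_view kClientPreface = "PRI * HTTP/2.0\r\n\r\nSM\r\n\r\n";

enum class PrefaceCheck { NeedMore, Ok, Invalid };

// Compare whatever has arrived so far against the preface, so a mismatch is
// detected as soon as the first wrong byte shows up.
PrefaceCheck checkPreface(std::string_view received)
{
    const std::string_view expected = kClientPreface.substr(0, received.size());
    if (received.substr(0, expected.size()) != expected)
        return PrefaceCheck::Invalid;
    return received.size() >= kClientPreface.size() ? PrefaceCheck::Ok
                                                    : PrefaceCheck::NeedMore;
}
```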

Once the preface is sent, both parties officially start speaking HTTP/2, and all frame and stream management begins. The RFC requires the first frame each side sends to be a SETTINGS frame that announces its settings to the other party. However, both parties may start sending requests/pushes before they acknowledge the peer's SETTINGS frame. After the required SETTINGS frame is sent, everything is fair game.
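A SETTINGS payload is just a list of 6-byte entries. The following sketch serializes one; the names are hypothetical, and the identifiers come from RFC 9113 Section 6.5.2. The matching acknowledgement is a SETTINGS frame with the ACK flag (0x1) and an empty payload.

```cpp
#include <cassert>
#include <cstdint>
#include <utility>
#include <vector>

// SETTINGS identifiers from RFC 9113, Section 6.5.2.
enum class SettingId : uint16_t
{
    HeaderTableSize = 0x1, EnablePush = 0x2, MaxConcurrentStreams = 0x3,
    InitialWindowSize = 0x4, MaxFrameSize = 0x5, MaxHeaderListSize = 0x6
};

// Each setting is a 16-bit identifier followed by a 32-bit value, big endian.
std::vector<uint8_t> settingsPayload(const std::vector<std::pair<SettingId, uint32_t>> &settings)
{
    std::vector<uint8_t> out;
    for (const auto &[id, value] : settings)
    {
        const auto raw = static_cast<uint16_t>(id);
        out.push_back(static_cast<uint8_t>(raw >> 8));
        out.push_back(static_cast<uint8_t>(raw));
        for (int shift = 24; shift >= 0; shift -= 8)
            out.push_back(static_cast<uint8_t>(value >> shift));
    }
    return out;
}
```

Serializing a single EnablePush = 0 entry yields 00 02 00 00 00 00. That matches the length=6 SETTINGS frame with SETTINGS_ENABLE_PUSH(0x02):0 that the client sends in the nghttpd log further down.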

Implementing the HTTP/2 client

Now I'll share the implementation details. Since I'm building the client for Drogon, there's not much to say about the stack and style. Threading and networking are handled by trantor. The client is not expected to be MT-safe, since upper layers handle that. I spent about half a day (drunk, to have the energy for it) dissecting the existing HTTP client and isolating the HTTP/1.x-specific parts into their own class. Then I restructured the actual threading and timer logic, so that the HTTP/2 client can be dropped in dynamically based on the ALPN result.

Before writing the HTTP/2 client, I set a few design goals for myself:

- Memory-safe C++, unless that forces unneeded allocations

- Efficient and fast

I also decided to completely ignore the following features, as I don't think anyone using Drogon will need them:

- Server push

- Trailer headers

- Prioritization

- h2c

Parsing and Serializing the HTTP/2 frames

First, of course, I started with parsing the frames. The API I eventually ended up with is the following. On success, it returns an object T that contains the parsed frame. On failure, it returns std::nullopt. The flags parameter passes in the flags read from the frame header. I would love to use std::expected here, since it would allow cleaner and better error handling, but I'm limited to C++17. std::optional will suffice.

std::optional<T> T::parse(ByteStream &payload, uint8_t flags);

The ByteStream is a simple wrapper around trantor::MsgBuffer. It provides several portable functions to read bytes from the buffer, as well as automatic bounds checking. Effort is made to make sure these bounds checks are never triggered, so I'm still debating with myself whether bounds checking should stay enabled in release builds. For example, the function parsing the WINDOW_UPDATE frame looks like this:

std::optional<WindowUpdateFrame> WindowUpdateFrame::parse(ByteStream &payload,
                                                          uint8_t flags)
{
    if (payload.size() != 4)
    {
        LOG_TRACE << "Invalid window update frame length";
        return std::nullopt;
    }
    WindowUpdateFrame frame;
    // MSB is reserved for future use
    auto [_, windowSizeIncrement] = payload.readBI31BE();
    frame.windowSizeIncrement = windowSizeIncrement;
    return frame;
}
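For readers without trantor at hand, here is a hypothetical stand-in for such a reader: a bounds-checked, big-endian byte reader over a std::vector. It is not the actual ByteStream class. From the structured binding above, it only assumes that readBI31BE() yields the reserved bit followed by the 31-bit value. This version throws on an out-of-bounds read, which is one possible answer to the release-build question.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical minimal reader: big-endian reads with bounds checking.
class MiniByteStream
{
  public:
    explicit MiniByteStream(std::vector<uint8_t> data) : data_(std::move(data)) {}

    // Bytes left to read.
    std::size_t size() const { return data_.size() - pos_; }

    uint8_t readU8()
    {
        require(1);
        return data_[pos_++];
    }

    uint32_t readU32BE()
    {
        require(4);
        uint32_t v = 0;
        for (int i = 0; i < 4; ++i)
            v = (v << 8) | data_[pos_++];
        return v;
    }

    // Returns {reserved bit, 31-bit value}.
    std::pair<bool, uint32_t> readBI31BE()
    {
        const uint32_t raw = readU32BE();
        return {(raw >> 31) != 0, raw & 0x7fffffffu};
    }

  private:
    void require(std::size_t n) const
    {
        if (size() < n)
            throw std::out_of_range("read past end of buffer");
    }

    std::vector<uint8_t> data_;
    std::size_t pos_ = 0;
};
```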

Serialization works basically the same way, and here I leverage the same abstraction to enable batched writes. The API looks like the following. I debated with myself whether the flags parameter should be passed by reference or just returned. Ultimately I decided to pass it by reference, as it makes the API cleaner and I couldn't figure out clean semantics for returning it. std::optional conveys the wrong meaning, and std::pair just leads me back into the ugly Golang style.

bool T::serialize(OByteStream &payload, uint8_t &flags) const;

In the same spirit, the frame data is sanity checked before being serialized. For example:

bool WindowUpdateFrame::serialize(OByteStream &stream, uint8_t &flags) const
{
    flags = 0x0;
    if (windowSizeIncrement & (1U << 31))
    {
        LOG_TRACE << "MSB of windowSizeIncrement should be 0";
        return false;
    }
    stream.writeU32BE(windowSizeIncrement);
    return true;
}

Batched writes work by keeping an object-scoped OByteStream and holding off on sending until I'm done processing incoming data or the current request. One complication is keeping copies and allocations down. The HTTP/2 frame format puts the length field at the beginning, but I can't know the length until I'm done serializing. The only alternative is separate logic to predict the length, which is prone to bugs. So instead, the code writes a dummy header first, then overwrites it with the actual header after the payload is serialized.

static void serializeFrame(OByteStream &buffer,
                           const H2Frame &frame,
                           int32_t streamId)
{
    // write dummy frame header
    size_t baseOffset = buffer.size();
    buffer.writeU24BE(0);  // Placeholder for length
    buffer.writeU8(0);     // Placeholder for type
    buffer.writeU8(0);     // Placeholder for flags
    buffer.writeU32BE(streamId);

    // Write the frame payload
    ...
    if (type == FrameType::WindowUpdate)
        ok = frame.windowUpdateFrame.serialize(buffer, flags);
    ...

    // Now with the length of the serialized payload, patch the header
    auto length = buffer.buffer.readableBytes() - 9 - baseOffset;
    buffer.overwriteU24BE(baseOffset + 0, (int)length);
    buffer.overwriteU8(baseOffset + 3, type);
    buffer.overwriteU8(baseOffset + 4, flags);
}
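The same placeholder-then-patch idea can be shown without trantor. In this self-contained sketch (hypothetical names, std::vector as the buffer), a callback appends the payload and sets the flags, and then the header is patched. Unlike the excerpt above, it also rolls back a frame whose serializer failed. It also rejects payloads larger than an assumed maximum frame size of 16384 bytes, the default SETTINGS_MAX_FRAME_SIZE.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

void putU24BE(std::vector<uint8_t> &buf, std::size_t at, uint32_t v)
{
    buf[at] = static_cast<uint8_t>(v >> 16);
    buf[at + 1] = static_cast<uint8_t>(v >> 8);
    buf[at + 2] = static_cast<uint8_t>(v);
}

void putU32BE(std::vector<uint8_t> &buf, std::size_t at, uint32_t v)
{
    for (int i = 0; i < 4; ++i)
        buf[at + i] = static_cast<uint8_t>(v >> (24 - 8 * i));
}

// Append one frame: reserve a 9-byte header, let `writePayload(buf, flags)`
// append the payload, then patch length, type and flags in place.
template <typename PayloadWriter>
bool appendFrame(std::vector<uint8_t> &buf, uint8_t type, uint32_t streamId,
                 PayloadWriter writePayload, std::size_t maxFrameSize = 16384)
{
    const std::size_t base = buf.size();
    buf.resize(base + 9, 0);                          // placeholder header
    putU32BE(buf, base + 5, streamId & 0x7fffffffu);  // reserved bit stays 0

    uint8_t flags = 0;
    const bool ok = writePayload(buf, flags);
    const std::size_t length = buf.size() - base - 9;
    if (!ok || length > maxFrameSize)
    {
        buf.resize(base); // roll back the half-written frame
        return false;
    }
    putU24BE(buf, base, static_cast<uint32_t>(length));
    buf[base + 3] = type;
    buf[base + 4] = flags;
    return true;
}
```

Appending a WINDOW_UPDATE with increment 0x1000 on stream 1 produces 00 00 04 08 00 00 00 00 01 followed by 00 00 10 00.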

Sending requests

Sending requests in HTTP/2 is much more complicated than in HTTP/1.x for the reasons mentioned above, and flow control makes it even more so. The client must keep track of the flow-control windows and resume sending when a window opens. What I ended up doing is basically:

a) Send the HEADERS and CONTINUATION frames.

b) If a body exists, send DATA frames until running out of either the stream or the connection window.

c) Upon receiving a WINDOW_UPDATE for a stream, check the connection window. If it's open, resume sending DATA frames.

d) Upon receiving a WINDOW_UPDATE for the connection, go round robin over the streams and check whether they are blocked. If so, resume sending DATA frames.

That's the simplest yet complete scheme I can come up with.
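Step b) can be sketched as a pure function. The hypothetical helper below carves the remaining body into DATA frame sizes. Each frame is limited by the stream window, the connection window and the maximum frame size, and both windows are debited as it goes. Steps c) and d) then simply call it again after increasing the corresponding window.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Split as much of the remaining body as the windows allow into DATA frame
// sizes, debiting the stream and connection windows along the way.
std::vector<std::size_t> planDataFrames(std::size_t &remaining, std::size_t &streamWindow,
                                        std::size_t &connWindow, std::size_t maxFrameSize)
{
    std::vector<std::size_t> frames;
    while (remaining > 0)
    {
        const std::size_t n = std::min({remaining, streamWindow, connWindow, maxFrameSize});
        if (n == 0)
            break; // blocked: wait for a WINDOW_UPDATE on the stream or connection
        frames.push_back(n);
        remaining -= n;
        streamWindow -= n;
        connWindow -= n;
    }
    return frames;
}
```

Take a 100000-byte body, both windows at the default 65535 and 16384-byte frames. The helper plans 16384, 16384, 16384 and 16383 bytes and leaves 34465 bytes blocked. A stream-level WINDOW_UPDATE alone then produces no frames, since the connection window is still empty.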

Another complication is that Drogon doesn't have just one type of request. There are two: one for normal requests with data in memory, and another for sending files. The latter won't give you a pointer when .body() is called. Instead, it stores a list of files, and my client must read and multipart-encode them. A special code path is needed to handle this. There's also a missed optimization here. Currently, both the HTTP/1.x and HTTP/2 clients encode the entire file into memory before sending. This is neither ideal nor necessary in HTTP/2. A better approach, though more complicated, is to read and encode the file only in chunks as the sending window allows. This would save a lot of memory when uploading large files.

Yikes!

// Special handling for multipart because different underlying code
auto mPtr = dynamic_cast<HttpFileUploadRequest *>(stream.request.get());
if (mPtr)
{
    // TODO: Don't put everything in memory. This causes a lot of memory
    // when uploading large files. However, we are not doing better in 1.x
    // client either. So, meh.
    if (stream.multipartData.readableBytes() == 0)
        mPtr->renderMultipartFormData(stream.multipartData);
    auto &content = stream.multipartData;
    // CANNOT be empty. At least we are sending the boundary
    assert(content.readableBytes() > 0);
    auto [amount, done] =
        sendBodyForStream(stream, content.peek(), content.readableBytes());
    if (done)
        // force release memory
        stream.multipartData = trantor::MsgBuffer();
    return {offset + amount, done};
}

auto base = stream.request->body().data();
auto [amount, done] = sendBodyForStream(stream, base + offset, size - offset);
return {offset + amount, done};
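The chunked approach described earlier could start from something like the helper below: read only as many bytes as the current window (and frame size) allows. This is a hypothetical sketch. It ignores the multipart boundaries and part headers that a real upload would have to interleave.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <istream>
#include <sstream>
#include <string>

// Read at most min(budget, maxChunk) bytes from a file-like stream, so only
// what the current flow-control window allows is held in memory.
std::string readChunk(std::istream &in, std::size_t budget, std::size_t maxChunk = 16384)
{
    std::string chunk(std::min(budget, maxChunk), '\0');
    in.read(chunk.data(), static_cast<std::streamsize>(chunk.size()));
    chunk.resize(static_cast<std::size_t>(in.gcount())); // shorter at end of file
    return chunk;
}
```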

Error handling

Another aspect of the client is error handling. All errors that desync the client and server must be treated as connection errors. No further frames should be processed, and the connection must be aborted (optionally after completing existing streams). But there are also errors that only affect a single stream. It turns out most kinds of errors are connection errors. Examples are a frame with an invalid size, too many SETTINGS frames, and out-of-order HEADERS/CONTINUATION frames. The only stream errors I can think of are the peer sending a RST_STREAM frame and the total size of the DATA frames not matching the Content-Length header (if present).

Internally, this is two APIs, killConnection and responseErrored, named after what they actually do. They were later renamed to connectionErrored and streamErrored to be more consistent with the RFC. The former sends a GOAWAY frame with a debug message, closes the connection and aborts all streams. The latter simply makes the stream error out.
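The Content-Length stream error can be sketched as a small per-stream counter (hypothetical names). The byte count should be the DATA payload without padding. Note that RFC 9113 Section 8.1.1 exempts responses that have no content by definition, such as responses to HEAD. Those may carry a non-zero content-length with no DATA, so a real client would skip the check for them.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

enum class StreamVerdict { Continue, Complete, StreamError };

// Track DATA bytes against an optional Content-Length. A mismatch makes the
// message malformed, which RFC 9113 (Section 8.1.1) treats as a stream error.
class ContentLengthCheck
{
  public:
    explicit ContentLengthCheck(std::optional<uint64_t> declared) : declared_(declared) {}

    StreamVerdict onData(uint64_t payloadBytes, bool endStream)
    {
        received_ += payloadBytes;
        if (declared_ && received_ > *declared_)
            return StreamVerdict::StreamError; // already more than declared
        if (!endStream)
            return StreamVerdict::Continue;
        if (declared_ && received_ != *declared_)
            return StreamVerdict::StreamError; // stream ended short
        return StreamVerdict::Complete;
    }

  private:
    std::optional<uint64_t> declared_;
    uint64_t received_ = 0;
};
```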

Stream ID exhaustion

Another very rare edge case is stream ID exhaustion. As stream IDs must be monotonically increasing, the 31-bit integer can eventually overflow. The RFC requires the client to open a new connection when that happens. Managing that correctly is very hard, as streams may still be in flight when the IDs run out. The "perfect" solution is to buffer new requests and hold them until the existing streams are done and the client has reconnected. With all the possible edge cases, that's too complicated, so I did the next best thing. Once the stream ID gets to around 2^31 - 8000, the client starts looking for a window to reconnect (a moment when all streams are done). It reconnects as soon as it finds one. If the client can't find a window, the entire connection and all in-flight streams are aborted when the IDs eventually overflow.
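The policy can be expressed as a small decision function. This is a hypothetical sketch of the scheme described above, not the code in the PR. Above the threshold, the client keeps using IDs while streams are in flight and reconnects as soon as it is idle. Once the IDs are exhausted, it can only reconnect if idle; otherwise it aborts.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

constexpr uint32_t kMaxStreamId = 0x7fffffffu;              // 2^31 - 1
constexpr uint32_t kReconnectThreshold = (1u << 31) - 8000; // "around 2^31 - 8000"

enum class IdAction { UseId, ReconnectNow, Abort };

// Decide what to do before opening a stream with `nextStreamId`.
IdAction decide(uint32_t nextStreamId, std::size_t inFlightStreams)
{
    if (nextStreamId > kMaxStreamId) // IDs exhausted
        return inFlightStreams == 0 ? IdAction::ReconnectNow : IdAction::Abort;
    if (nextStreamId >= kReconnectThreshold && inFlightStreams == 0)
        return IdAction::ReconnectNow; // found an idle window near the end
    return IdAction::UseId;
}
```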

Breaking API changes

Drogon allows the user to set the HTTP version per request. This used to work because mixing HTTP/1.0 and 1.1 is fine, but that doesn't hold for HTTP/2. After talking to the other Drogon developers, we decided to break the API and set the version during client construction.

static HttpClientPtr newHttpClient(
    const std::string &hostString,
    trantor::EventLoop *loop = nullptr,
    bool useOldTLS = false,
    bool validateCert = true,
    std::optional<Version> targetVersion = std::nullopt);

// Usage
auto client = HttpClient::newHttpClient("https://clehaxze.tw", loop, false, true, Version::kHttp2);

Note that this is a hint rather than a demand. The client will still try to negotiate HTTP/2 if it is using TLS, but if the server doesn't support HTTP/2, it'll fall back to HTTP/1.1. If you wish to use HTTP/1.0, you can set the version to Version::kHttp10.

Optimizations not yet implemented

Some inefficiencies still exist in the client, but I believe they are minor. First is the batched send I mentioned above. Currently it's coded to send the buffered data once onRecvMessage or sendRequestInLoop ends. However, these calls can and will be nested when max concurrency is reached. Thus, the client calls send() multiple times, each time sending partial data. This can be optimized by having a variable track how deeply nested we are and only sending when the depth is 0.
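The depth-tracking fix could look like the RAII guard below. This is a hypothetical sketch rather than Drogon code. Each entry point opens a Scope, and writes only append to a buffer. The buffer goes to the sink (standing in for the real send()) only when the outermost Scope is destroyed.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>

// Buffer outgoing bytes and flush once, when the outermost of possibly
// nested Scopes ends (e.g. onRecvMessage calling sendRequestInLoop).
class BatchedSender
{
  public:
    explicit BatchedSender(std::function<void(const std::string &)> sink)
        : sink_(std::move(sink)) {}

    void write(const std::string &bytes) { pending_ += bytes; }

    class Scope
    {
      public:
        explicit Scope(BatchedSender &s) : s_(s) { ++s_.depth_; }
        ~Scope()
        {
            if (--s_.depth_ == 0 && !s_.pending_.empty())
            {
                s_.sink_(s_.pending_); // a single send() for the whole batch
                s_.pending_.clear();
            }
        }
        Scope(const Scope &) = delete;
        Scope &operator=(const Scope &) = delete;

      private:
        BatchedSender &s_;
    };

  private:
    std::function<void(const std::string &)> sink_;
    std::string pending_;
    int depth_ = 0;
};
```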

Another is the round-robin scheme. For now, I store all streams that need to be resumed in a std::unordered_map so I can look them up by stream ID. This forces me to always start from map.begin() when the connection window reopens while streams are still blocked. As a result, one large body can consume the entire connection window, and the client won't be able to send other streams until that body is done. That should be rare, though.
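Here is one way to fix the starting-point problem, sketched with hypothetical names. The blocked stream IDs go in an ordered std::set, and each round begins just after the stream that started the previous round, wrapping around at the end. Lookups stay logarithmic, and no stream is permanently first in line.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <vector>

// Blocked stream IDs in an ordered set; each round starts just after the
// stream that started the previous round, wrapping around at the end.
class BlockedStreams
{
  public:
    void block(uint32_t id) { blocked_.insert(id); }
    void unblock(uint32_t id) { blocked_.erase(id); }

    std::vector<uint32_t> resumeOrder()
    {
        std::vector<uint32_t> order;
        auto start = blocked_.upper_bound(lastStart_);
        order.insert(order.end(), start, blocked_.end());
        order.insert(order.end(), blocked_.begin(), start);
        if (!order.empty())
            lastStart_ = order.front();
        return order;
    }

  private:
    std::set<uint32_t> blocked_;
    uint32_t lastStart_ = 0; // stream IDs start at 1, so 0 means "from the beginning"
};
```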

Finally, there's the memory consumption issue when uploading large files, which I mentioned above. But I don't see people using file uploads much. Anyway, we aren't doing better in the HTTP/1.x client either. So, meh.

Tools for Debugging

Here I quickly note 2 tools that were immeasurably helpful during development. First is the Swiss army knife: Wireshark. I think every network/protocol engineer knows this tool. It's a packet analyzer that can decode a lot of protocols. Even when the protocol is wrapped in TLS, you can supply it with the TLS session keys and it'll decrypt the traffic for you. It is insanely useful when I need to check that my frame formats are correct.

Next is nghttpd. With the -v flag, it prints every frame it receives and sends, which is very useful for seeing what my client is doing. When I do screw up, it puts an error message in the GOAWAY frame. Nginx can fulfill the same purpose and is what I used first. However, it does not include a debug message, which left me basically guessing what was wrong.

❯ nghttpd 8844 server.key server.crt -v --echo-upload
IPv4: listen 0.0.0.0:8844
IPv6: listen :::8844
[ALPN] client offers:
 * h2
 * http/1.1
SSL/TLS handshake completed
The negotiated protocol: h2
[id=1] [ 1.855] send SETTINGS frame <length=6, flags=0x00, stream_id=0>
          (niv=1)
          [SETTINGS_MAX_CONCURRENT_STREAMS(0x03):100]
[id=1] [ 1.882] recv SETTINGS frame <length=6, flags=0x00, stream_id=0>
          (niv=1)
          [SETTINGS_ENABLE_PUSH(0x02):0]
[id=1] [ 1.882] recv (stream_id=1) :method: GET
[id=1] [ 1.882] recv (stream_id=1, sensitive) :path: /s
[id=1] [ 1.882] recv (stream_id=1) :scheme: http
[id=1] [ 1.882] recv (stream_id=1) :authority: clehaxze.tw:8844
[id=1] [ 1.882] recv (stream_id=1) user-agent: DrogonClient
[id=1] [ 1.882] recv HEADERS frame <length=39, flags=0x05, stream_id=1>
          ; END_STREAM | END_HEADERS
          (padlen=0)
          ; Open new stream
[id=1] [ 1.882] send SETTINGS frame <length=0, flags=0x01, stream_id=0>
          ; ACK
          (niv=0)
[id=1] [ 1.882] send HEADERS frame <length=69, flags=0x04, stream_id=1>
          ; END_HEADERS
          (padlen=0)
          ; First response header
          :status: 404
          server: nghttpd nghttp2/1.58.0
          date: Sat, 02 Dec 2023 05:27:04 GMT
          content-type: text/html; charset=UTF-8
          content-length: 147
[id=1] [ 1.882] send DATA frame <length=147, flags=0x01, stream_id=1>
          ; END_STREAM
[id=1] [ 1.882] stream_id=1 closed

Deployment and testing

As of writing this post, the HTTP/2 client has been running in production on drogon.org, querying data from GitHub. I've run some tests against it and found no major issues. Other Drogon developers will review it before it is merged, and I'm reading the RFC again and again to make sure I didn't miss anything.

- Runs on drogon.org

- ASan and UBSan clean

- Runs well on OpenBSD's harsh allocator

- Nghttpd didn't complain

- Large downloads from Nginx work

- Tested against wild websites and CDNs

- Works on big endian machines (s390x)

Work in progress testing:

- Check against the RFC

- Stress test

- Fuzzing

I'm hopeful I can get this merged in a little more than a month, probably after Christmas.

Martin Chang

Systems software, HPC, GPGPU and AI. I mostly write stupid C++ code. Sometimes does AI research. Chronic VRChat addict

I run TLGS, a major search engine on Gemini. Used by Buran by default.

- marty1885 \at protonmail.com

- Matrix: @clehaxze:matrix.clehaxze.tw

- Jami: a72b62ac04a958ca57739247aa1ed4fe0d11d2df