Tenstorrent first thoughts

I've looked into alternative AI accelerators to continue my saga of running GGML on lower-power hardware. The most promising - and the only one that ever replied to my emails - was Tenstorrent. This post is me thinking through whether buying their hardware for development is a good investment.

The Tenstorrent architecture

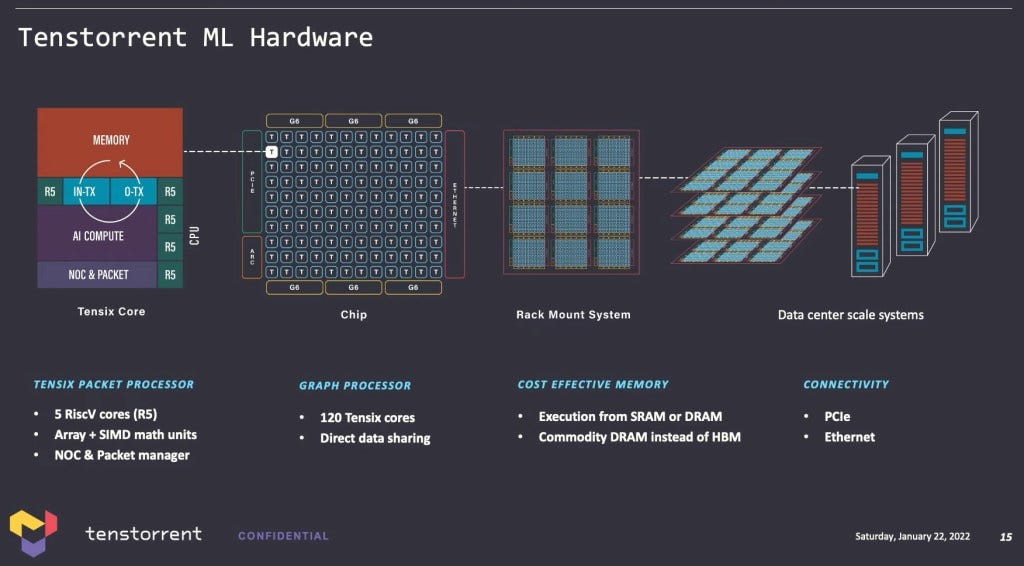

Unlike GPUs, where a single program runs across all the cores (I mean the actual shader engines, not the SIMT threads), Tenstorrent has a more complex architecture. It consists of a 12x12 grid of tiles. Each tile is called a Tensix core, which contains 5 RISC-V RV32IMC cores: 2 connected to the NoC for data movement and 3 for actual compute. Each Tensix core also contains shared SRAM and a tensor engine to carry out the heavy lifting. The diagram above is from their talks, used under fair use.

I can't stress enough how different this is compared to most processors. The Tensix cores do not have direct access to DRAM. Data has to be moved via explicit DMA operations. There is no cache whatsoever. Need to cache stuff? Do it in software. Each Tensix core too slow to finish a complex job? String them together like an FPGA. It might optimize the heck out of memory access, but I see it as very difficult to program for.

Supposedly, the architecture is designed to remove two of the bottlenecks of GPUs - unnecessary data movement and being unable to saturate compute. In most vision models, it is difficult for GPUs to fuse complex layers together. Something like U-Net requires adding results from convolutional layers that are far apart from each other. With GPUs, the output must be written to DRAM, then read back in. And as earlier layers are computationally cheaper, that's wasted bandwidth. Tenstorrent's architecture is designed to avoid this. It runs one convolutional layer on a set of Tensix cores, then the next layer on another set. The data is moved between the cores and seldom or never goes to DRAM.
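A rough way to see the difference is to count the activation bytes that touch DRAM in each style. The sketch below is only a toy model: the function name and the tensor sizes are made up for illustration, it ignores weights and skip connections, and it assumes a fully pipelined chain only reads the input and writes the final output.

```python
def dram_traffic_bytes(activation_bytes, fused):
    """Estimate activation bytes moved to/from DRAM for a chain of layers.

    activation_bytes[0] is the network input, activation_bytes[-1] the final
    output, and the entries in between are intermediate tensors.
    """
    if fused:
        # Pipelined across cores: intermediates stay in on-chip SRAM,
        # so only the input is read and the final output is written.
        return activation_bytes[0] + activation_bytes[-1]
    # Layer by layer: every layer reads its input from DRAM
    # and writes its output back to DRAM.
    reads = sum(activation_bytes[:-1])
    writes = sum(activation_bytes[1:])
    return reads + writes


# Hypothetical tensor sizes in bytes, not measured from any real model.
sizes = [4_000_000, 8_000_000, 8_000_000, 2_000_000]

unfused = dram_traffic_bytes(sizes, fused=False)
fused = dram_traffic_bytes(sizes, fused=True)
print(f"layer by layer: {unfused / 1e6:.0f} MB, pipelined: {fused / 1e6:.0f} MB")
```

With these made-up sizes the layer-by-layer version moves several times more data than the pipelined one, which is the saving the architecture is going after.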

My thoughts

How Tenstorrent tries to solve the problem of data movement is interesting. I can see this working reasonably well for popular vision and image generation models, like YOLO and Stable Diffusion. Being able to run SDXL at a decent speed with just 75W would be really nice - cutting the power consumption. But I have reservations about how well it handles large language models. The main problem with LLMs is that even just streaming weights from DRAM into the processor is a bottleneck. Tenstorrent's architecture should enable high-performance GEMV at all sizes, since the unused compute can be repurposed for other stuff, and GPUs tend to suffer when the vector is too small. But that's not the problem here. Even if it were, and pipelining multiple GEMVs were implemented, overall performance is still limited by memory bandwidth. It's not like we magically gained bandwidth or significantly reduced waste.
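The bandwidth limit is easy to put a rough number on. Generating one token means reading every weight once, so DRAM bandwidth divided by model size gives a ceiling on tokens per second. This is a back-of-the-envelope sketch only: the function name is made up, and the model size and bandwidth are placeholder figures, not the specs of any Tenstorrent card.

```python
def max_tokens_per_second(weight_bytes, dram_bandwidth_bytes_per_s):
    """Upper bound on single-stream decoding speed.

    Assumes every weight is streamed from DRAM exactly once per token and
    that compute is free, so this is a ceiling and not a prediction.
    """
    return dram_bandwidth_bytes_per_s / weight_bytes


# Hypothetical numbers for illustration only.
params = 7_000_000_000        # a 7B-parameter model
bytes_per_param = 0.5         # 4-bit quantization
bandwidth = 100e9             # 100 GB/s of DRAM bandwidth (placeholder)

ceiling = max_tokens_per_second(params * bytes_per_param, bandwidth)
print(f"at most {ceiling:.1f} tokens/s")
```

No amount of clever scheduling on the compute side raises that ceiling. Only more bandwidth or fewer bytes per token does.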

Tenstorrent's solution is to link up many Tenstorrent chips via 100G Ethernet and distribute the compute across them. That feature is not available on the current Grayskull cards, only on the next-generation Wormhole processor. The idea is that if an entire network can be mapped onto a cluster of chips, there'll be no need to load weights repeatedly, or at least much less often. Then the effective memory bandwidth is the sum of the SRAM bandwidth of all the chips. Pipelining can be used to further improve the throughput. Good concept, but it doesn't sit well with me. I don't have the budget to buy myself a cluster. I have to work with a single card. So that solution is a non-starter for me.
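To get a feel for the scale involved, here is a rough estimate of how many chips it takes to keep a model entirely in SRAM. Everything in it is an assumption: the function name, the per-chip SRAM size, and the fraction of SRAM left over for weights are placeholders, not figures from Tenstorrent.

```python
import math


def chips_to_hold_weights(weight_bytes, sram_bytes_per_chip, usable_fraction=0.5):
    """Number of chips needed so that all weights fit in on-chip SRAM.

    usable_fraction is a guess at how much SRAM is left for weights after
    activations, buffers and program code take their share.
    """
    usable = sram_bytes_per_chip * usable_fraction
    return math.ceil(weight_bytes / usable)


# Hypothetical numbers for illustration only.
weight_bytes = 3_500_000_000      # 7B parameters at 4 bits each
sram_per_chip = 100_000_000       # 100 MB of SRAM per chip (placeholder)

chips = chips_to_hold_weights(weight_bytes, sram_per_chip)
print(f"{chips} chips needed")
```

Even with generous placeholder numbers the answer comes out in the dozens of chips, which is well outside a hobbyist budget.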

Their high-level toolchain looks quite good, however. TT-BUDA can load pre-trained models from PyTorch or ONNX and does all the complicated stuff for you. I don't think getting models to run on the hardware will be a problem. But I can't meaningfully contribute that way. Nor do I have any business serving models. Even if stuff works really well, building a business around it is hard, as efficiency can be dealt with by throwing hardware and VC money at it.

I have mixed feelings right now. It's a cool concept, I see the potential, and I know how I can contribute to the ecosystem. On the other hand, I don't have the capital to truly unlock the potential of the hardware. Will I buy one for development? I don't know. I have other projects that I want to work on too. Maybe a balcony solar system that reduces my power bill.

Responsible spending

I've been thinking about this for a while. I ought to reduce my consumption, because it's good for the environment and my wallet. This, however, looks more like an investment than pure consumption to me. I do get the high of buying new hardware. But ultimately I will contribute something back to the ecosystem. At minimum, a few posts here on my blog about my experiments. And if I'm lucky, I might be able to find practical applications that'll replace the need for power-hungry GPUs, which inches us closer to a more sustainable future. I feel like I'm pulled in two directions. One side of me wants to reduce my consumption. The other side knows that there's a chance that I can make a difference with this purchase. Just like when I bought my RK3588 dev board and built some cool stuff with it. Any company or startup adopting my solution at some scale will quickly save more power than I'll have consumed by purchasing the hardware.

Don't know. I'll sleep on it. Maybe I'll have a clearer mind tomorrow.