Getting the 1st token generated on Tenstorrent with GGML

I've been working on integrating Tenstorrent cards into GGML on and off since early this year. Over the weekend I was at MOPCON 2024 in Kaohsiung, more to meet people than to attend the actual talks. Not knowing what else to do late at night, after a meetup with the Open Culture Foundation (they are awesome guys!), I was downing coffee in the hotel lobby and hacking away. Error messages come and go. They change, but that happens all the time. Nothing stood out until a stroke of skepticism hit me while looking at the wall of text: "llama.cpp prints the prompt, yes, but what's the thing next to it?" Wait a second. OMG, llama.cpp generated a token. I AM GOOOOOD!

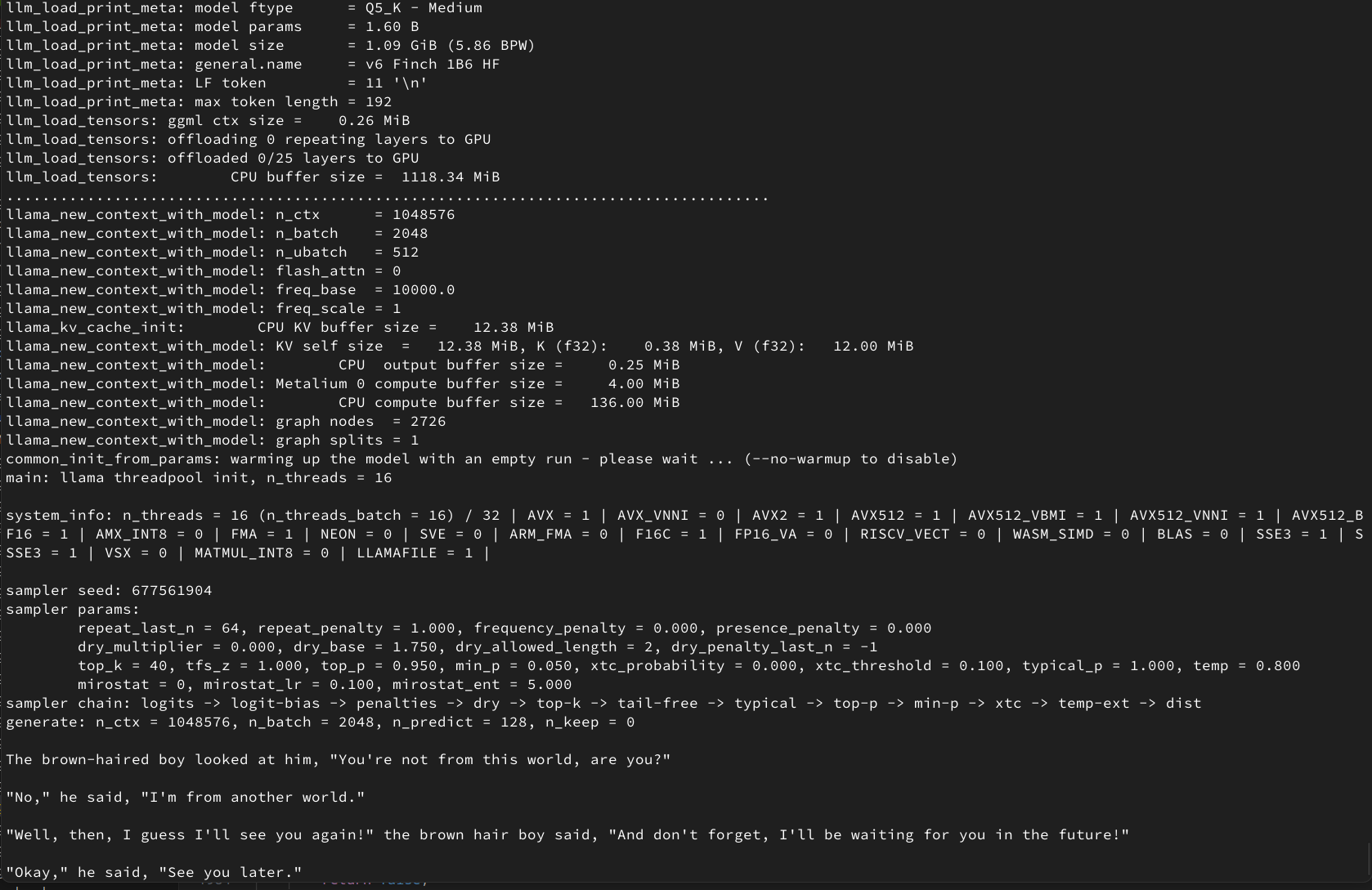

See the image below.

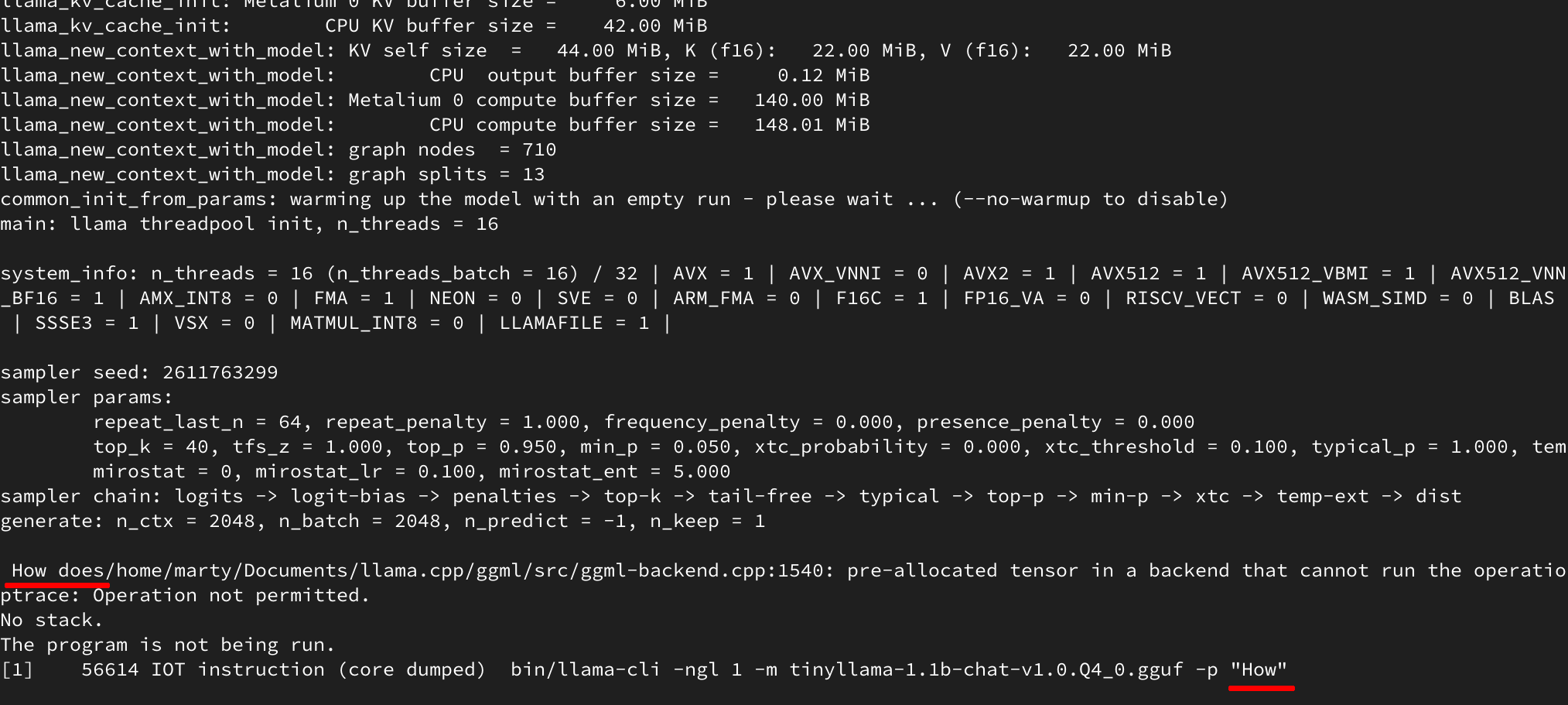

I got the first token generated on llama.cpp, running a single layer of Tiny LLaMA 1.1B on a Tenstorrent card (and the rest on the CPU). This is such a huge milestone. The basics are there and behaving well enough. Then it crashed, citing a compute graph assertion failure. That's something I'll fix in the coming weeks, but it's not important right now. I want to write up the journey so far, and what I might do next.

It is definitely not a one-man effort. I've bugged Tenstorrent many, many times on their issue tracker and Discord. They have provided phenomenal engineering support. I owe them, and the entire community, my deepest gratitude. Still, let my ego have this moment.

GGML integration and blurb on computation assumptions

Tenstorrent essentially provides 2 SDKs for their cards: a) tt-Metalium (often referred to as Metal; I avoid that name because it conflicts with Apple's Metal API), a low-level SDK that provides an OpenCL-like environment for programming kernels on the card, and b) TTNN, a tensor and tensor operator library built upon tt-Metalium, like a combination of ATen and cuDNN. Initially I looked at integrating Metalium directly, thinking codegen can't be that hard... It took me 3 days to give up on that. The programming model of Metalium is so different from OpenCL/CUDA that porting their kernels won't work, while writing a compiler that can generate good Metalium code would be a PhD thesis. So I decided to go with TTNN, and to completely disregard any performance implications until stuff runs. Getting it to spit out text is the only goal.

For sharp-eyed readers looking at the pictures above: the backend is still named Metalium. I never got the urge to change it, and I want the flexibility to write my own kernels rather than be limited to TTNN only.

If you have followed my blog for a while, you know I started my journey with GGML by integrating RK3588 NPU support. GGML back then looked very different from what it is now. It was a lot simpler, plus the RK3588 NPU has direct access to the CPU's memory. Hacking the RK3588 NPU into GGML was really easy mode - hijack the matrix multiplication function, copy some data and invoke the NPU when appropriate. Today GGML is a complex beast, abstractions and all. Tenstorrent cards also don't have direct access to the CPU's memory, nor do they use a data layout that reduces to row major in special cases. The end result is that the bare minimum to get GGML to recognize the Tenstorrent card, run something and not crash is more than 200 lines of code.

Trying to squeeze the entire process of building a new backend into a single blog post would be too much. But here's a high-level overview of the steps involved:

- Add a `ggml_backend_XXX_reg` function to register the backend with GGML. Then modify GGML to register the backend. Modify the build system to compile the backend.

- For each device supported by the backend, return a device API object.

- Implement the tensor input/output functions, to get the data in and out of the device.

- Implement the `supports_op` function in the device API object. This function is called by GGML to check if the device supports a given operation and to schedule around it if it doesn't.

- Implement the `graph_compute` function in the backend. This performs the actual computation. Attach the backend-specific data to the tensor object.

- Try running the existing unit tests and make your own tests to see where your backend breaks.

- Repeat the above steps, adding more operations and devices as needed.
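To make the role of `supports_op` a bit more concrete, here is a toy model in Python of how a scheduler can route operations based on that callback. It is only a sketch of the idea and not GGML's actual scheduler: the `Op` class, the `DEVICE_OPS` set and `split_graph` are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Op:
    name: str      # operation kind, e.g. "MUL_MAT"
    shape: tuple   # output shape (unused here, kept for realism)


# Hypothetical: the operations this toy accelerator backend accepts.
DEVICE_OPS = {"ADD", "MUL", "MUL_MAT"}


def supports_op(op: Op) -> bool:
    """Toy stand-in for a backend's supports_op callback."""
    return op.name in DEVICE_OPS


def split_graph(graph):
    """Group consecutive ops by the backend that will run them.

    Anything the device rejects falls back to the CPU, which is the
    'schedule around it' behaviour described above.
    """
    splits = []
    for op in graph:
        backend = "device" if supports_op(op) else "cpu"
        if splits and splits[-1][0] == backend:
            splits[-1][1].append(op.name)   # extend the current split
        else:
            splits.append((backend, [op.name]))  # start a new split
    return splits


graph = [
    Op("GET_ROWS", (1, 2048)),
    Op("MUL_MAT", (1, 2048)),
    Op("ADD", (1, 2048)),
    Op("ROPE", (1, 2048)),
    Op("MUL_MAT", (1, 2048)),
]

for backend, ops in split_graph(graph):
    print(backend, ops)
```

Every boundary between a `cpu` split and a `device` split is a point where tensors have to cross between backends, which is why rejected operations cost more than just the time spent on the CPU.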

GGML was designed to run on CPUs and was later extended to GPUs. These are still conventional processors to some degree. AFAIK at no point has GGML tried to support ASICs (there's the CANN backend now, but that uses row-major too). Thus, some assumptions GGML makes are simply not true on Tenstorrent. Nor are they obvious at first glance. Some off the top of my head:

- Tensors can be addressed by the CPU

- All tensors use row-major layout

- Strides are a reasonable way to perform lazy view operations (extension of above)

- All data is byte addressable

- There is a pointer on the device that can be used to access the data

You would think those are reasonable assumptions. Just no. None of the above holds on Tenstorrent cards. The use of a non-row-major layout is the main troublemaker, and it has many, many implications. Besides making the classical method of implementing views via strides impossible, it also makes interacting with other backends from the Metalium one difficult. Everyone assumes everyone else speaks row major, so an adapting interface has to be made to create that illusion. IIRC AMD's XDNA backend has to deal with the same problem - they have wrapper libraries to make it sane. They haven't updated their public codebase in a while, so I'm not sure if they are still using the same approach.
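For anyone who has not met the row-major and stride assumptions before, the following pure-Python sketch shows what they buy you on a conventional processor. `StridedView` is a hypothetical class made up for this illustration, with strides counted in elements rather than bytes; it is not GGML's or PyTorch's implementation.

```python
class StridedView:
    """A view into a flat row-major buffer. Creating one copies no data."""

    def __init__(self, buf, shape, strides, offset=0):
        self.buf = buf          # shared flat storage
        self.shape = shape      # logical shape of the view
        self.strides = strides  # elements to skip per step along each axis
        self.offset = offset    # index of the view's first element in buf

    def __getitem__(self, idx):
        # Address of an element = offset + sum(index * stride).
        pos = self.offset + sum(i * s for i, s in zip(idx, self.strides))
        return self.buf[pos]

    def row(self, r):
        # Selecting a row only moves the offset and drops one axis.
        return StridedView(self.buf, self.shape[1:], self.strides[1:],
                           self.offset + r * self.strides[0])

    def transpose(self):
        # Transposing only swaps the shape and stride entries.
        return StridedView(self.buf, self.shape[::-1], self.strides[::-1],
                           self.offset)

    def tolist(self):
        # Materializing the view is the only place data is actually read out.
        if len(self.shape) == 1:
            return [self[(i,)] for i in range(self.shape[0])]
        return [self.row(r).tolist() for r in range(self.shape[0])]


buf = list(range(12))                  # a 3x4 matrix stored row-major
m = StridedView(buf, (3, 4), (4, 1))

print(m.row(1).tolist())               # [4, 5, 6, 7]
print(m.transpose().tolist())          # [[0, 4, 8], [1, 5, 9], [2, 6, 10], [3, 7, 11]]
```

Both `row` and `transpose` return new views over the same `buf`. That only works because every element's position is a linear function of its indices, which is exactly the property a tiled layout gives up.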

Let's take a look at one of the official example programs of TTNN.

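```python
import torch
import ttnn

# Open the first Tenstorrent device
device_id = 0
device = ttnn.open_device(device_id=device_id)

# Create a tensor on the host, then move it to the device as tiled bfloat16
torch_input_tensor = torch.rand(2, 4, dtype=torch.float32)
input_tensor = ttnn.from_torch(torch_input_tensor, dtype=ttnn.bfloat16, layout=ttnn.TILE_LAYOUT, device=device)

# Run the operation on the device and bring the result back to the host
output_tensor = ttnn.exp(input_tensor)
torch_output_tensor = ttnn.to_torch(output_tensor)

ttnn.close_device(device)
```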

It doesn't matter if it's Python or C++. TTNN looks similar in both cases. See that layout=ttnn.TILE_LAYOUT? That's the bane of my existence. A tile is a 32x32 matrix. A tiled tensor has its data cut up into these smaller matrices. This is done to make the hardware more efficient in several ways. First, by reducing the number of DRAM read requests, turning many small reads into a single, large, linear read. And second, by not needing a gigantic buffer to hold massive temporary data (I think; that's the experience I had designing NPUs in the past). Reducing DRAM read requests is also why scheduling on a GPU is such a pain and so performance critical. The problem just does not exist with tiled tensors; the data is already in the right place.

You can read more about Tenstorrent's tile layout in the following link:

Anyway, that broke GGML's assumptions, and extra care has to be taken when sending data across backend boundaries. I opted (for now) to keep all tensors tiled within the Metalium backend, as most TTNN operators demand tiled tensors. This may not be ideal in some cases, but it is good enough for now. TTNN used to really need all operations to be tile-aligned. A few months ago, you couldn't transpose a tensor of shape [12, 16], for example. Support for non-tile-aligned operations has been getting better by the day.
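As a rough illustration of what tiling does to the data, the sketch below pads a row-major matrix up to a multiple of the tile size and stores it tile by tile. It is a simplified model, assuming zero padding and row-major order inside each tile; `tilize` is a hypothetical helper written for this post, not a TTNN function, and the real on-device format may have finer structure inside a tile that is ignored here.

```python
TILE = 32  # tile edge length, as described above


def tilize(matrix, tile=TILE):
    """Return (flat_data, padded_shape) for a row-major 2D list.

    The matrix is zero-padded up to a multiple of `tile` in both
    dimensions, then written out one tile after another.
    """
    rows, cols = len(matrix), len(matrix[0])
    padded_rows = -(-rows // tile) * tile   # round up to a tile multiple
    padded_cols = -(-cols // tile) * tile
    out = []
    for tr in range(0, padded_rows, tile):          # tile row
        for tc in range(0, padded_cols, tile):      # tile column
            for r in range(tr, tr + tile):          # rows inside the tile
                for c in range(tc, tc + tile):      # columns inside the tile
                    inside = r < rows and c < cols
                    out.append(matrix[r][c] if inside else 0)
    return out, (padded_rows, padded_cols)


# A tiny 2x2 tile keeps the output readable.
small = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
flat, padded_shape = tilize(small, tile=2)
print(padded_shape)  # (4, 4)
print(flat)          # [1, 2, 4, 5, 3, 0, 6, 0, 7, 8, 0, 0, 9, 0, 0, 0]

# The [12, 16] example from above occupies one full 32x32 tile.
big, big_shape = tilize([[1.0] * 16 for _ in range(12)])
print(big_shape, len(big))  # (32, 32) 1024
```

In the small example the original first row `[1, 2, 3]` ends up at flat positions 0, 1 and 4, so no single stride describes it any more. The `[12, 16]` case also shows the cost of padding: 192 real values occupy 1024 slots in this model.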

Speaking of slicing: most libraries, including GGML and PyTorch, use strides to implement views into tensors. This is a very powerful feature, and cheap (until you have to realize the view). I don't have a good introduction to strides, so I hope you are already familiar with them. Since Tenstorrent has gone with tiled tensors, they have 0 stride support. The following code in PyTorch creates 2 tensors and adds a sub-view of the first tensor to a sub-view of the second. In total 3 allocations are made: one for the first tensor, one for the second and one for the result.

```python
import torch

a = torch.rand(4, 4)  # allocation 1
b = torch.rand(4, 4)  # allocation 2
c = a[1] + b[1]       # allocation 3
```

The same can't be said of TTNN. It has to do 5 allocations: one for each of the three tensors, and 2 more for the sub-views, which means creating 2 actual [4] vectors and copying the data over. This kind of operation is quite common in LLMs, so it is a performance hit. There are ways around this, but it's going to be like writing an optimizer for a compiler: finding where the fast path can be taken and where it won't have the same effect. Adding that to an already complex backend is going to be fun.

```python
import ttnn

# Pseudocode. TTNN doesn't have this exact API; it is just illustrative.
a = ttnn.rand(4, 4)  # allocation 1
b = ttnn.rand(4, 4)  # allocation 2
c = a[1] + b[1]      # allocation 5
#   ^      ^
#   |      allocation 3
#   +------allocation 4
```

To be fair, this kind of challenge is known, expected and a normal part of working with ASICs. So although I'm complaining, it's not Tenstorrent's fault. This kind of mismatch between software and hardware is also why model compilers exist. We still program in a high-level language, assuming row-major layout, and that gets compiled down to whatever the hardware can do. Yet AI compilers from every vendor have been notoriously bad. Rockchip's RKNN, horrible as it is, has been the best I've seen so far. The fact that most model compilers also target various GPUs doesn't help a bit. They have good reason to keep the embedded assumptions and conventions that don't hold on ASICs.

That brings up another question - which is the future? Inference engines or model compilers? I honestly don't know. Inference engines like GGML are great in that they are almost always bound to work. But they are also limited by their complexity and by their ability to push the hardware. Compilers, on the other hand, can be as complex as you want them to be. But unlike compilers that compile C code, model compilers are designed to target specific models instead of putting generality first. Maybe I'm asking too much, but I really wish to eat my cake and have it too. Something that pushes the hardware AND runs every model.

Maybe a hybrid is the approach. Apparently Rockchip took my RKNPU2 GGML hack, ran with it and wrote their own LLM compiler (I know, crazy). Worse, even though GGML is licensed under the MIT license, they never distributed the licensing information nor credited GGML or me. I can envision a hybrid approach making the Metalium backend work better: a graph scan phase to detect when shenanigans can work and when things have to be done the proper way. That's for sometime in the future.

What's next?

I don't expect my GGML backend to be the 1st working solution for running LLMs on Tenstorrent cards in production. Tenstorrent's own vLLM fork will most likely be it - they have much smarter people, vLLM has a more suitable design (for ASICs like Tenstorrent's) and they have a team working full time on the problem. It also doesn't help that my backend is written in a way that completely disregards performance. That's also not the point. The point is to get there eventually, and in open source, projects end up benefiting from each other. And I agree with Jim Keller on their approach to AI stacks, basically "remove the abstractions so the hardware can run faster". So why vLLM? GGML is the way to go.

Anyway, back to GGML. The next order of business is to debug the crash and put more operators onto the card. I dumped the operators my backend rejected, up until it crashed, into the following list. There are surprisingly few kinds of them. After a quick investigation, I found that more than half of the problem is that GGML expects more relaxed broadcasting rules than TTNN implements, which will need to be fixed in TTNN. ROPE is on me; I haven't understood the details of it yet. And VIEW is where the backend currently crashes.

ROPE 30

MUL_MAT 30

GET_ROWS 33

SOFT_MAX 10

VIEW 1
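To show how two broadcasting rules can differ in strictness, here is a small Python sketch comparing a NumPy-style rule with a divisibility-based one. The relaxed rule is modeled on my understanding of GGML's `ggml_can_repeat`; the strict rule is only a stand-in for a less permissive library and is an assumption, not a statement of what TTNN actually implements. Both function names are made up for this example.

```python
def can_broadcast_strict(small, big):
    """Strict rule: each dim of `small` equals the matching dim of `big`, or is 1."""
    return len(small) == len(big) and all(
        s == b or s == 1 for s, b in zip(small, big)
    )


def can_broadcast_relaxed(small, big):
    """Relaxed rule: each dim of `big` is a whole multiple of the matching dim of `small`."""
    return len(small) == len(big) and all(
        s > 0 and b % s == 0 for s, b in zip(small, big)
    )


cases = [
    ((1, 4), (8, 4)),   # classic row broadcast
    ((2, 4), (8, 4)),   # a 2-row block repeated 4 times
    ((3, 4), (8, 4)),   # 8 is not a multiple of 3
]

for small, big in cases:
    print(small, "->", big,
          "strict:", can_broadcast_strict(small, big),
          "relaxed:", can_broadcast_relaxed(small, big))
```

The second case is the interesting one: it passes the relaxed rule and fails the strict one. A backend that only implements the strict rule has to reject such an operation in `supports_op`, even though the operation kind itself is supported.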

I don't have any estimates on how long this will take. Nor timelines on supporting other LLMs. I'm sure things will come together as operator support matures. Performance optimizations would only come after I am able to get an entire LLM working.

Show me the code

As always, the source code is publicly available on my GitHub. It is still a work in progress, therefore I will not provide support for it. However, I'm happy to answer any questions and accept patches if you want to contribute. TTNN has been changing its APIs quite a bit, so you might need to patch stuff here and there to get it to work.

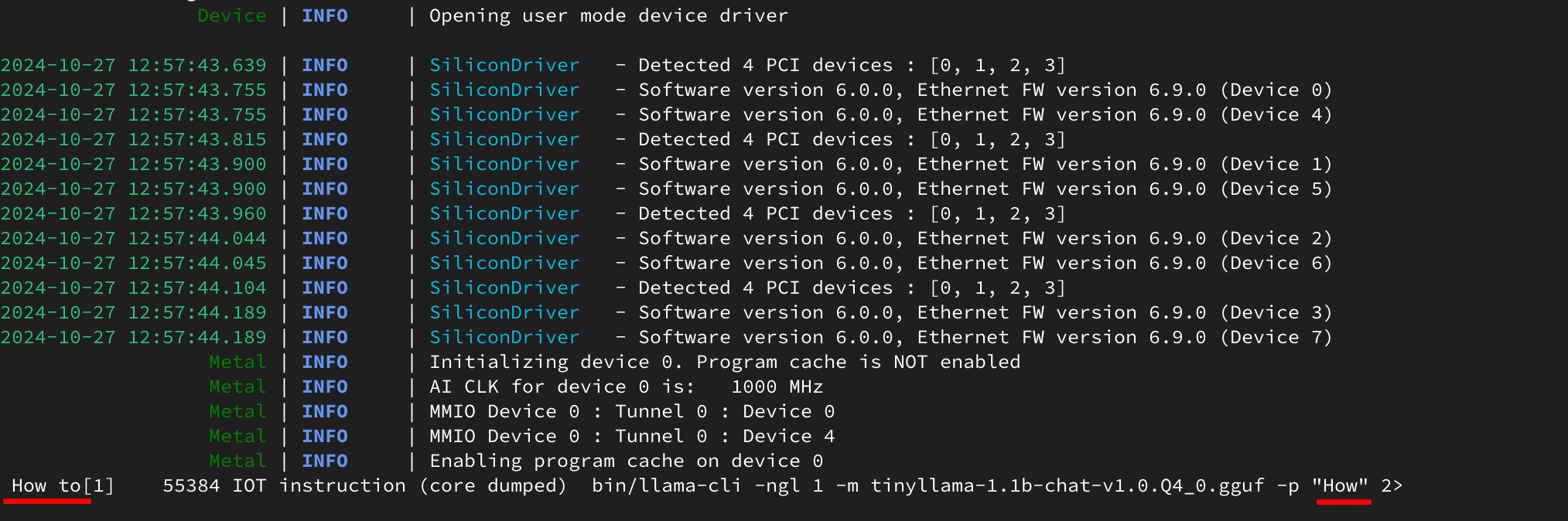

UPDATE: 2024-Oct-29 - Generating until End of Text!

I wasn't expecting such swift success. After a small pile of hacks, I am able to get Tiny LLaMA to generate continuously, up until EOT, and exit gracefully. Still, only 1 layer runs on the card, otherwise the output becomes incoherent, and it's more a decelerator than anything. But things are lining up nicely. Next step: get all layers onto the card.

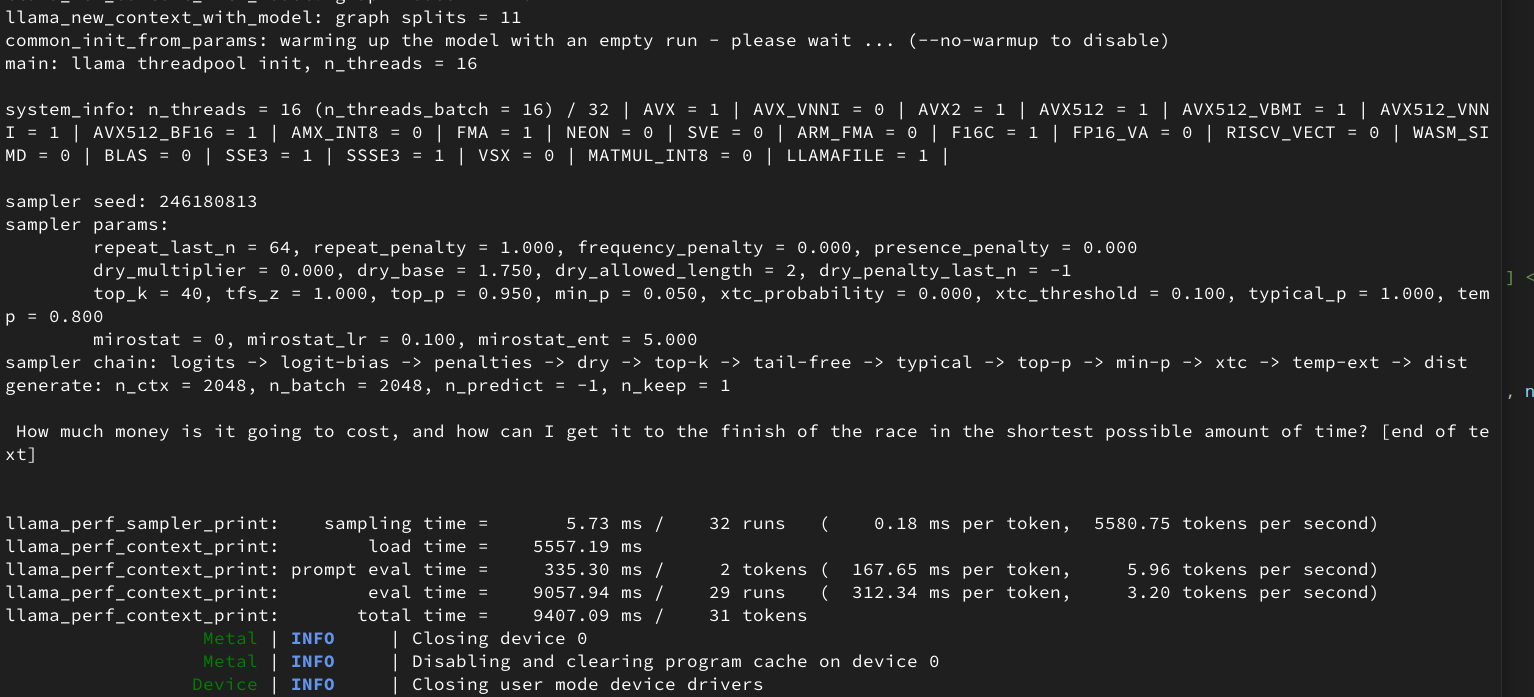

UPDATE: 2024-Oct-29 night - RWKV v6 Finch is running!

Wow, I wasn't expecting this. Wins are coming so quickly. RWKV v6 1.5B is running on the Tenstorrent card. Funny, I started out hacking BUDA to try and get RWKV v5 running. Now my GGML backend is able to run RWKV v6. Let's gooooooooo!