Full LLMs running on Tenstorrent + GGML

See my last post for context

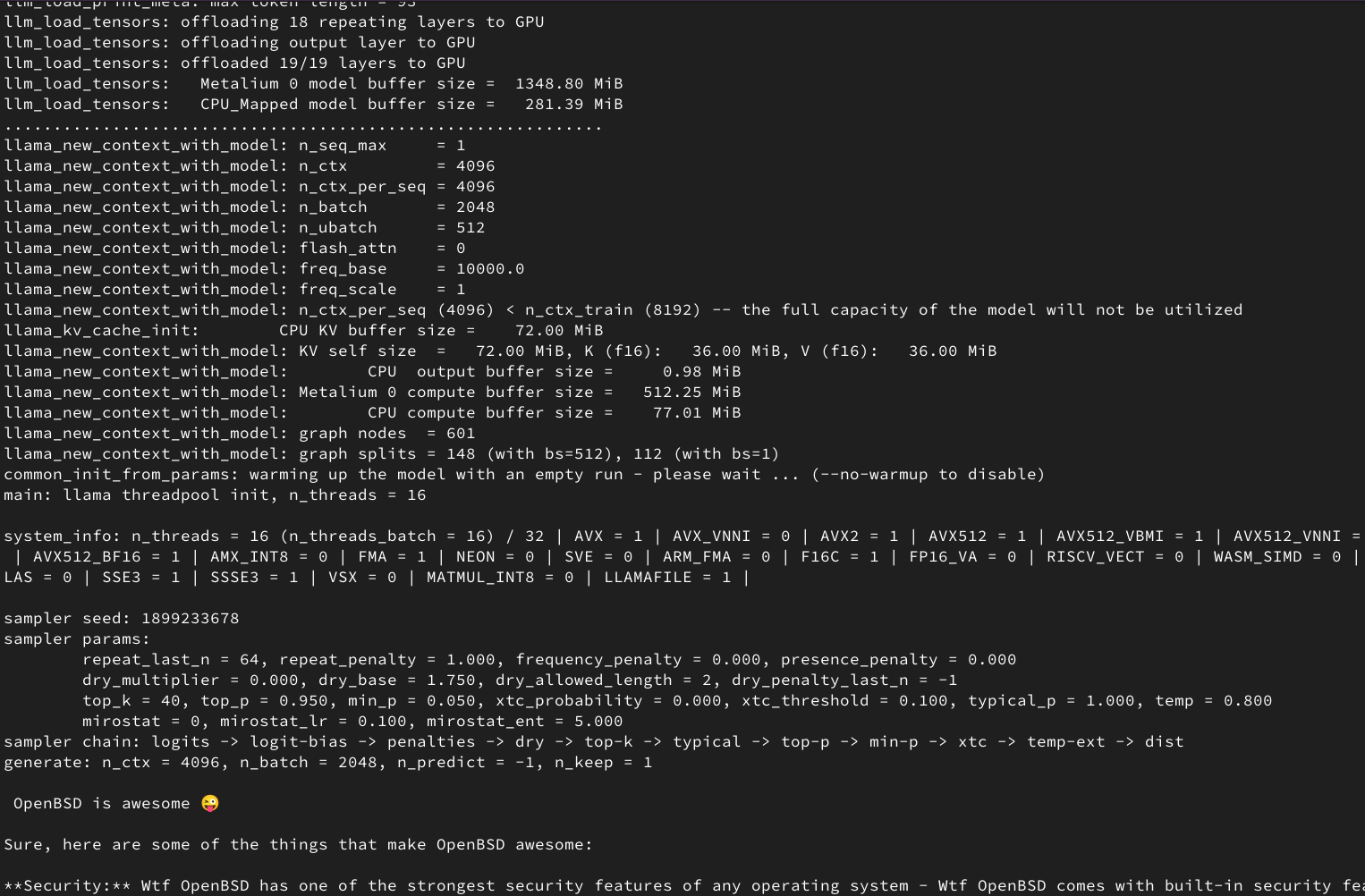

The wins just keep coming! As of my last post, less than a month ago, my GGML backend was able to run a single layer of TinyLLaMA on a Tenstorrent Wormhole n300. Offloading more layers caused the model to start spilling out gibberish. With lots of time and sanity invested, I was able to get the entire TinyLLaMA running on device (at least the parts where TTNN has corresponding operators), plus other small LLMs like Gemma 2B and Qwen 1.5B. This is such a fun project.

Small models for now because debugging them is much easier and load times are faster. Eventually I will get to the big ones.

I consider this a birthday present I won for myself. Literally, tomorrow is my birthday. Let's go baby!

Nasty, nasty bugs

Actually, I was able to offload all layers onto Wormhole last month. The problem was that the model became completely incoherent. That is not unexpected, as nearly nothing works on the first shot, not even with multiple unit tests having your back: GGML has its own test-backend-ops and I wrote my own test-metalium to check for different things. After about 2 days of staring at the screen and trying to figure the madness out, I was able to isolate the problem down to 2 sets of weird conditions.

- Disable Add, Mul, MatMul and Softmax, and everything is fine

- Disable everything but MatMul, yet the LLM is still incoherent

Assuming the operators work independently from each other, this is a quite paradoxical set of conditions. At last I decided to focus on just offloading MatMul and see what's wrong with it. Obviously the problem would be operator accuracy/numeric stability.. right.....? TTNN uses a struct called DeviceComputeKernelConfig to determine how accurate the FPU and accumulator should be. Cranked that up to the max. Still incoherent. Another day and a half gone by. I tried every trick in the book. Even de-offloading the MatMul and coding myself a bare-bones MatMul in C that is known to be correct. Still incoherent.
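For reference, a bare-bones MatMul of the kind described above can be as small as the sketch below. It is an illustration only, not the code from the backend: the name `matmul_ref` and the dense row-major layout for all three matrices are assumptions, and GGML's own MUL_MAT operand layout is not reproduced here.

```c
#include <assert.h>
#include <stddef.h>

/* Reference product c[m x n] = a[m x k] * b[k x n].
 * All matrices are dense and row-major (an assumption of this sketch).
 * The inner sum is accumulated in double so the reference itself adds
 * as little rounding error as possible. */
void matmul_ref(const float *a, const float *b, float *c,
                size_t m, size_t k, size_t n) {
    assert(a != NULL && b != NULL && c != NULL);
    for (size_t i = 0; i < m; i++) {
        for (size_t j = 0; j < n; j++) {
            double acc = 0.0;
            for (size_t p = 0; p < k; p++) {
                acc += (double)a[i * k + p] * (double)b[p * n + j];
            }
            c[i * n + j] = (float)acc;
        }
    }
}
```

Something this simple is slow, but it is easy to trust, which is the whole point when the goal is to rule the operator out as the source of the problem.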

At this point I am 70% convinced that the problem is not the operator. Something else in the code path must be the cause. Eyeballing the code and adding asserts turned up nothing of interest. Nor can I rely on GGML's test_falcon and test_llama test cases, as those unit tests do not support multi-backend scheduling (the Metalium backend lacks the ROPE and ALiBi ops, so it currently needs the CPU as a backup).

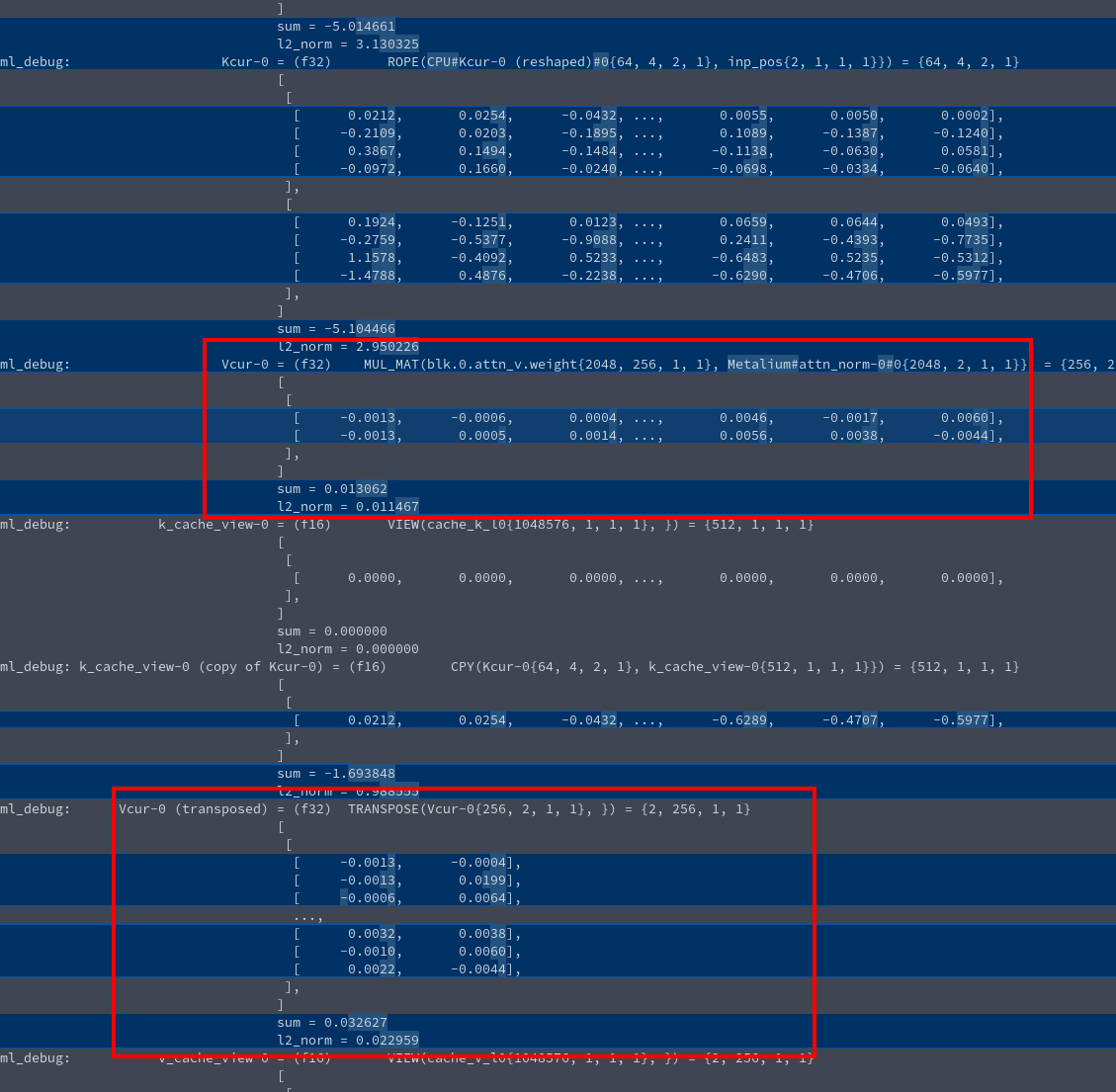

The llama.cpp GitHub discussion page is an awesome place to ask for help. I mean, as long as you are professional and ask good questions. I posted my situation and one of the developers replied that I could try using llama-eval-callback instead. It takes in the prompt, does a single forward pass and dumps all of the intermediate tensors to stdout. Now I can literally diff the tensors to see where they start to diverge significantly. Lo and behold, transpose doesn't actually transpose!
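The "diff the tensors" idea boils down to finding the first element where two dumps of the same tensor disagree by more than a tolerance. Here is a minimal sketch of that comparison, assuming the values have already been parsed into float arrays. The function name `first_divergence` and the tolerance scheme are made up for illustration and say nothing about the actual output format of llama-eval-callback.

```c
#include <assert.h>
#include <stddef.h>

/* Returns the index of the first element where `candidate` differs from
 * `reference` by more than abs_tol + rel_tol * |reference[i]|,
 * or -1 if the two dumps agree everywhere. */
long first_divergence(const float *reference, const float *candidate,
                      size_t n, float abs_tol, float rel_tol) {
    assert(reference != NULL && candidate != NULL);
    for (size_t i = 0; i < n; i++) {
        float magnitude = reference[i] < 0.0f ? -reference[i] : reference[i];
        float diff = reference[i] - candidate[i];
        if (diff < 0.0f) {
            diff = -diff;
        }
        /* Written as !(diff <= limit) so that a NaN also counts as a mismatch. */
        if (!(diff <= abs_tol + rel_tol * magnitude)) {
            return (long)i;
        }
    }
    return -1;
}
```

Running this over each intermediate tensor in graph order, CPU-only run versus offloaded run, points at the first operator whose output goes wrong.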

Of course the CPU is the one doing the transpose, since I banned Wormhole from the transpose operator. What doesn't make sense is why there's a duplicated element and why the source and transposed tensors have the same order of elements. Both start with -0.0013, -0.0013, -0.006, ... All the while, the CPU's transpose operator is very well tested and has been there since the early days. I opened up the implementation and saw this.

    static void ggml_compute_forward_transpose(
            const struct ggml_compute_params * params,
            const struct ggml_tensor * dst) {
        // NOP
        UNUSED(params);
        UNUSED(dst);
    }

D'oh. Yes, it makes sense. As in most deep learning frameworks, transpose is not a real data-moving operator. Instead, it simply swaps the strides and dimensions of the tensor. Only later, when the tensor is accessed, is whatever reads it responsible for actually respecting the strides. TTNN, however, is not row-major and does need to perform the transpose actively. Even though I banned the transpose operator, GGML still has to copy the tensor from device to host to handle unsupported operators. During that process, my backend sees GGML trying to copy the tensor and does a transpose, while GGML expects transpose to be a no-op and applies the swapped strides itself. The two transposes cancel each other out, so the tensor ends up in the original order. The problem doesn't show up when MatMul is not offloaded because the copy from Wormhole to CPU never happens.
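To make the cancellation concrete, here is a small sketch of a lazy (stride-swapping) transpose next to an eager (data-moving) one. The `view2d` struct and its helpers are hypothetical and much simpler than GGML's real tensor struct; strides are counted in elements rather than bytes, and a square matrix is used so that the double transpose cancels exactly.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical 2D view: a data pointer plus shape and strides (in elements). */
typedef struct {
    const float *data;
    size_t rows, cols;
    size_t row_stride, col_stride;
} view2d;

/* Lazy transpose: swap shape and strides, never touch the data. */
view2d view_transpose(view2d v) {
    view2d t = { v.data, v.cols, v.rows, v.col_stride, v.row_stride };
    return t;
}

/* Element access that respects the strides. */
float view_at(view2d v, size_t r, size_t c) {
    assert(r < v.rows && c < v.cols);
    return v.data[r * v.row_stride + c * v.col_stride];
}

/* Eager transpose: physically reorder the elements into `out`, which must
 * hold rows * cols floats. Returns a dense row-major view of the result. */
view2d eager_transpose(view2d v, float *out) {
    for (size_t r = 0; r < v.rows; r++) {
        for (size_t c = 0; c < v.cols; c++) {
            out[c * v.rows + r] = view_at(v, r, c);
        }
    }
    view2d t = { out, v.cols, v.rows, v.rows, 1 };
    return t;
}
```

Either transpose alone gives the right answer. The bug corresponds to doing both: the data is reordered eagerly, and the reader then applies the lazy view's swapped strides to the already reordered buffer. For a square matrix that reads back exactly the original, untransposed values.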

A special code path is added to not transpose if GGML is trying to copy out a transposed tensor. Likewise for reshape and permute. Now the model is coherent with MatMul offloaded to Wormhole.

That's not the end of the horrible bugs. The model is still incoherent when all operators are offloaded. Luckily I was able to narrow the problem down to the CONT operator. This operator simply realizes a tensor and makes it contiguous.. wait what? Not again.

So I ran the same "diff the tensors" trick and stared at my monitor. Initially it looked like permute was going wrong, but I ruled that out as my monkey brain being monkey. Eventually a stroke of inspiration hit me: I looked at the tensor_set function, which uploads data from the CPU to the Wormhole, to check whether I was making potentially flawed assumptions. Two or three hours later I realized GGML can and will try uploading permuted tensors. TTNN, again as something that doesn't understand row-major, needs all uploads to start correctly ordered. Some special detection and handling later, all the operators I support can now be offloaded to Wormhole successfully.
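The detection and handling can be sketched roughly as follows: check whether the strides describe a dense row-major tensor, and if they do not, gather the elements into dense order before uploading. The `tensor_meta` struct here is a stand-in loosely modeled on GGML's `ne` (sizes) and `nb` (byte strides) arrays, with dimension 0 moving fastest. The helper names are hypothetical and this is not the backend's actual code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_DIMS 4

/* Hypothetical tensor metadata: ne[i] is the size of dimension i and
 * nb[i] its stride in bytes. Dimension 0 is the fastest-moving one. */
typedef struct {
    size_t ne[MAX_DIMS];
    size_t nb[MAX_DIMS];
    size_t elem_size;
} tensor_meta;

/* True if the strides match a dense layout with dimension 0 innermost.
 * Dimensions of size 1 are skipped since their stride is never used. */
bool is_dense(const tensor_meta *t) {
    size_t expected = t->elem_size;
    for (int i = 0; i < MAX_DIMS; i++) {
        if (t->ne[i] != 1 && t->nb[i] != expected) {
            return false;
        }
        expected *= t->ne[i];
    }
    return true;
}

/* Gathers a strided float tensor into dense order in `dst`, which must
 * hold ne[0] * ne[1] * ne[2] * ne[3] floats. */
void pack_dense(const tensor_meta *t, const void *src, float *dst) {
    assert(t->elem_size == sizeof(float));
    const char *base = (const char *)src;
    size_t k = 0;
    for (size_t i3 = 0; i3 < t->ne[3]; i3++)
    for (size_t i2 = 0; i2 < t->ne[2]; i2++)
    for (size_t i1 = 0; i1 < t->ne[1]; i1++)
    for (size_t i0 = 0; i0 < t->ne[0]; i0++) {
        const char *p = base + i0 * t->nb[0] + i1 * t->nb[1]
                             + i2 * t->nb[2] + i3 * t->nb[3];
        dst[k++] = *(const float *)p;
    }
}
```

An upload path could call `is_dense` first and only pay for `pack_dense` when the incoming tensor is permuted.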

I mean, ouch. Cross-backend interaction is indeed an area not tested by the unit tests. I just wasn't expecting how much of a pain it would be.

What's next?

LLMs are running now, so building for functionality is pretty much done. The next thing is performance. TinyLLaMA 1B running at 6 tok/s on a 150W chip is nowhere near competitive. Heck, Tenstorrent's hand-optimized LLaMA 3.1 8B runs at 24 t/s/u. There's more than 40x to go. I added some debug flags to dump out all the operators my backend rejects. Here are the ops, and the number of times each was rejected, for a single forward pass of TinyLLaMA 1B.

ROPE 1122

MUL_MAT 352

GET_ROWS 55

SOFT_MAX 66

Gemma 2B:

ROPE 918

MUL_MAT 288

GET_ROWS 55

SOFT_MAX 54

Qwen 1.5B:

ROPE 1428

MUL_MAT 466

GET_ROWS 55

SOFT_MAX 84

It's a surprisingly small set of rejected operators, though each of them is a heavy hitter. Especially GET_ROWS. Without proper support, GGML has to copy the entire tensor to the CPU, grab the rows and discard the rest. Plus TTNN has to convert the tensor to row-major. That is a lot of work for very little done, which I hope I can solve soon. Hopefully I can get some support from Tenstorrent's engineers. GGML is really looking like a promising way to bring models to Tenstorrent's hardware, easily.
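To show why falling back is so wasteful for GET_ROWS, here is a minimal CPU-side sketch of what the operator computes: picking a handful of rows out of a large table by index. The function name and signature are made up for illustration, and a dense row-major float table is assumed.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Copies row ids[i] of `table` (n_rows x row_len, dense row-major) into
 * row i of `out` (n_ids x row_len). Only n_ids * row_len floats are
 * actually needed, no matter how large the table is. */
void get_rows(const float *table, size_t n_rows, size_t row_len,
              const int *ids, size_t n_ids, float *out) {
    for (size_t i = 0; i < n_ids; i++) {
        assert(ids[i] >= 0 && (size_t)ids[i] < n_rows);
        memcpy(out + i * row_len,
               table + (size_t)ids[i] * row_len,
               row_len * sizeof(float));
    }
}
```

In the fallback path the whole `n_rows * row_len` table crosses from device to host just so a few rows can be picked out of it, which is why doing the gather on the device would save so much work.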

Martin Chang

Systems software, HPC, GPGPU and AI. I mostly write stupid C++ code. Sometimes I do AI research. Chronic VRChat addict.

I run TLGS, a major search engine on Gemini. Used by Buran by default.

- martin \at clehaxze.tw

- Matrix: @clehaxze:matrix.clehaxze.tw

- Jami: a72b62ac04a958ca57739247aa1ed4fe0d11d2df