Memory on Tenstorrent

This post assumes you are somewhat familiar with the Tenstorrent software stack and with basic computer architecture concepts. It is aimed at software developers who are interested in programming Tenstorrent's hardware, whether via TTNN or directly using Metalium, but are lost with the memory magic.

When I first started programming Metalium, memory was a "you do this and that, then it works" kind of thing. That didn't sit well with me. At that point I understood that each Tensix core only has direct access to its own SRAM, but I never figured out how all the magic parameters in DRAM allocation work.

    std::shared_ptr<Buffer> MakeBuffer(IDevice* device, uint32_t size, uint32_t page_size, bool sram) {
        InterleavedBufferConfig config{
            .device = device,
            .size = size,
            .page_size = page_size,
            .buffer_type = (sram ? BufferType::L1 : BufferType::DRAM)};
        return CreateBuffer(config);
    }

    std::shared_ptr<Buffer> MakeBufferBFP16(IDevice* device, uint32_t n_tiles, bool sram) {
        constexpr uint32_t tile_size = sizeof(bfloat16) * TILE_WIDTH * TILE_HEIGHT;
        // For simplicity, all DRAM buffers have page size = tile size.
        const uint32_t page_tiles = sram ? n_tiles : 1;
        return MakeBuffer(device, tile_size * n_tiles, page_tiles * tile_size, sram);
    }

Also, in the kernels there's this InterleavedAddrGenFast that somehow knows how to access DRAM while needing less information than noc_async_read. Why?

    const InterleavedAddrGenFast<true> c = {
        .bank_base_address = c_addr,
        .page_size = tile_size_bytes,          // free, known at compile time
        .data_format = DataFormat::Float16_b,  // free, known at compile time
    };
    noc_async_write_tile(idx, c, l1_buffer_addr);

    // vs

    noc_async_read(
        get_noc_addr_from_bank_id<true>(
            // none of the below are compile time info
            dram_buffer_bank, dram_buffer_addr),
        l1_buffer_addr,
        dram_buffer_size);

I only fully understood what's going on after reading several tech reports, followed by completely rewriting their memory allocator for speed. I also recommend Corsix's FOSDEM talk as very good complementary material, which I truly wish I had while writing the allocator. This post is a summary that aims to help people understand how exactly memory works on Tenstorrent, focusing especially on its use in TTNN, as that's the primary consumer of Metalium.

Prerequisite knowledge

RISC-V address space

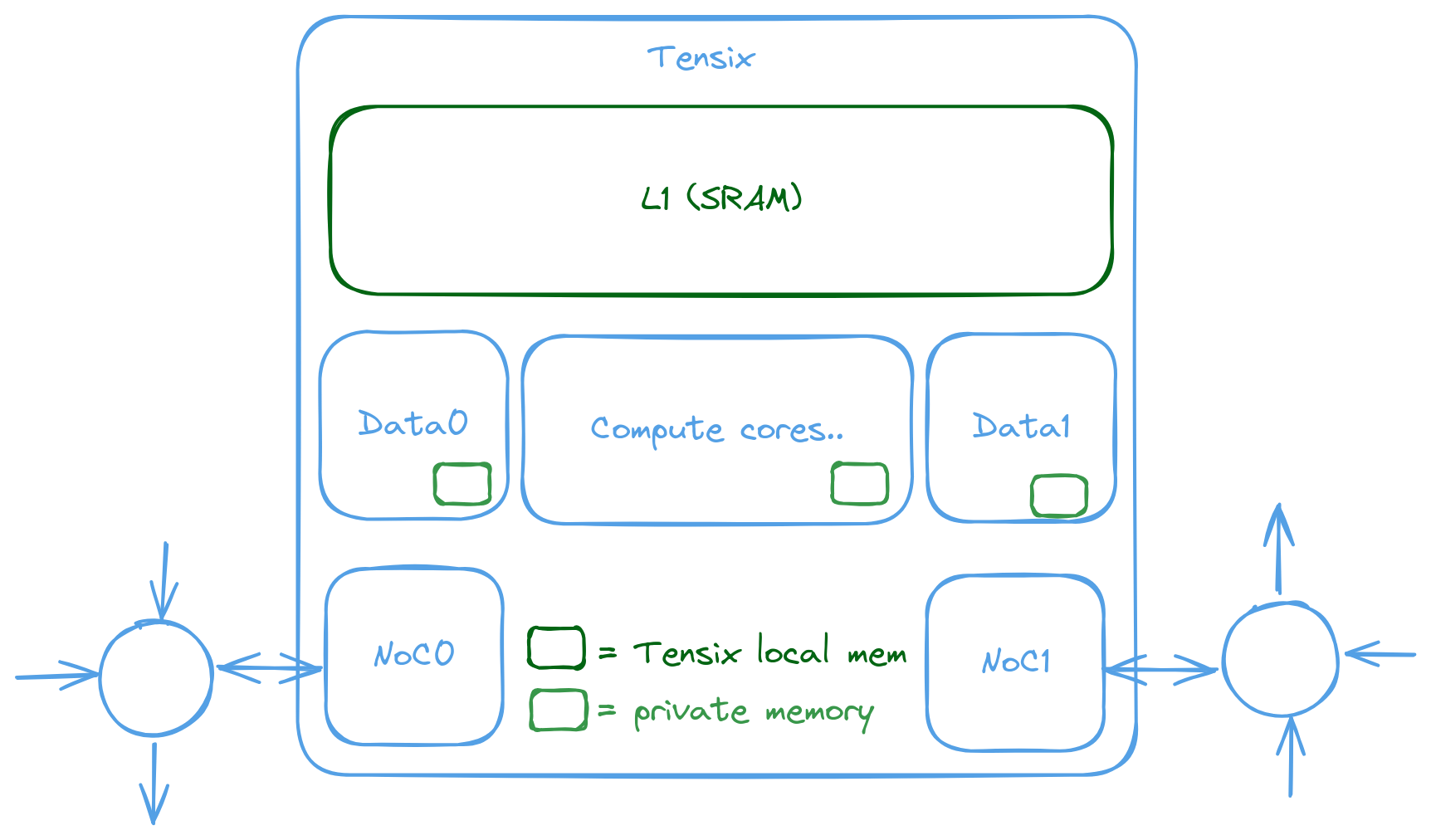

Let's start from the very basics. When the following kernel runs on data movement core 0, its code is stored in the Tensix's shared SRAM (Tenstorrent calls it L1, but welp) and there's a per-core private memory holding the contents of the stack. This reduces the pressure on the SRAM from all 5 cores running and trying to access the same memory (there's more than just the 5 RISC-V cores that want access to it, and a true N-port SRAM is very expensive). Every RISC-V core can access both L1 and its own private memory, and all cores have L1 mapped at the same address.

    // the function (machine code) lives in L1
    void kernel_main() {
        int a = 0;  // lives in per-core private memory

        // The variable itself is in per-core private memory but points to a circular buffer in L1.
        // Stupid, but because L1 is mapped in the core's address space, you can cast it to a pointer
        // and do whatever you want with it. It's just slow (the core is called baby for a reason).
        cb_wait_front(tt::CBIndex::c_16, 1);
        uint32_t cb_idx = get_read_ptr(tt::CBIndex::c_16);

        // this is passed by the host and "points" to DRAM (not the right term but close enough)
        uint32_t dram_addr = get_arg_val<uint32_t>(0);
    }

The memory blocks within the Tensix can be visualized with the following diagram. I've only shown blocks relevant to memory, as compute is not the focus of this post. The L1 is a shared block of SRAM that all cores have access to. Each core has its own private memory. And there are NoC interfaces that allow the data movement cores to receive and issue NoC requests.

DRAM tiles

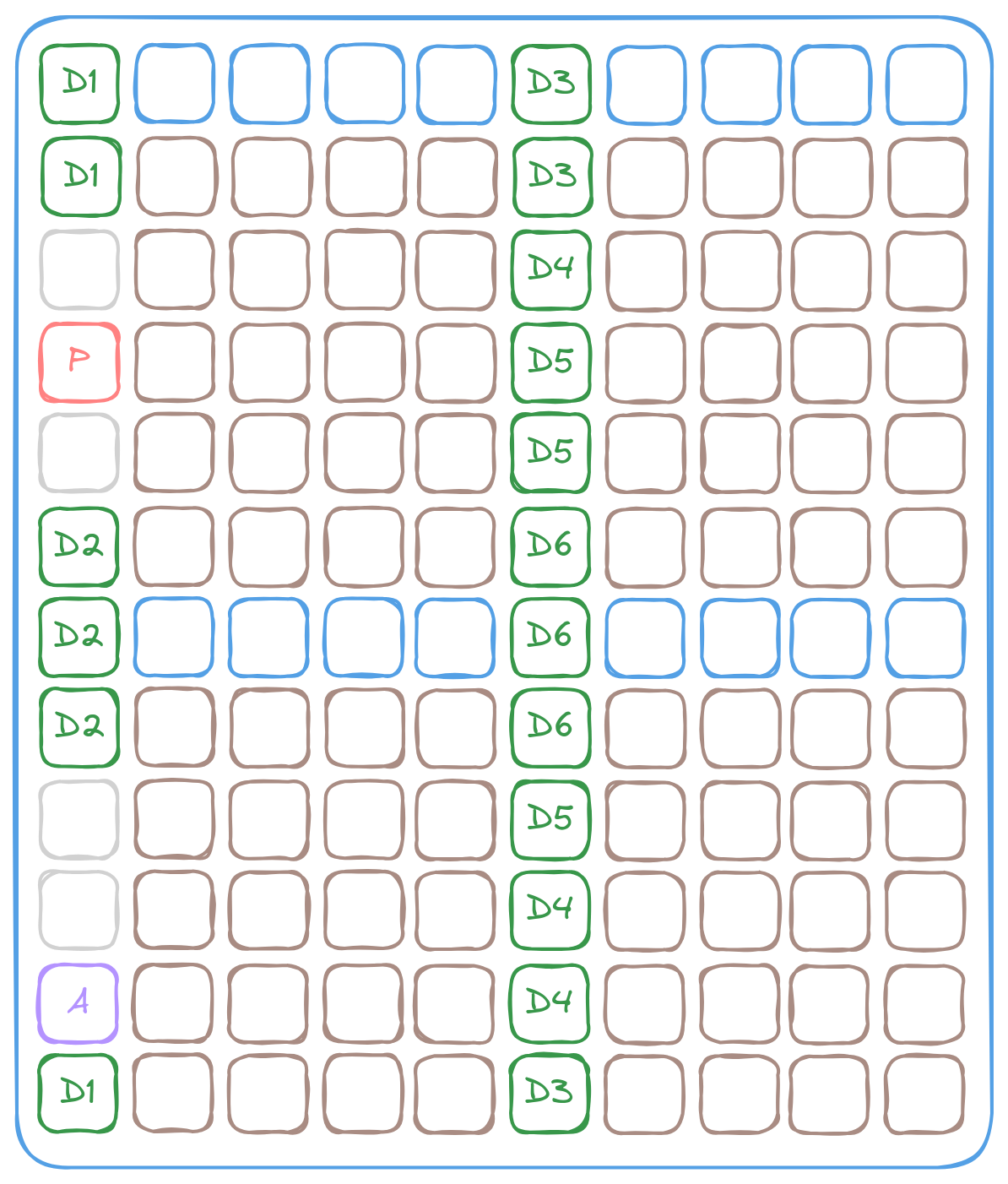

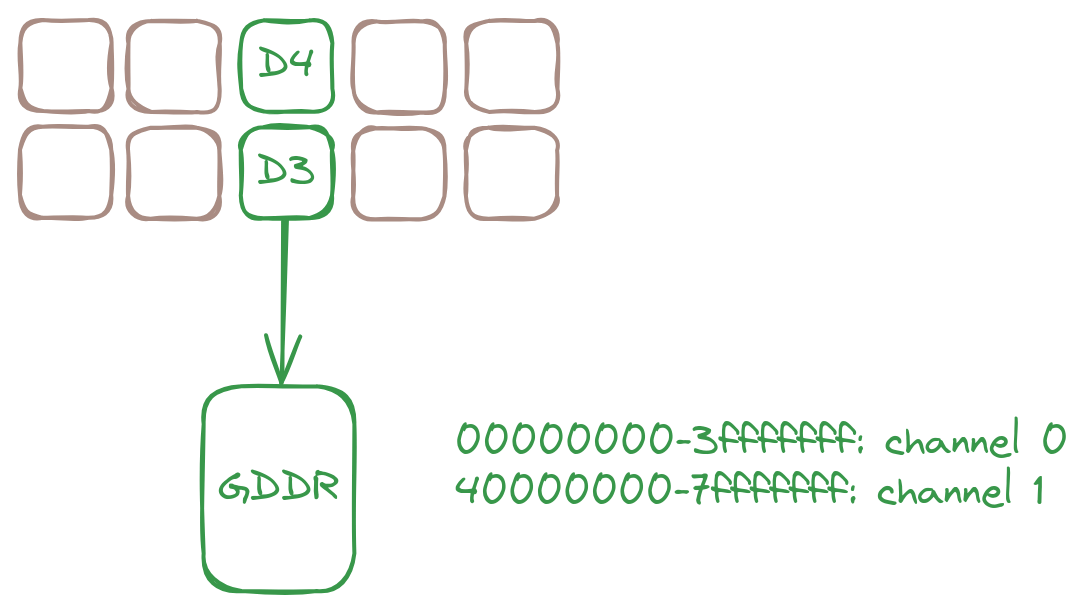

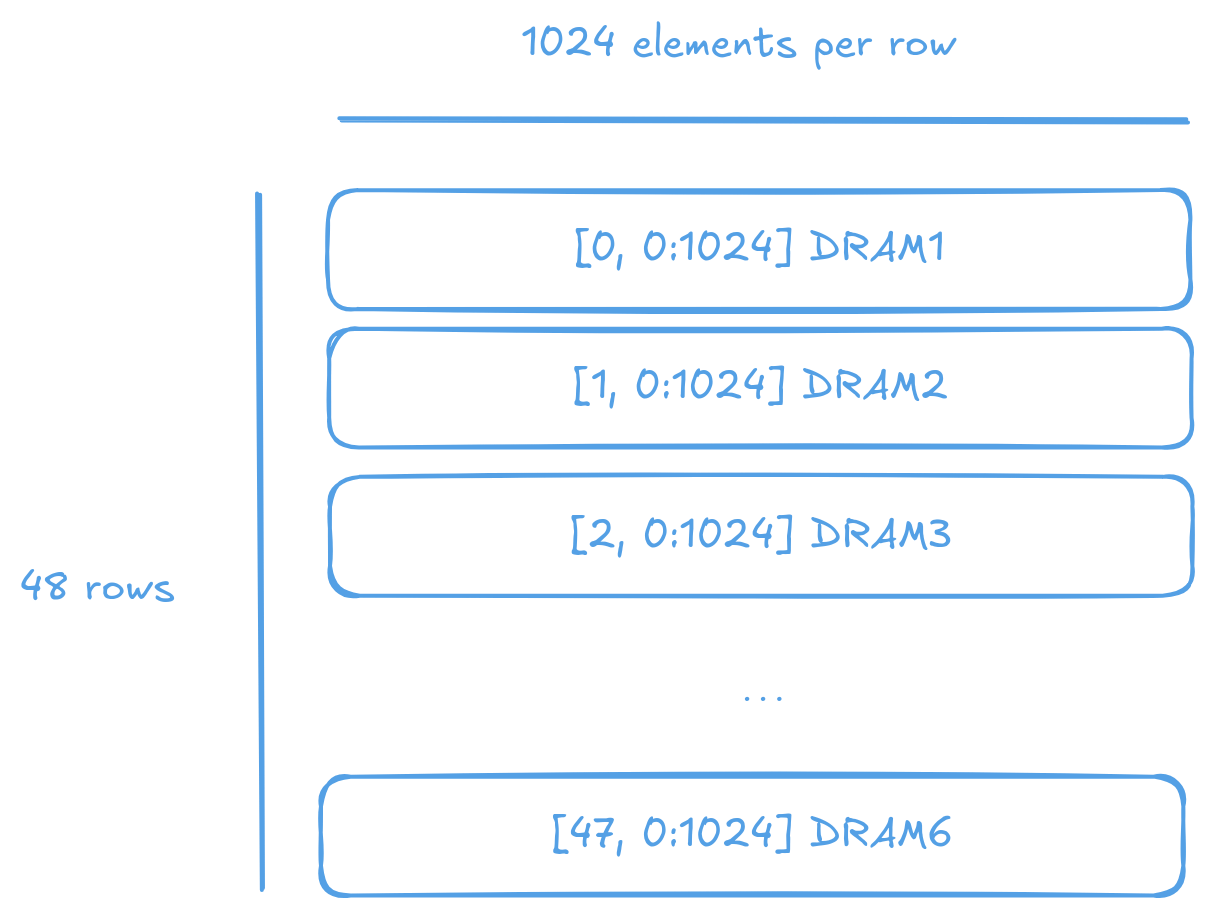

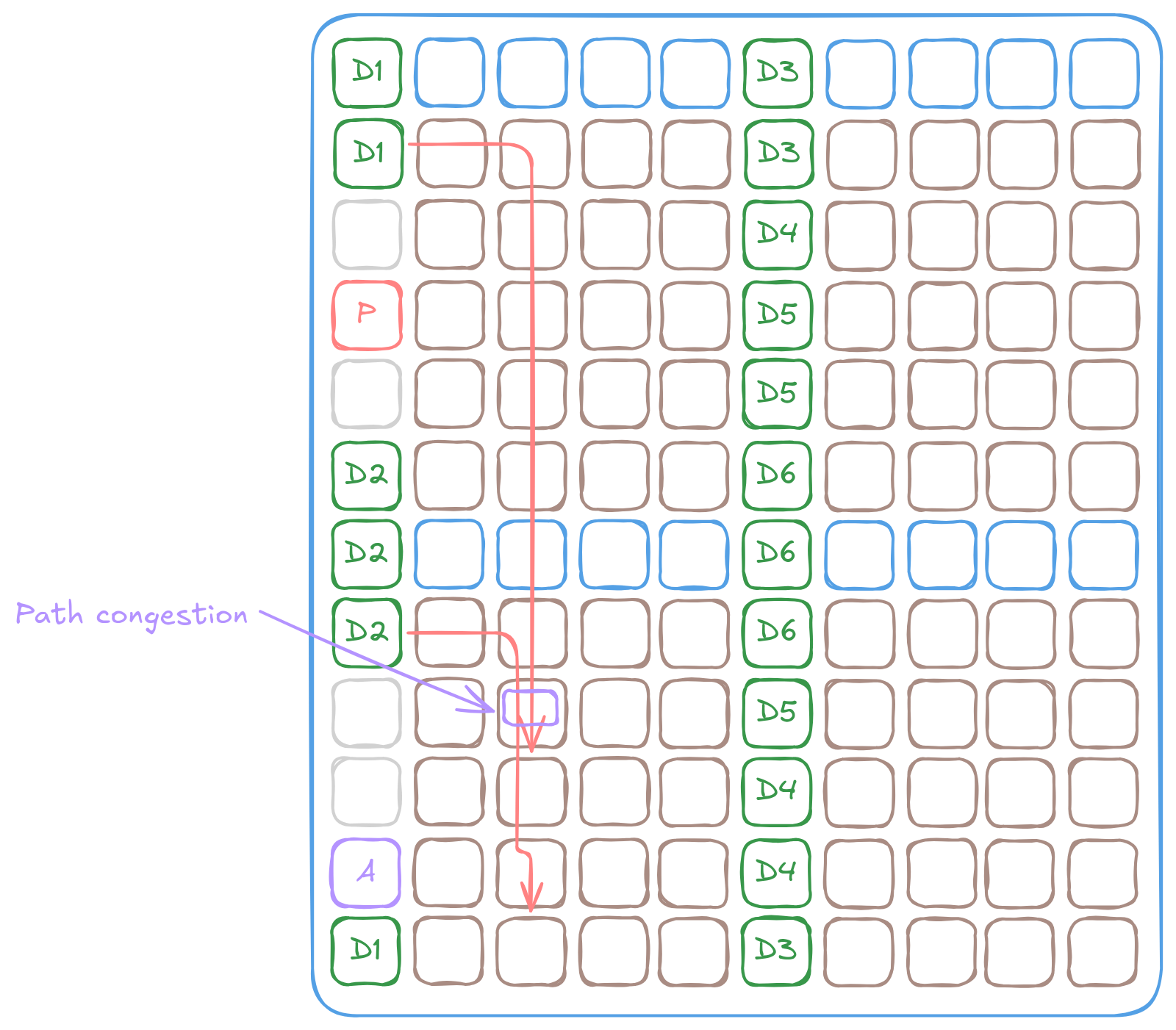

Wormhole has 6 DRAM controllers, each connected to a 2GB GDDR6 chip (2 channels, 1GB per channel). The following image shows Wormhole's NoC grid with the DRAM tiles labeled D1 to D6, connecting to controllers 1 to 6. Note that multiple DRAM tiles connect to the same DRAM controller to increase NoC connectivity, and that the distribution of DRAM tiles is not uniform. Their placement is roughly based on the ease of physical routing.

Within each GDDR6 chip, the 1st channel is mapped starting at address 0 and the 2nd channel starting at address 1GB, which makes the math during DRAM access really easy.
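To make the "easy math" concrete, here is a minimal sketch in plain Python (not Metalium code) of picking the channel from an address. The helper name `dram_channel` is made up for illustration, and it assumes "1GB" means 2**30 bytes.

```python
GIB = 1 << 30  # assumption: "1GB" in the text means 2**30 bytes


def dram_channel(addr: int) -> int:
    """Return the GDDR6 channel (0 or 1) that a DRAM-tile address lands in."""
    if not 0 <= addr < 2 * GIB:
        raise ValueError("address is outside the 2GB behind one controller")
    return addr // GIB  # first 1GB -> channel 0, second 1GB -> channel 1


print(dram_channel(0x1000))        # 0
print(dram_channel(GIB + 0x1000))  # 1
```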

NoC requests

The above covers what the RISC-V cores can see using pointers: their own private memory and the shared L1. There needs to be a way to access the L1 of other Tensix cores and the DRAM. That's where NoC requests come in. The NoC allows you to DMA into and out of any tile on the NoC. You just specify the (x, y) coordinate pair of the tile and the address within that tile you want to read from or write to. The NoC will take care of the rest.

    uint64_t noc_addr = get_noc_addr(x, y, addr_on_target_tile);
    noc_async_read(noc_addr, l1_buffer_addr, dram_buffer_size);

    // for writing
    noc_async_write(l1_buffer_addr, noc_addr, dram_buffer_size);

This works universally for all tiles on the NoC (as long as the address is mapped to valid memory). If the NoC request goes to a Tensix or Ethernet tile, it reads/writes L1. If it goes to a DRAM tile, it runs against DRAM. If it goes to the PCIe controller, you have poked the peripheral. Instead of a flat, linear address space, the real address on Tensix is a tuple of (x, y, addr).

Accessing DRAM should be obvious now. The following step will create a read request of size 0x100 to the DRAM tile D1 at address 0x1000.

    uint64_t noc_addr = get_noc_addr(0, 0, 0x1000);
    noc_async_read(noc_addr, l1_buffer_addr, 0x100);

As a matter of fact, the following can be inferred from the information above:

- All 3 D1 tiles are connected to the same DRAM controller

- Changing the x, y coordinate pair to point to another D1 tile and reading again returns the same data

- Address 0x1000 is within the first 1GB. So the 1st channel of the GDDR chip is used

- Even though there's only 2GB per DRAM controller, using all 6 DRAM controllers at the same time gives you the full 12GB
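The points above can be played with in a toy model. This is a sketch in plain Python, not how the hardware or Metalium is implemented. Only the fact that tile (0, 0) belongs to D1 comes from the text. The other coordinates in `TILE_TO_CONTROLLER` are made up for illustration, as are the names `NocAddr`, `noc_write` and `noc_read`.

```python
from typing import NamedTuple


class NocAddr(NamedTuple):
    """The 'real' address: a tile coordinate plus an address inside that tile."""
    x: int
    y: int
    addr: int


# Hypothetical tile map. Only (0, 0) -> D1 is taken from the text; the other
# coordinates are invented to show several tiles sharing one controller.
TILE_TO_CONTROLLER = {(0, 0): "D1", (0, 1): "D1", (0, 5): "D2"}

# One sparse byte store per DRAM controller: address -> byte value.
dram = {"D1": {}, "D2": {}}


def noc_write(dst: NocAddr, data: bytes) -> None:
    store = dram[TILE_TO_CONTROLLER[(dst.x, dst.y)]]
    for i, byte in enumerate(data):
        store[dst.addr + i] = byte


def noc_read(src: NocAddr, size: int) -> bytes:
    store = dram[TILE_TO_CONTROLLER[(src.x, src.y)]]
    return bytes(store.get(src.addr + i, 0) for i in range(size))


noc_write(NocAddr(0, 0, 0x1000), b"hello")
print(noc_read(NocAddr(0, 1, 0x1000), 5))  # another D1 tile sees the same bytes
print(noc_read(NocAddr(0, 5, 0x1000), 5))  # a D2 tile at the same address does not
```

The same address on a different controller is a different piece of memory, which is exactly why 6 controllers with 2GB each add up to 12GB.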

Memory layout

Due to the lack of a unified address space, putting data in memory is not as simple as dumping it into a buffer. Which memory chip holds the data and how the data is distributed are problems the software has to solve. There are several ways to approach this, and Tenstorrent chose a few that make sense for their target workload (ML). Several parameters go into the decision of where to put which part of the data.

- How to decide which DRAM chip stores which data?

- How to partition the data?

- How large a partition should be?

- Pointers work because they are unique values denoting objects. Now it's not so simple, as there are 6 DRAM banks. How do you program the thing?

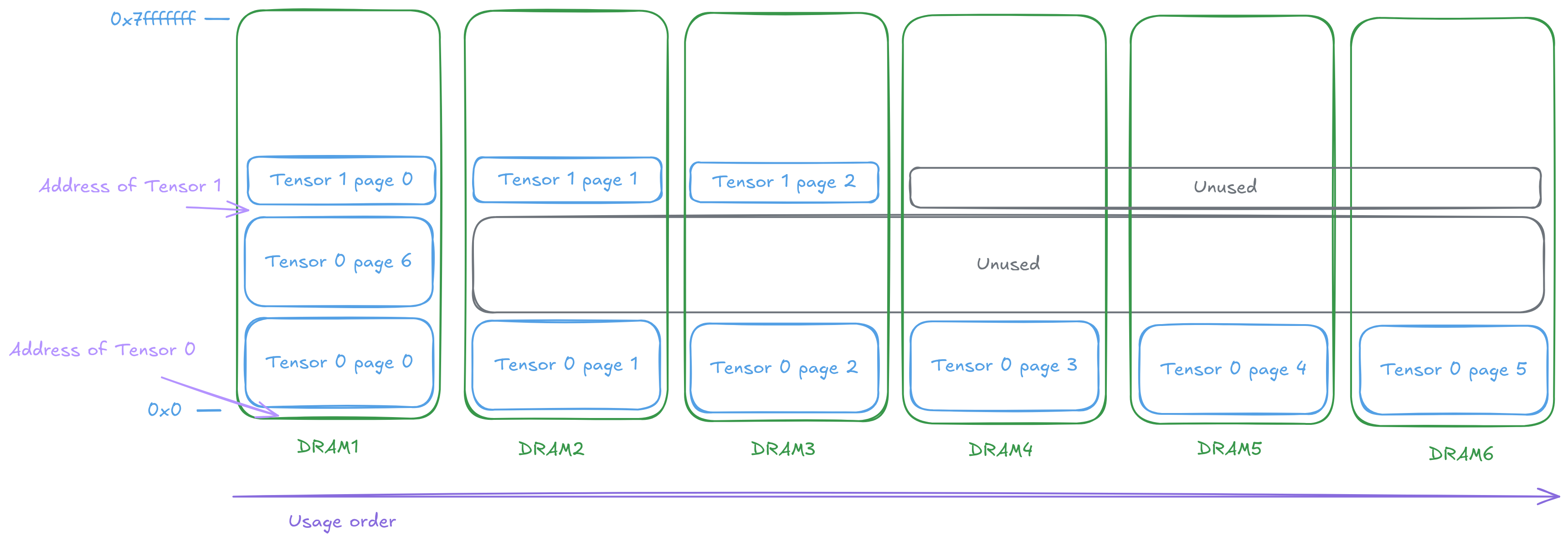

Lock step allocation

Let's solve the last question first. Imagine you have a tensor stored across D1 to D6, but with each part living at a different address. How exactly are you supposed to access it? Pass 6 pointers to the kernel? Future architectures may have more DRAM controllers, so this approach makes kernel programming not scalable. Tenstorrent's solution is brute force but effective: lock-step allocation. During allocation, we divide the tensor size by the number of DRAM controllers (rounding up), allocate as if the processor only had 1/N of the memory, then share the resulting address across all DRAM controllers, multiplying the space back. This way pointers stay unique and a single pointer is enough to denote an object.

Obviously there is a trade-off. If you ever need to allocate X amount of memory on a single DRAM controller, all other DRAM controllers have to allocate X as well. And if the allocated amount is not cleanly divisible by the number of DRAM controllers, some banks will have unused space. Still, the trade-off is worth it for the simplicity of the programming model. Instead of passing N pointers to the kernel, where N depends on the hardware architecture, you only need to pass 1, at the cost of some wasted space.
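A toy version of the idea, as a sketch in plain Python. This is not the real Metalium allocator, which among other things deals with alignment, pages and freeing. The class name `LockStepAllocator` and its methods are made up for illustration.

```python
import math


class LockStepAllocator:
    """Toy bump allocator: every returned address is valid on every bank."""

    def __init__(self, num_banks: int, bank_size: int):
        self.num_banks = num_banks
        self.bank_size = bank_size
        self.next_free = 0  # one shared offset, advanced in lock step on all banks

    def allocate(self, size: int) -> int:
        # Pretend we only have 1/N of the memory: each bank holds ceil(size / N).
        per_bank = math.ceil(size / self.num_banks)
        if self.next_free + per_bank > self.bank_size:
            raise MemoryError("out of memory")
        addr = self.next_free
        self.next_free += per_bank
        return addr  # a single "pointer" for the whole tensor

    def wasted(self, size: int) -> int:
        # Bytes reserved across all banks that the tensor does not actually use.
        return math.ceil(size / self.num_banks) * self.num_banks - size


alloc = LockStepAllocator(num_banks=6, bank_size=2 << 30)
a = alloc.allocate(100)   # 17 bytes reserved on each of the 6 banks
b = alloc.allocate(6000)  # starts right after, at offset 17 on every bank
print(a, b, alloc.wasted(100))  # 0 17 2
```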

Row major vs Tile layout

The next question is how to partition the data. Tenstorrent supports row major. You might already be familiar with it, as row major is the default for almost everything, like C, C++, Rust and Python. The data is stored row by row, one dimension at a time.

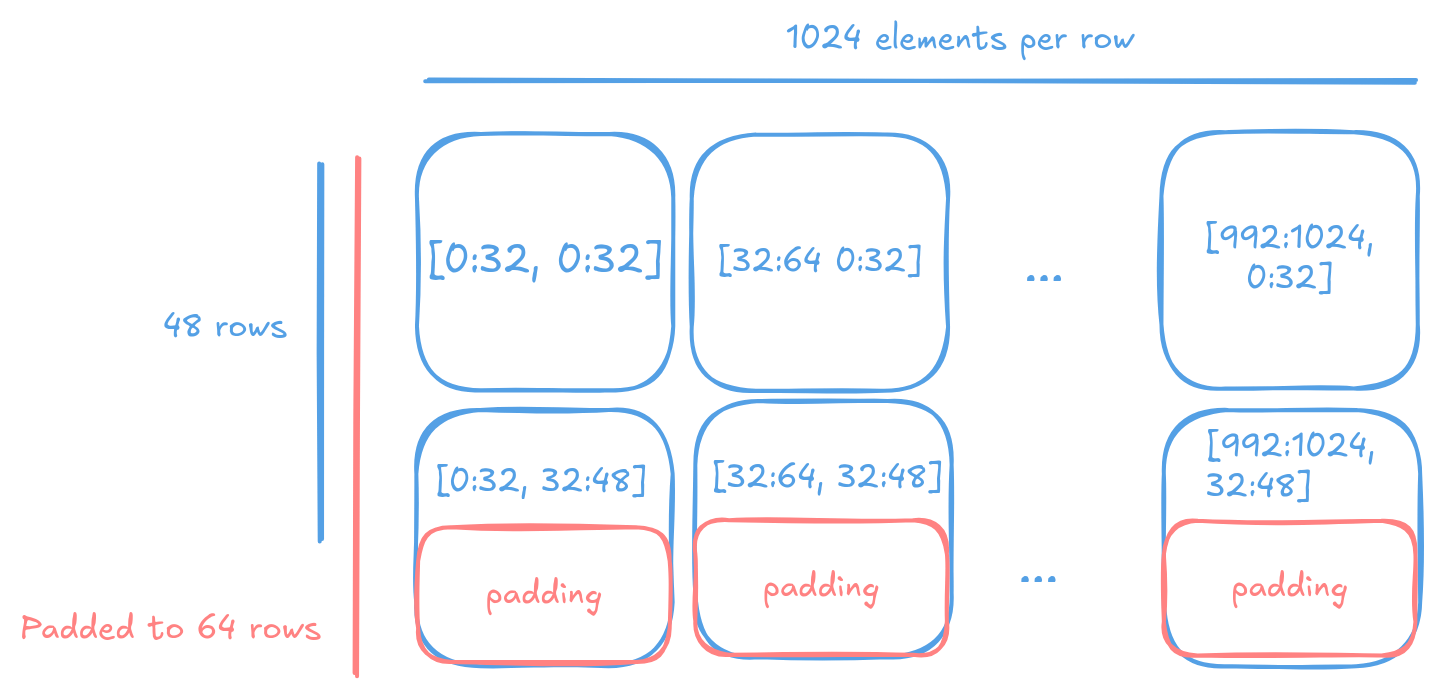

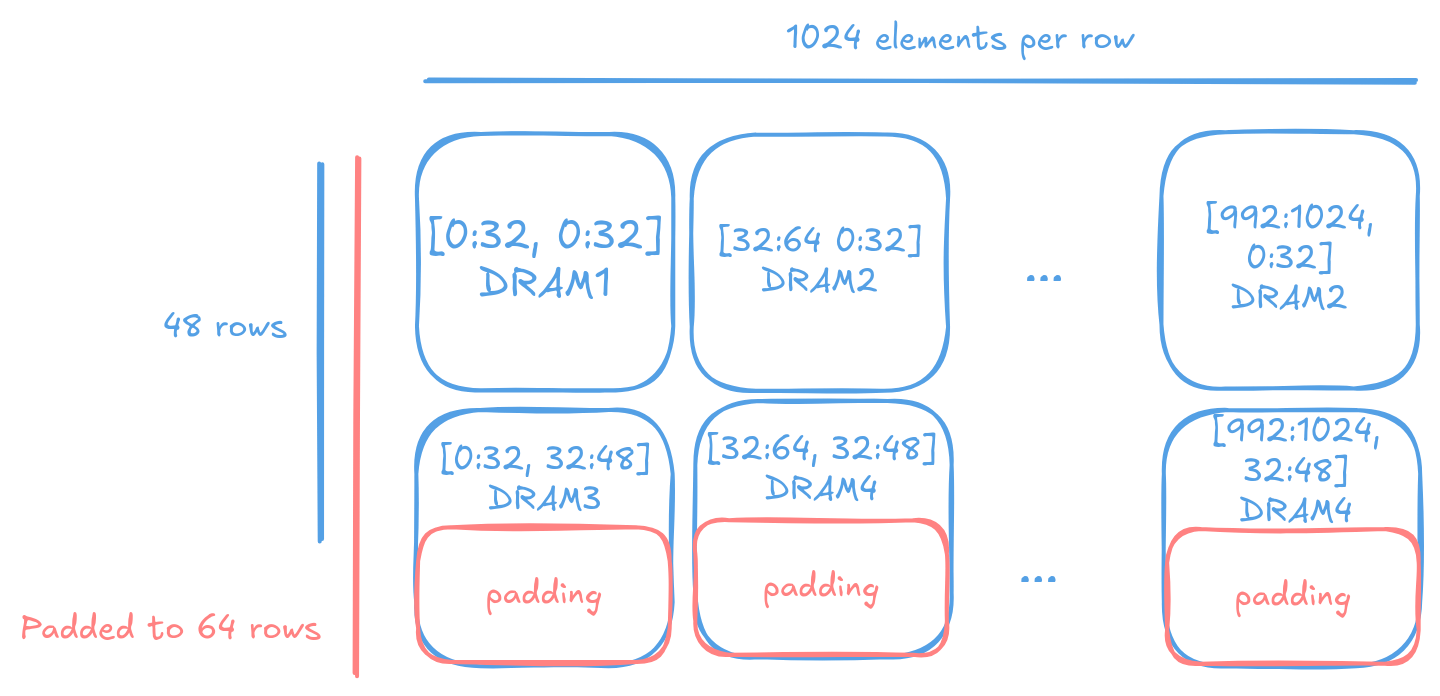

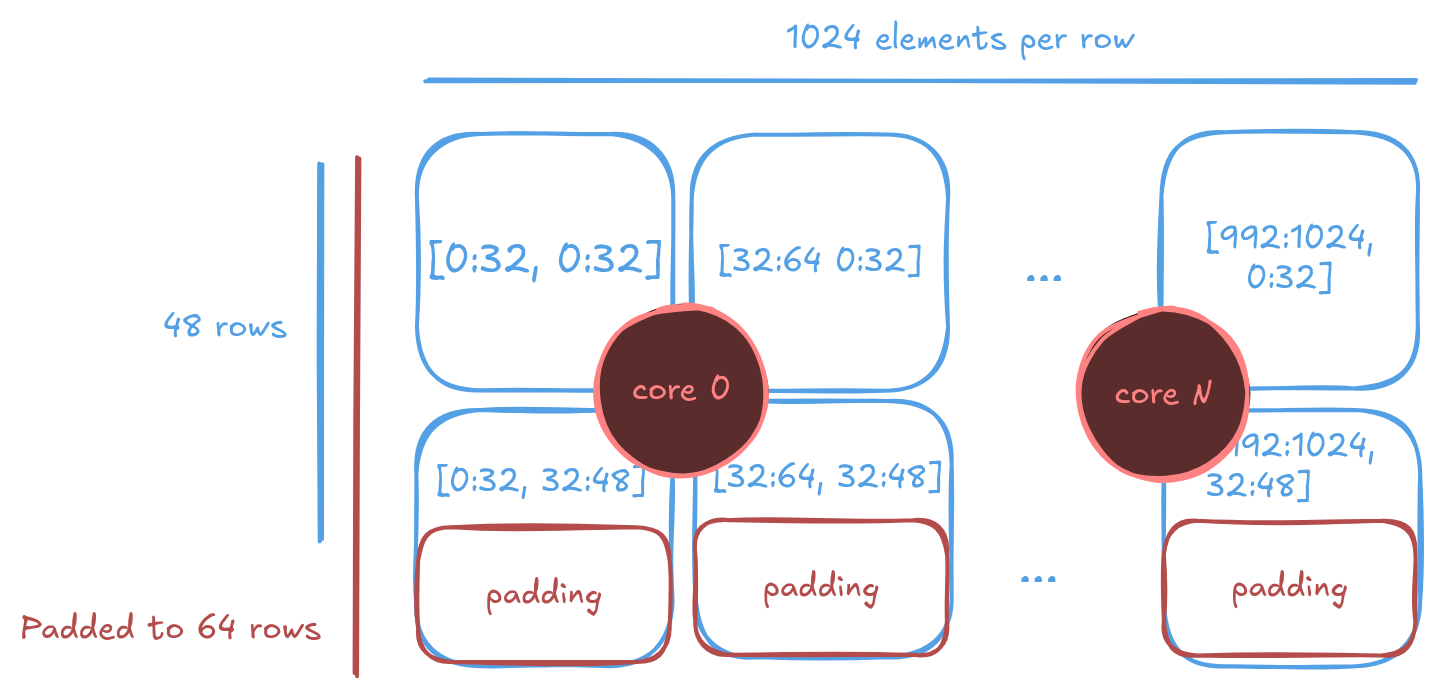

However, the tiled layout is the preferred layout for Tenstorrent's operator library. Any N-dimensional tensor has its last 2 dimensions tiled into 32x32 tiles, with dimensions that are not a multiple of 32 padded with zeros. This layout closely matches the hardware's capabilities and allows for better memory access patterns and performance.

This is really a distinction at the TTNN layer. By default a tensor on device is stored in row major (as frameworks like Torch do) and is converted to tiled layout upon user request.
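As a quick sanity check on the padding rule, here is a sketch in plain Python that works out the padded shape and the number of 32x32 tiles for a given tensor shape. The helper names are made up for illustration and none of this is TTNN API.

```python
TILE = 32  # tiles are 32x32 elements


def round_up(n: int, multiple: int) -> int:
    return -(-n // multiple) * multiple


def tiled_shape(shape):
    """Pad only the last 2 dimensions up to a multiple of 32."""
    *batch, height, width = shape
    return (*batch, round_up(height, TILE), round_up(width, TILE))


def tile_count(shape) -> int:
    *batch, height, width = tiled_shape(shape)
    count = (height // TILE) * (width // TILE)
    for dim in batch:
        count *= dim
    return count


print(tiled_shape((48, 1024)))  # (64, 1024): 48 rows get padded up to 64
print(tile_count((48, 1024)))   # 64 tiles: 2 tile rows x 32 tile columns
```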

import ttnn

import torch

device = ttnn.open_device(device_id=0)

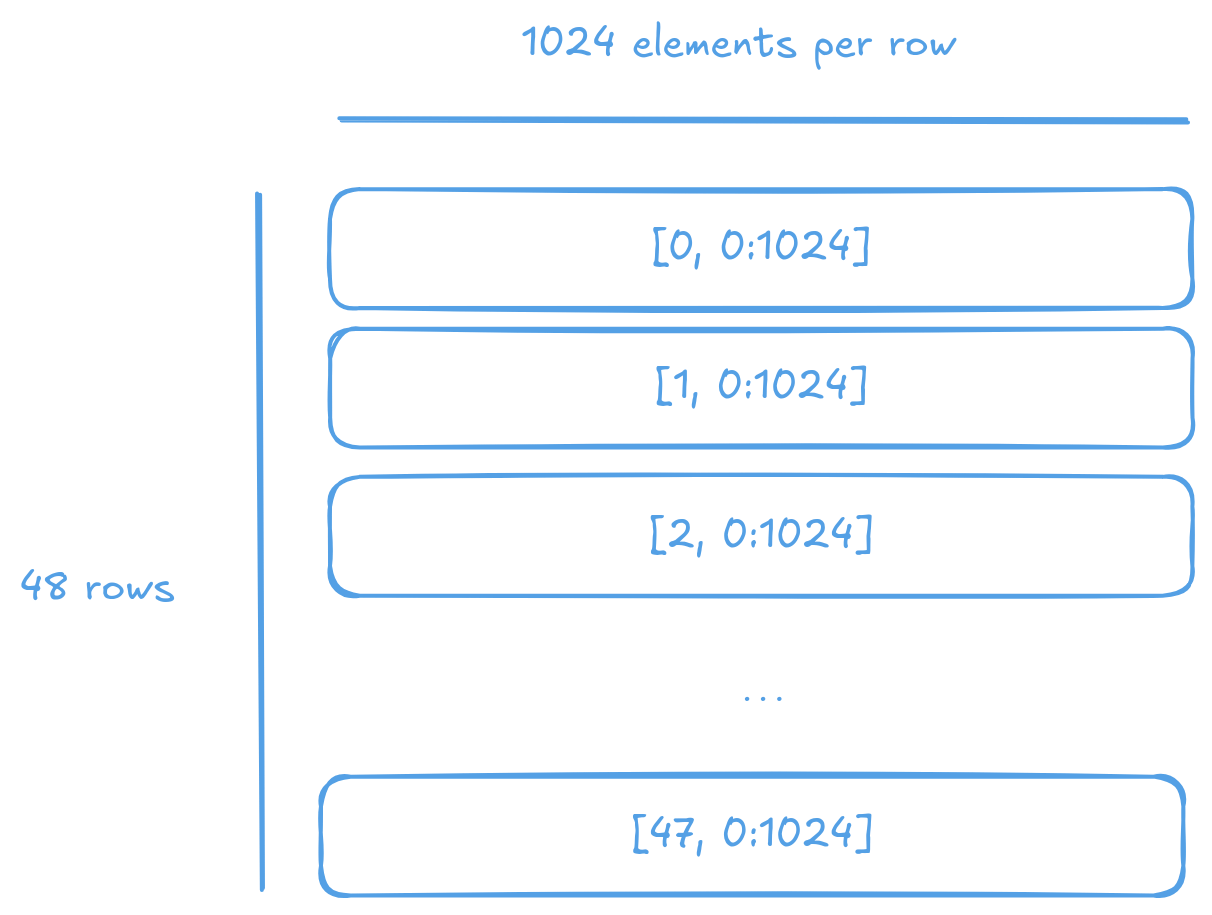

tensor = torch.rand(48, 1024)

t = ttnn.from_torch(tensor).to(device) # At this point the tensor is in row major

t = ttnn.tilize_with_zero_padding(t) # Now the tensor is in tiled layout

Interleaved memory

"Interleaved" just means round-robin. But what's the granularity of the round-robin? How much data is stored on each DRAM chip before moving on to the next one? This is what page_size controls in the allocation API: it is the size of each round-robin chunk. Say the page size is 1024 bytes and you allocate 2048 bytes. The first 1024 bytes will be stored on D1 and the next 1024 bytes on D2 (and nothing on D3 to D6, even though lock-step allocation reserves the same range there).

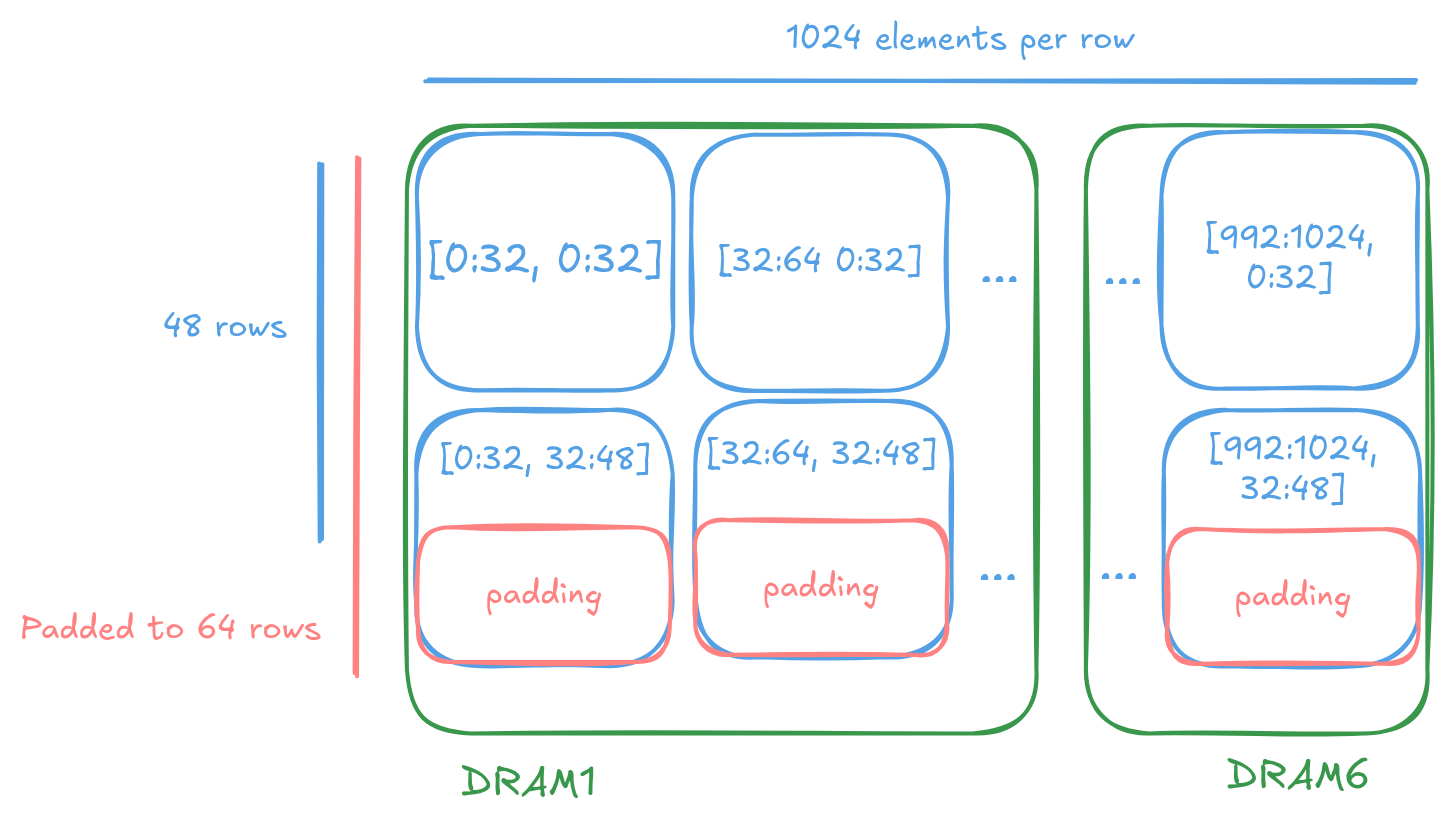
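The 2048-byte example can be sketched in a few lines of plain Python. Bank 0 stands for D1, bank 1 for D2 and so on. The function name `interleave` is made up for illustration, and offsets are relative to the buffer's base address.

```python
def interleave(size: int, page_size: int, num_banks: int = 6):
    """Return (bank, offset_in_bank) for every page of an interleaved buffer."""
    num_pages = -(-size // page_size)  # round up to whole pages
    return [
        (page % num_banks, (page // num_banks) * page_size)
        for page in range(num_pages)
    ]


print(interleave(2048, 1024))
# [(0, 0), (1, 0)]: one page on D1, one on D2, nothing on D3 to D6

print(interleave(8 * 1024, 1024))
# pages 6 and 7 wrap around to banks 0 and 1, one page further in
```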

Tenstorrent's operator library, TTNN, supports 2 data layout modes: the classic row major and a tiled layout. Under interleaved mode with row major, each full row is stored on a single DRAM controller, and consecutive rows round-robin to the next DRAM controller.

Likewise, tiled tensors can also be interleaved. This time each 32x32 tile is stored on a single DRAM controller and consecutive tiles are round-robin'd to the next one.

Again, by default tensors are interleaved no matter the layout.
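To see what that means for the (48, 1024) tensor used in the examples, here is a back-of-the-envelope sketch in plain Python counting how many pages land on each of the 6 DRAM controllers. It assumes one page per row in row major and one page per tile in tiled layout, as described above. `pages_per_bank` is a made-up helper.

```python
import math


def pages_per_bank(num_pages: int, num_banks: int = 6):
    """Round-robin num_pages over the banks and count pages per bank."""
    counts = [0] * num_banks
    for page in range(num_pages):
        counts[page % num_banks] += 1
    return counts


rows, cols, tile = 48, 1024, 32

row_major_pages = rows  # one page per row
tiled_pages = math.ceil(rows / tile) * math.ceil(cols / tile)  # one page per tile

print(pages_per_bank(row_major_pages))  # [8, 8, 8, 8, 8, 8]
print(pages_per_bank(tiled_pages))      # [11, 11, 11, 11, 10, 10]
```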

    import ttnn
    import torch

    device = ttnn.open_device(device_id=0)
    tensor = torch.rand(48, 1024)
    t = ttnn.from_torch(tensor).to(device)  # Row major, interleaved
    t = ttnn.tilize_with_zero_padding(t)    # Tiled, interleaved

Sharded memory

Interleaved memory closely resembles how memory works on CPUs/GPUs and is very generic, so it is used in most operators. But it has a problem: it is not NoC friendly. That doesn't matter when performance is bound by compute instead of memory. When it is bound by memory, however, cores round-robinning across all the DRAM controllers are bound to cause contention. The following image shows a very simple case of NoC collision where 2 cores are trying to read from 2 different DRAM controllers, but their traffic partially shares a path. Since a hop in the NoC can only carry one piece of data per cycle, one of them is forced to wait for extra cycles.

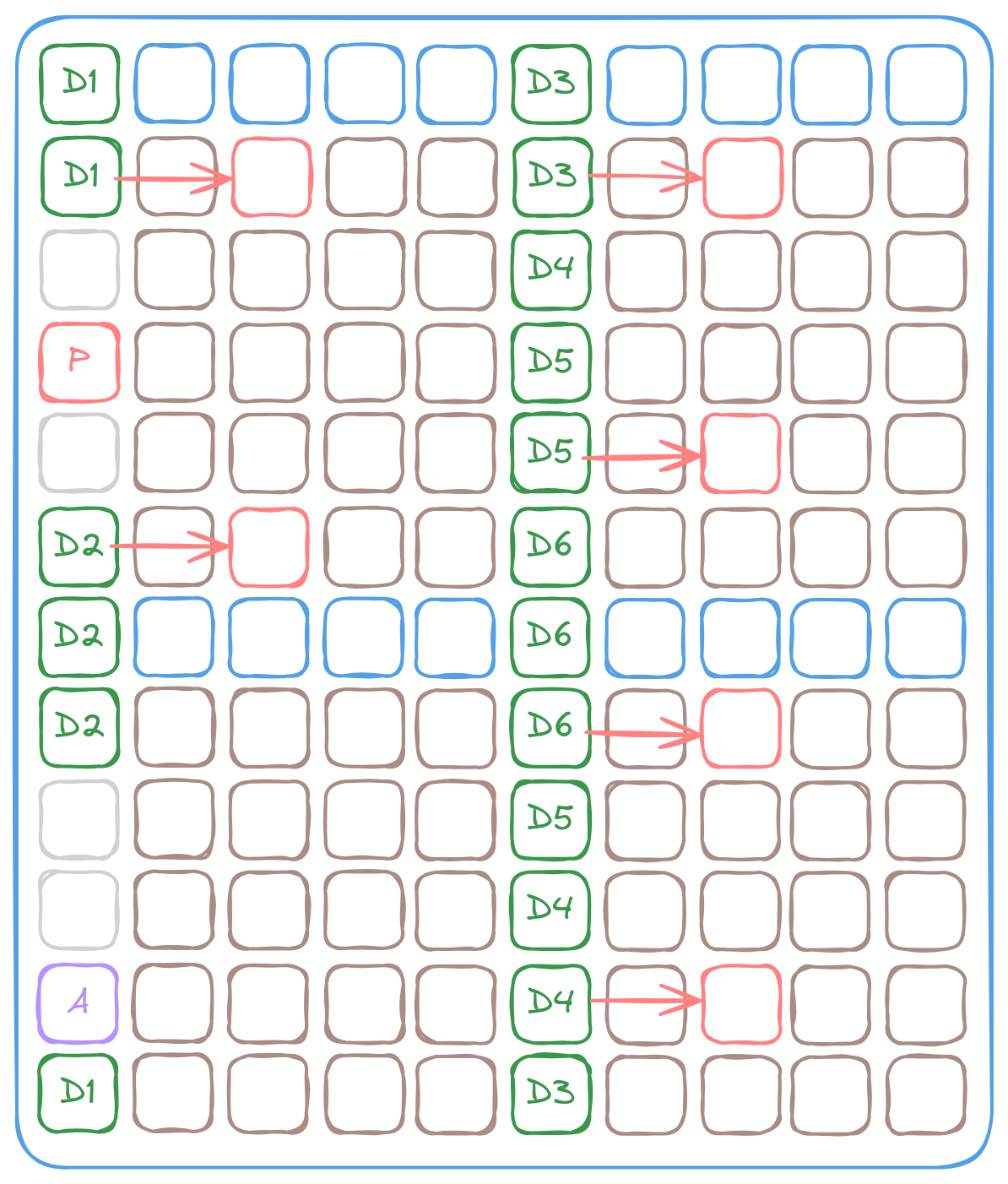

That's not great for performance. Luckily we can trade some flexibility to resolve this. Instead of round-robinning the data across all DRAM controllers, we can chunk the data along the x or y axis (width or height), or both, into N parts (N = number of DRAM controllers). This way each DRAM controller holds data that is spatially close together, which in deep learning workloads is likely to be accessed together. For operators that can take advantage of this, Tenstorrent provides a sharded memory mode.

The following image shows the same (48, 1024) tensor as before, but this time in width sharded mode.

This way cores near the sharded data can access it directly. Imagine an image sharded along the height dimension. Summing across the width of the image would then be very fast, as the data is next to the core and the NoC is congestion free.
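The chunking itself is simple. Below is a sketch in plain Python that splits one dimension into contiguous shards. It is only meant to show the idea. As far as I know, real TTNN shard specs are explicit about shard shapes and typically tile aligned, so treat the exact numbers as illustrative. `shard_ranges` is a made-up helper.

```python
def shard_ranges(extent: int, num_shards: int):
    """Split [0, extent) into contiguous chunks of ceil(extent / num_shards).

    May return fewer than num_shards chunks when extent is small.
    """
    size = -(-extent // num_shards)  # round up
    return [(start, min(start + size, extent)) for start in range(0, extent, size)]


# Width sharding the (48, 1024) tensor over 6 banks: split the 1024 columns.
print(shard_ranges(1024, 6))
# [(0, 171), (171, 342), (342, 513), (513, 684), (684, 855), (855, 1024)]

# Height sharding: split the 48 rows instead.
print(shard_ranges(48, 6))
# [(0, 8), (8, 16), (16, 24), (24, 32), (32, 40), (40, 48)]
```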

There is no convenience in the API for sharding memory on DRAM, so bear with me (most ops that use DRAM need interleaved anyway). The capability is there, though.

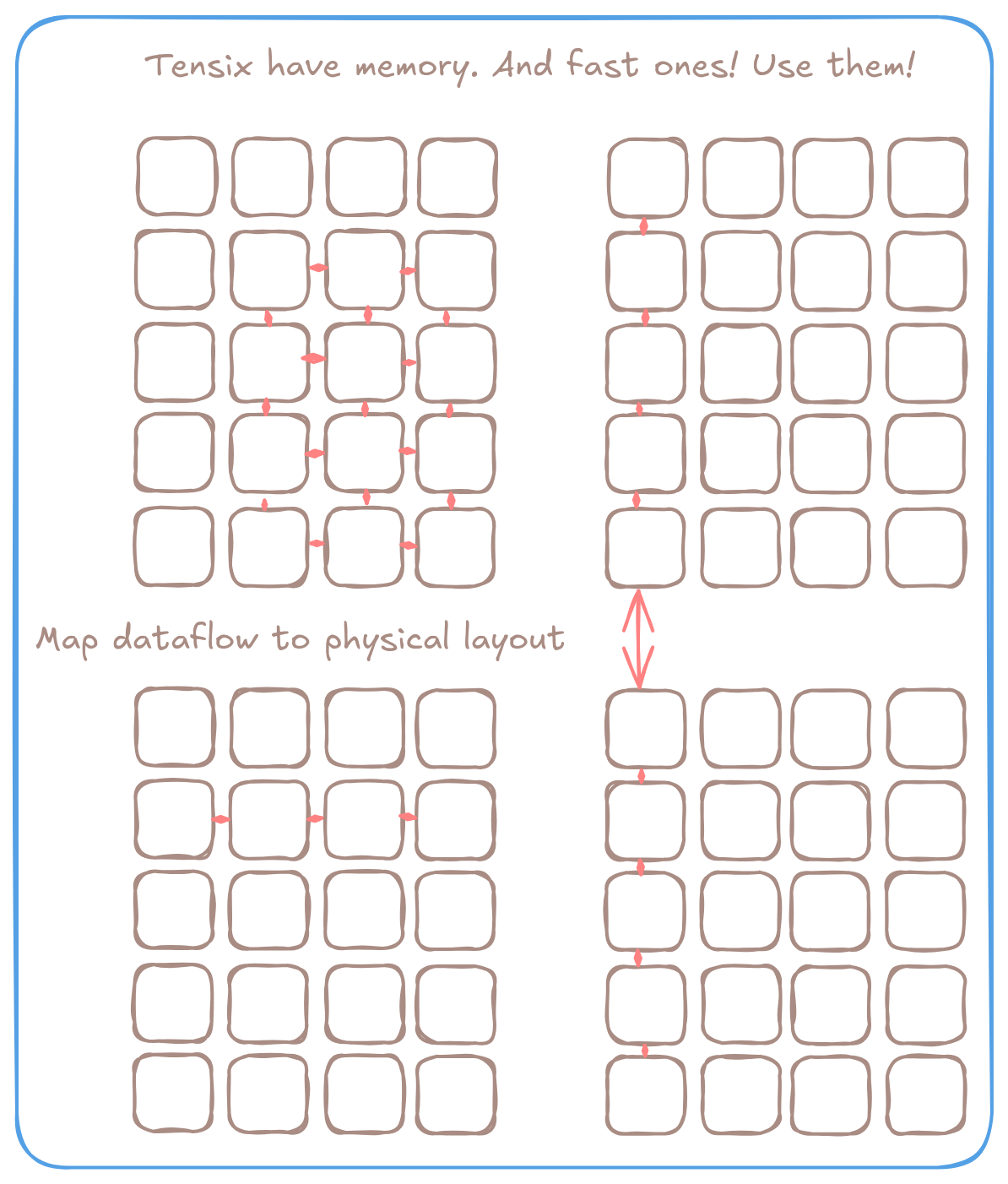

Storing data on L1

As alluded to before, each Tensix core has a small amount of SRAM (1.5MB on Wormhole) called L1, and the NoC does not differentiate between accessing DRAM and L1. So it's also possible to store data in L1, which is much faster than DRAM. This is useful for small tensors that are intermediate results feeding into the following operations, like the output of a convolutional layer that is about to be passed into another convolutional layer. This way the intermediate result doesn't have to be stored back to DRAM and read back in, eliminating part of the bandwidth bottleneck of deep learning workloads.

Even better, when sharded correctly, each core can hold only the data it needs and only requires access to nearby cores. This maximizes the bandwidth of the NoC and minimizes the latency of data access.

    import ttnn
    import torch

    device = ttnn.open_device(device_id=0)
    tensor = torch.rand(48, 1024)

    # Put tensor in L1 as interleaved
    t = ttnn.from_torch(tensor, memory_config=ttnn.L1_MEMORY_CONFIG, device=device)

    # Put tensor in L1 as block sharded
    t = ttnn.from_torch(tensor, memory_config=ttnn.L1_BLOCK_SHARDED_MEMORY_CONFIG, device=device)

Back to the initial code

Returning to the initial code, we can now understand what's going on. page_size is the size of the round-robin chunk. sram is a boolean that decides if the buffer is stored in L1 or DRAM. Since the concept of row-major and tiled layout is purely on the layer of TTNN, the buffer creation API doesn't need to know about it.

    std::shared_ptr<Buffer> MakeBuffer(IDevice* device, uint32_t size, uint32_t page_size, bool sram) {
        // Creating an interleaved buffer
        InterleavedBufferConfig config{
            .device = device,
            .size = size,
            // size of the round-robin chunk
            .page_size = page_size,
            // L1 or DRAM
            .buffer_type = (sram ? BufferType::L1 : BufferType::DRAM)};
        return CreateBuffer(config);
    }
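The numbers that the MakeBufferBFP16 helper from the start of the post feeds into MakeBuffer can be worked out by hand. Here is the same arithmetic mirrored in plain Python as a sketch. It assumes TILE_WIDTH and TILE_HEIGHT are both 32 (matching the 32x32 tiles above) and that a bfloat16 is 2 bytes. `bfp16_buffer_params` is a made-up name.

```python
TILE_WIDTH = TILE_HEIGHT = 32  # assumption: matches the 32x32 tiles in the text
BFLOAT16_BYTES = 2             # a bfloat16 is 16 bits


def bfp16_buffer_params(n_tiles: int, sram: bool):
    """Return (size, page_size) in bytes, mirroring MakeBufferBFP16."""
    tile_size = BFLOAT16_BYTES * TILE_WIDTH * TILE_HEIGHT  # 2048 bytes per tile
    # DRAM: one tile per page, so tiles round-robin across the banks.
    # L1: the whole buffer is a single page.
    page_tiles = n_tiles if sram else 1
    return tile_size * n_tiles, page_tiles * tile_size


print(bfp16_buffer_params(4, sram=False))  # (8192, 2048): 4 pages of one tile each
print(bfp16_buffer_params(4, sram=True))   # (8192, 8192): 1 page holding everything
```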

And why doesn't InterleavedAddrGenFast need a NoC coordinate to send DMA requests? Because it round-robins across all DRAM controllers, and their NoC coordinates are known at compile time. The kernel library can just grab them and fire off the requests.

    const InterleavedAddrGenFast<true> c = {
        .bank_base_address = c_addr,  // Don't need a NoC coordinate as it is round-robin!
        .page_size = tile_size_bytes,
        .data_format = DataFormat::Float16_b,
    };
    noc_async_write_tile(idx, c, l1_buffer_addr);
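Conceptually, the address generator only has to do something like the following. This is a sketch in plain Python of the idea, not the actual implementation in the kernel library. The bank-to-coordinate table is made up (on real hardware it comes from the architecture description at compile time), and `InterleavedAddrGenSketch` is a hypothetical name.

```python
from dataclasses import dataclass

# Hypothetical bank -> NoC (x, y) table. The real one is baked in at compile
# time for the target chip; these coordinates are invented for illustration.
BANK_TO_NOC_XY = [(0, 0), (0, 5), (5, 0), (5, 2), (5, 3), (5, 5)]


@dataclass(frozen=True)
class InterleavedAddrGenSketch:
    bank_base_address: int  # the single lock-step "pointer" from the host
    page_size: int

    def noc_addr(self, page_id: int):
        """Turn a page index into the (x, y, addr) tuple the NoC needs."""
        num_banks = len(BANK_TO_NOC_XY)
        bank = page_id % num_banks                       # round-robin bank
        offset = (page_id // num_banks) * self.page_size  # how far into that bank
        x, y = BANK_TO_NOC_XY[bank]
        return (x, y, self.bank_base_address + offset)


gen = InterleavedAddrGenSketch(bank_base_address=0x1000, page_size=2048)
print(gen.noc_addr(0))  # (0, 0, 4096): first page, first bank, at the base address
print(gen.noc_addr(7))  # (0, 5, 6144): wrapped once, so one page further into bank 1
```

The only runtime inputs are the base address and the page index. Everything else is a compile-time constant, which is why the kernel needs so little information.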

Implications

With a decent understanding of how memory works on Tenstorrent, we can now understand some baffling API and software choices that make no sense at first sight.

tensor.deallocate()

Obviously Python has GC and C++ has RAII. Why in the world would anyone need to manually deallocate a tensor? Simple! There is no guarantee when Python's GC runs, and L1 is small, so small that for optimal performance you need to manage it yourself and attempt to squeeze as many tensors into L1 as possible. So you need the ability to deallocate a tensor exactly when you need it to die.

    import ttnn

    l1_tensor = ttnn.Tensor(...)
    l1_tensor.deallocate()  # deallocates the tensor from L1

It is less useful in C++ obviously. But still an option.

Sending/receiving data from L1

This started out looking like magic. Looking at Metalium kernels, it feels like buffers need to have the same address on the current core and on remote cores. Which is... what? Yes! That is exactly right. And yes, lock-step allocation makes that guarantee. This is also why the following code, found in reader_bmm_tile_layout_in0_receiver_in1_sender.cpp, works.

    // Create a multicast (write to many cores)
    uint64_t in1_multicast_data_addr = get_noc_multicast_addr(
        in1_mcast_dest_noc_start_x,
        in1_mcast_dest_noc_start_y,
        in1_mcast_dest_noc_end_x,
        in1_mcast_dest_noc_end_y,
        in1_start_address);  // write to in1_start_address

    // num_dests must not include source, since we are NOT really doing a local copy!
    noc_async_write_multicast(
        in1_start_address, in1_multicast_data_addr, in1_block_size_bytes, in1_mcast_num_dests);
    //  ^^^^^^^^^^^^^^^^^
    // Read from local `in1_start_address`. They are the same!
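Here is a toy model of why one address is enough, as a sketch in plain Python. Each core's L1 is a bytearray, and a multicast copies a range from the sender's L1 to the same range on every destination. The tiny L1 size, the core grid and the `multicast_write` helper are all made up for illustration.

```python
L1_SIZE = 4096  # toy size; the real L1 is much larger

# A hypothetical 2x2 grid of cores, each with its own L1.
cores = {(x, y): bytearray(L1_SIZE) for x in (1, 2) for y in (1, 2)}


def multicast_write(cores, src_xy, local_addr, size, dests):
    """Copy [local_addr, local_addr + size) from the sender's L1 to the same
    address range in every destination core's L1."""
    payload = bytes(cores[src_xy][local_addr:local_addr + size])
    for xy in dests:
        cores[xy][local_addr:local_addr + size] = payload


sender = (1, 1)
receivers = [xy for xy in cores if xy != sender]  # destinations exclude the source

cores[sender][0x100:0x104] = b"tile"
multicast_write(cores, sender, 0x100, 4, receivers)
print(all(bytes(cores[xy][0x100:0x104]) == b"tile" for xy in cores))  # True
```

Because the buffer was allocated in lock step, 0x100 means "this buffer" on every core, so the kernel never has to ship per-core addresses around.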

Lack of multitenancy

L1 is a very limited resource and most applications will use up a significant amount of it. That makes multitenancy not viable, as processes would just OOM from L1 allocation and blow each other up. For the same reason, Tenstorrent put the allocator in userspace instead of kernel space. This makes allocation faster, as the application effectively has the entire L1 and DRAM as its memory pool. But it also means other processes don't know which parts of memory are in use. They'll clash, crash and burn.

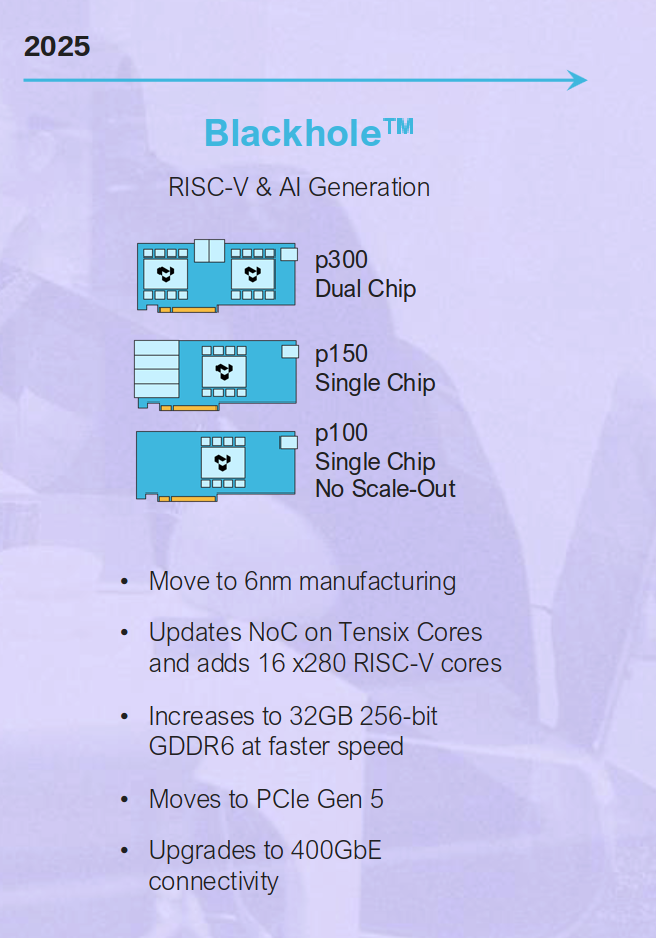

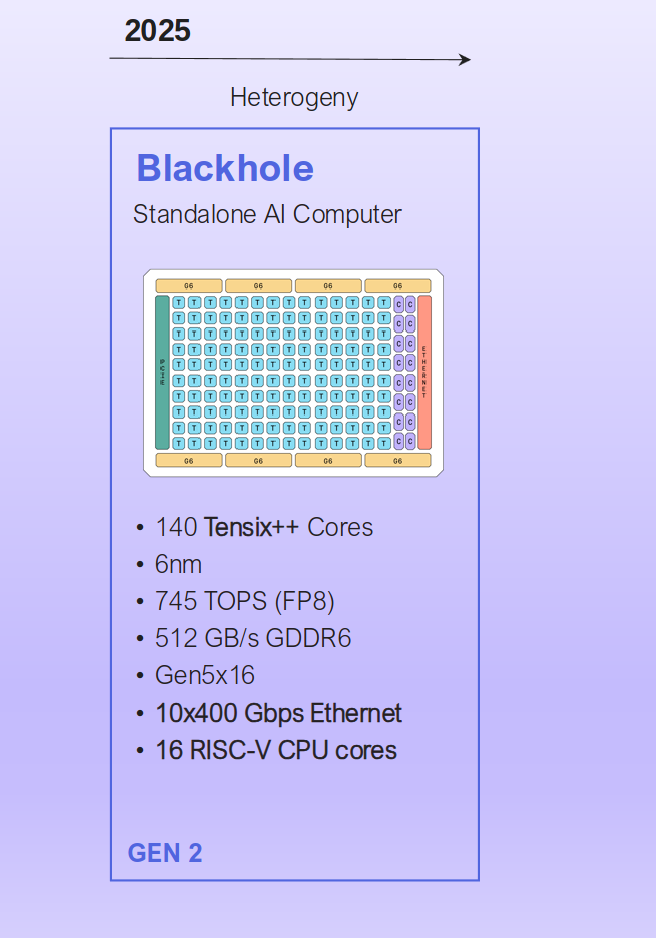

Maxing out memory tile address space in API

Public slides from Tenstorrent show that Blackhole has 32GB of memory per chip and 8 memory controllers. That divides nicely into 4GB per controller, which fills the entire 32-bit address space. Luckily, if you dig into the Blackhole code, the NoC already supports 64-bit addresses. But the NoC APIs still rely on 32-bit values for addresses, which is likely going to be a pain to fix.
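The arithmetic is quick to check. A sketch in plain Python, assuming the "GB" on the slides means 2**30 bytes. `bytes_per_controller` is a made-up helper.

```python
GIB = 1 << 30  # assumption: "GB" on the slides means 2**30 bytes


def bytes_per_controller(total_gib: int, num_controllers: int) -> int:
    return total_gib * GIB // num_controllers


per_controller = bytes_per_controller(32, 8)  # Blackhole numbers from the text
highest_addr = per_controller - 1

print(hex(highest_addr))            # 0xffffffff
print(highest_addr.bit_length())    # 32: every bit of a uint32_t is used
print(per_controller.bit_length())  # 33: one more byte would not fit
```

For comparison, Wormhole's 2GB per controller tops out at 31 bits, so a uint32_t address still had headroom there.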

See the following images

Thanks to Corsix, Evolution101, claez_ake_kassel_fran_danderyd, Icarus and others for proofreading and sharing feedback.

Hopefully this post has helped you understand how memory works on Tenstorrent. It's involved and complicated, especially since the hardware itself is involved in the memory format. But it's also very powerful and allows for very high performance. Gosh, that's a lot of diagrams. I hope they help you understand the concepts better. See ya!

Martin Chang

Systems software, HPC, GPGPU and AI. I mostly write stupid C++ code. Sometimes does AI research. Chronic VRChat addict

I run TLGS, a major search engine on Gemini. Used by Buran by default.

- martin \at clehaxze.tw

- Matrix: @clehaxze:matrix.clehaxze.tw

- Jami: a72b62ac04a958ca57739247aa1ed4fe0d11d2df