Benchmarking RK3588 NPU matrix multiplication performance EP3

Today is the last day of CNY and, being honest, I have nothing to do. Out of nowhere, I decided to look deeper into the RK3588 NPU's performance characteristics, to figure out what it actually needs to be performant. For example, how batch size and native/normal layout affect performance. I haven't done any of this before, as llama.cpp only supports one configuration: the normal layout with a batch size of 1. But knowing more could unlock more potential, right?

You can find the previous posts on the topic here:

The source code for the benchmarking tool is available here:

Some concepts for the Rockchip matrix multiplication API

The matrix multiplication API asks you to specify quite a few things. The list includes:

- The shape of the two input matrices (M, N, K)

- The type of the input matrices (float16, int8, int4)

- The layout of the input matrix A and output matrix C (normal/native)

- The layout of the input matrix B (normal/native)

Matrix shapes and types are quite self-explanatory. Matrix layout needs some explanation. The normal layout is the row-major layout that we are all used to. The native layout is specific to the NPU and basically interleaves the rows of the matrix. Neither the header file nor the documentation says how the layout affects performance. As I found out, the impact is major.
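To make "interleaves the rows" a bit more concrete, here is a minimal sketch of one possible interleaved layout. Everything about it is an assumption for illustration: the [K/tile][M][tile] arrangement, the tile width, and the name to_tiled are hypothetical and not taken from the Rockchip header. The real native layout depends on the data type, so check rknn_matmul_api.h before relying on any of this.

```python
def to_tiled(a, m, k, tile):
    """Reorder a row-major M x K matrix (flat list) into a hypothetical
    [K/tile][M][tile] interleaved layout. Illustrative only; this is not
    guaranteed to match the real RKNN native layout."""
    if k % tile != 0:
        raise ValueError("K must be a multiple of the tile width")
    out = []
    for kb in range(k // tile):      # walk over blocks of `tile` columns
        for row in range(m):         # emit that block for every row in turn
            start = row * k + kb * tile
            out.extend(a[start:start + tile])
    return out


# A 2 x 4 matrix in normal (row-major) layout: [[0, 1, 2, 3], [4, 5, 6, 7]]
normal = list(range(8))
tiled = to_tiled(normal, m=2, k=4, tile=2)
print(tiled)  # [0, 1, 4, 5, 2, 3, 6, 7]
```

The same elements are stored either way. Only the order in memory changes, so that short chunks from neighbouring rows end up next to each other.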

Results

The benchmarking tool generates an 11MB CSV that I ran some analysis on. The results are quite informative. In case you are wondering, the analysis script is written in C++ using the ROOT data analysis framework.

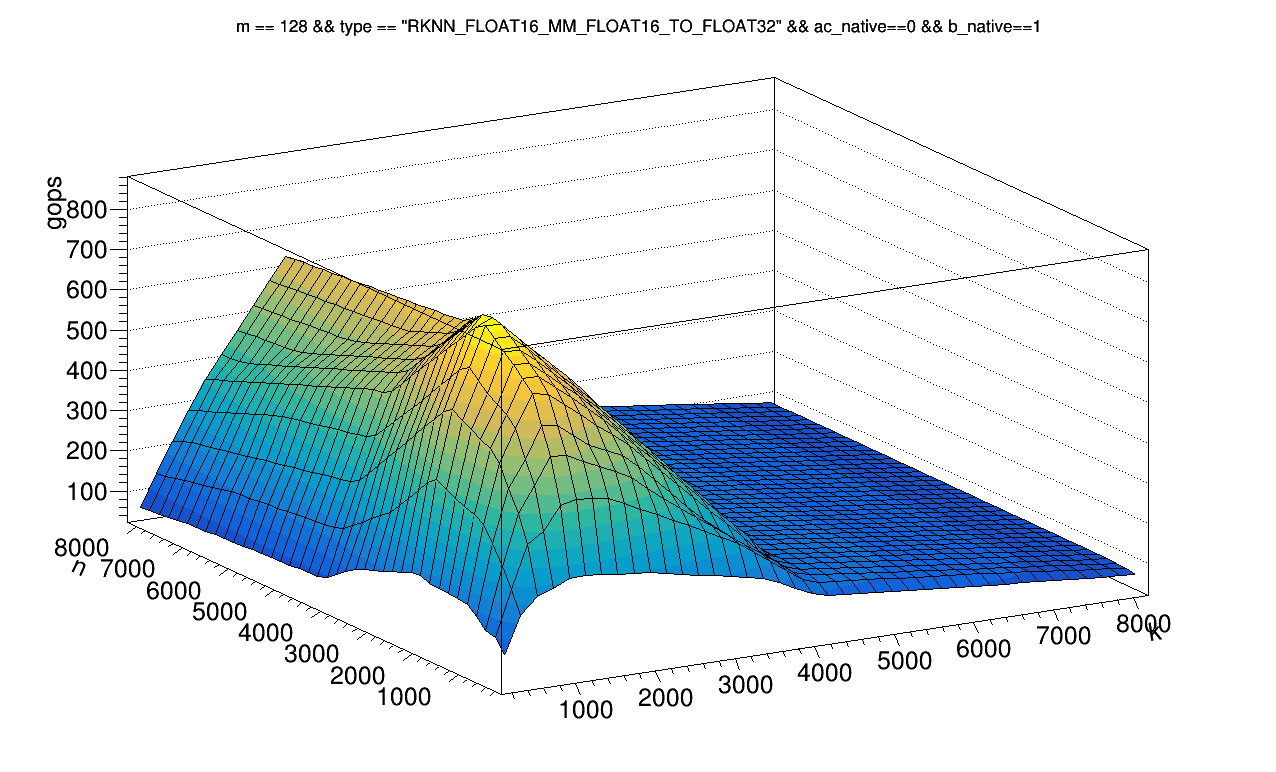

Best case performance

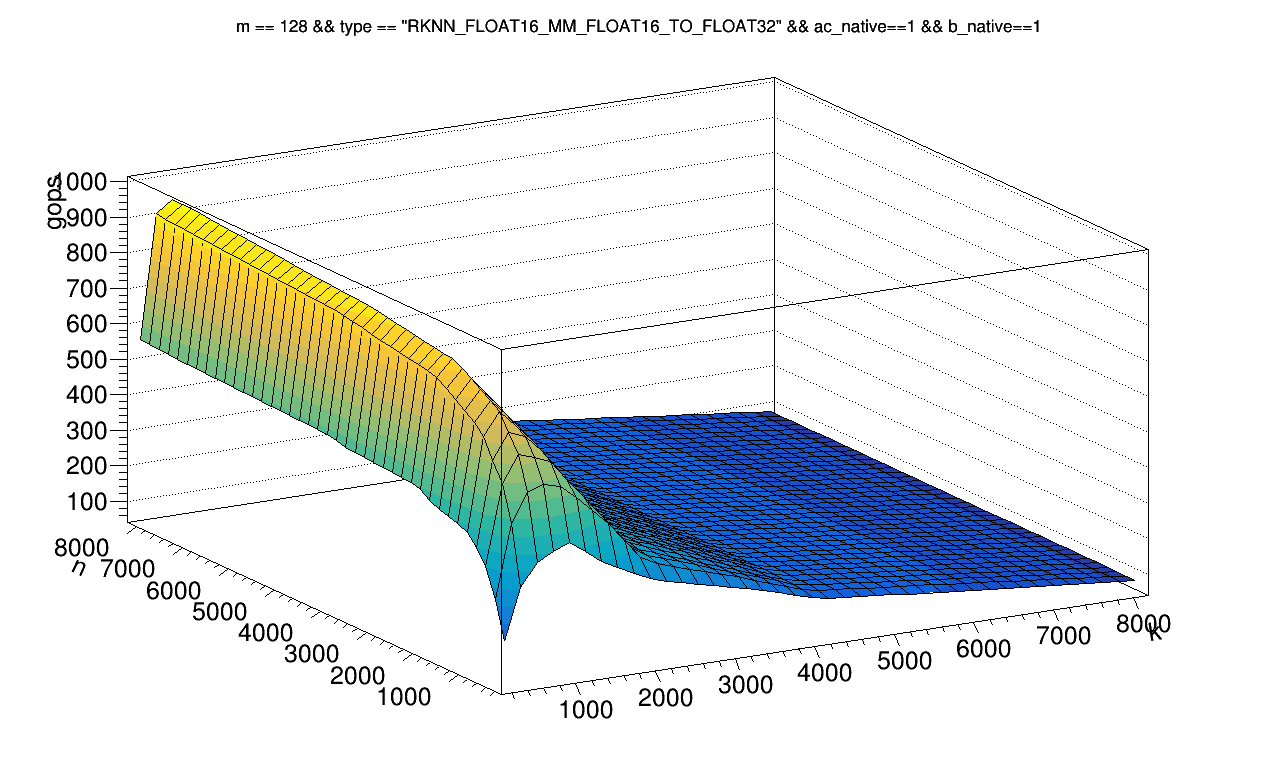

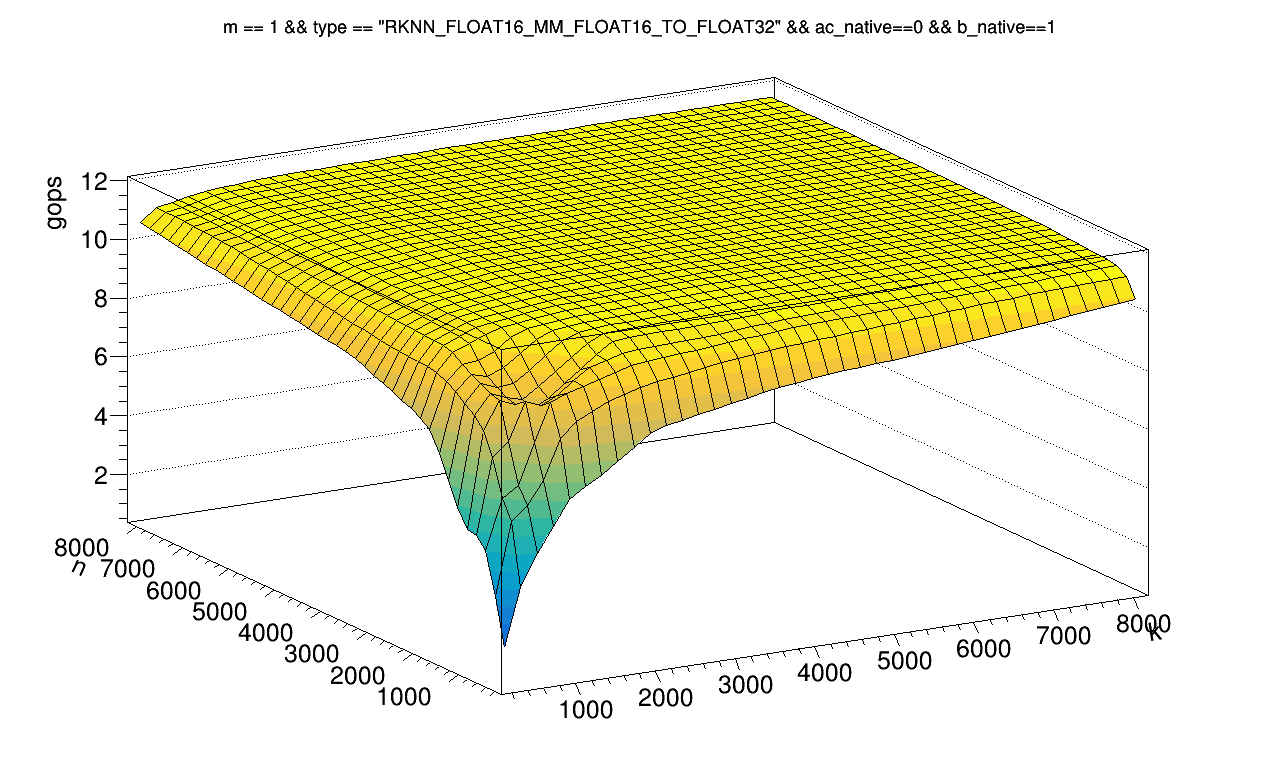

The first question that came to mind is how fast exactly the NPU is. I got bitten by the NPU's performance: as of now I can't get llama.cpp to run meaningfully faster on the NPU than on the CPU. And I've seen reports saying the NPU tops out at 10 GFLOPS FP16 in practice. How fast is it really? Turns out the NPU can reach 900 GFLOPS FP16 doing a 128x1024x8192 matrix multiplication. That's close to the theoretical peak of 1 TFLOPS. But the performance quickly drops as K gets larger and larger.
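For a sense of scale, here is the arithmetic behind those numbers as a small sketch. It assumes the usual convention of counting one multiply and one add per inner-product term, so 2 x M x K x N operations per matrix multiplication. I am assuming, not confirming, that the benchmark tool counts the same way. The helper names are made up for this example.

```python
def matmul_ops(m, k, n):
    """Operation count of an (M x K) @ (K x N) product, counting
    one multiply and one add per inner-product term."""
    return 2 * m * k * n


def seconds_at(m, k, n, ops_per_second):
    """Time one multiplication would take at a given sustained rate."""
    return matmul_ops(m, k, n) / ops_per_second


# The three sizes from the text. Which one is M, K or N does not change the count.
ops = matmul_ops(128, 1024, 8192)
print(ops)                                              # 2147483648
print(seconds_at(128, 1024, 8192, 900e9) * 1e3, "ms")   # at 900 GFLOPS
print(seconds_at(128, 1024, 8192, 10e9) * 1e3, "ms")    # at 10 GFLOPS
```

At 900 GFLOPS one such multiplication takes roughly 2.4 ms. At the 10 GFLOPS figure from the reports it would take roughly 215 ms.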

I suspect the NPU can only hold so much data in its SRAM, and the performance drop-off is due to spilling to DDR.

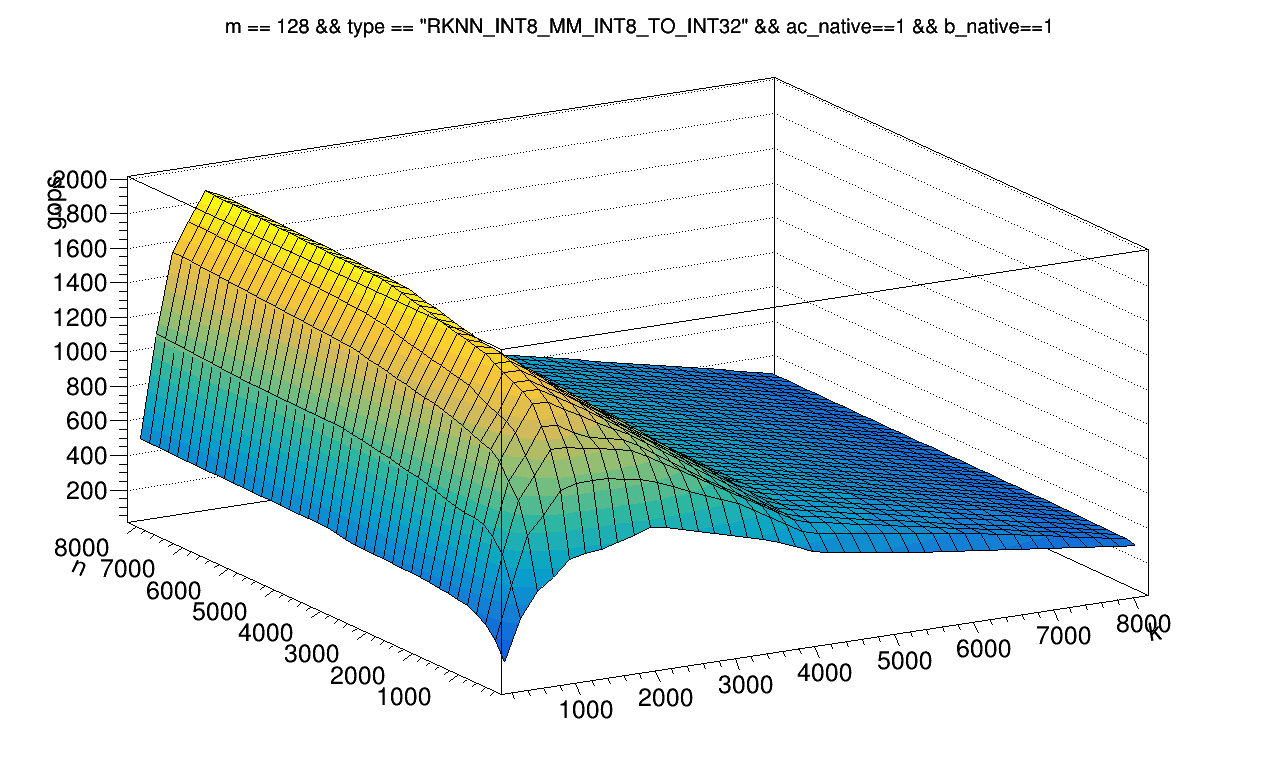

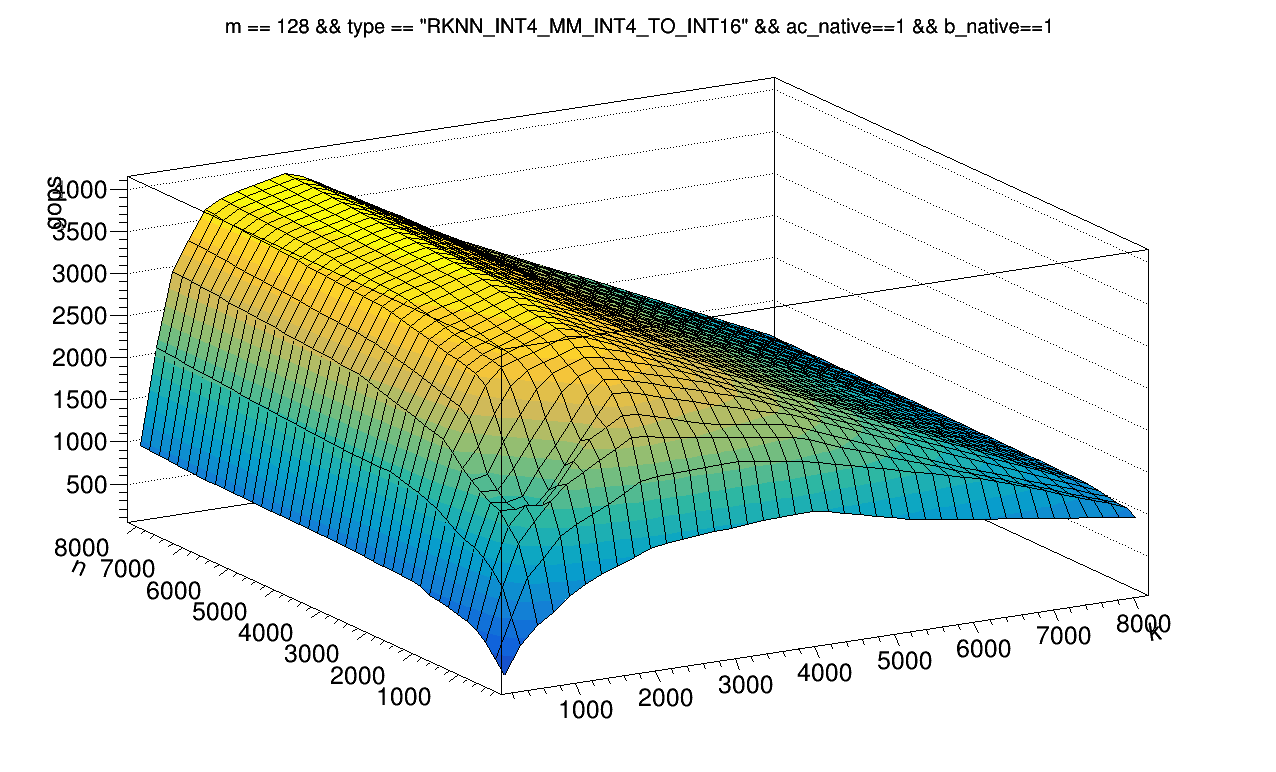

INT8 performance is as expected: 2x that of FP16, this time peaking at ~1.8 TOPS around 128x1024x8192. The performance drop-off along the K axis is also not as severe. INT4 is another 2x over INT8, peaking at almost 4 TOPS.

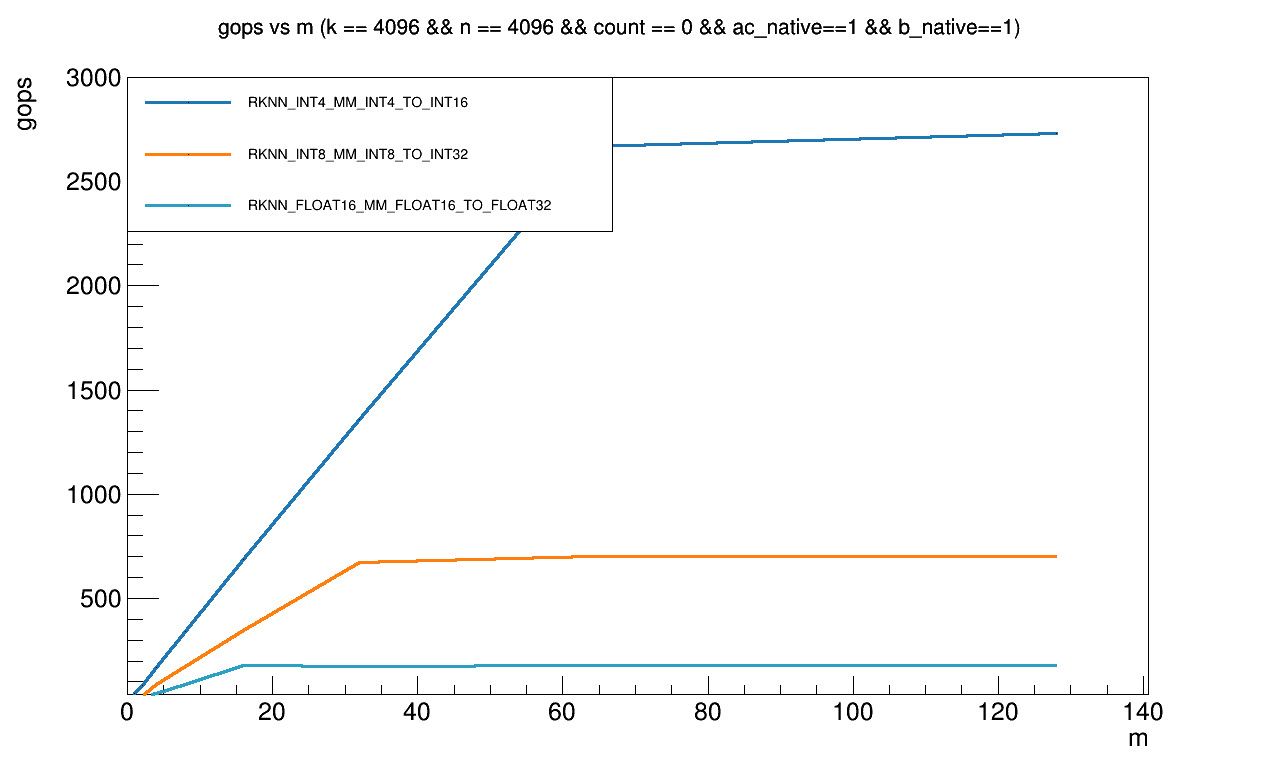

Suffering from low M and memory bound

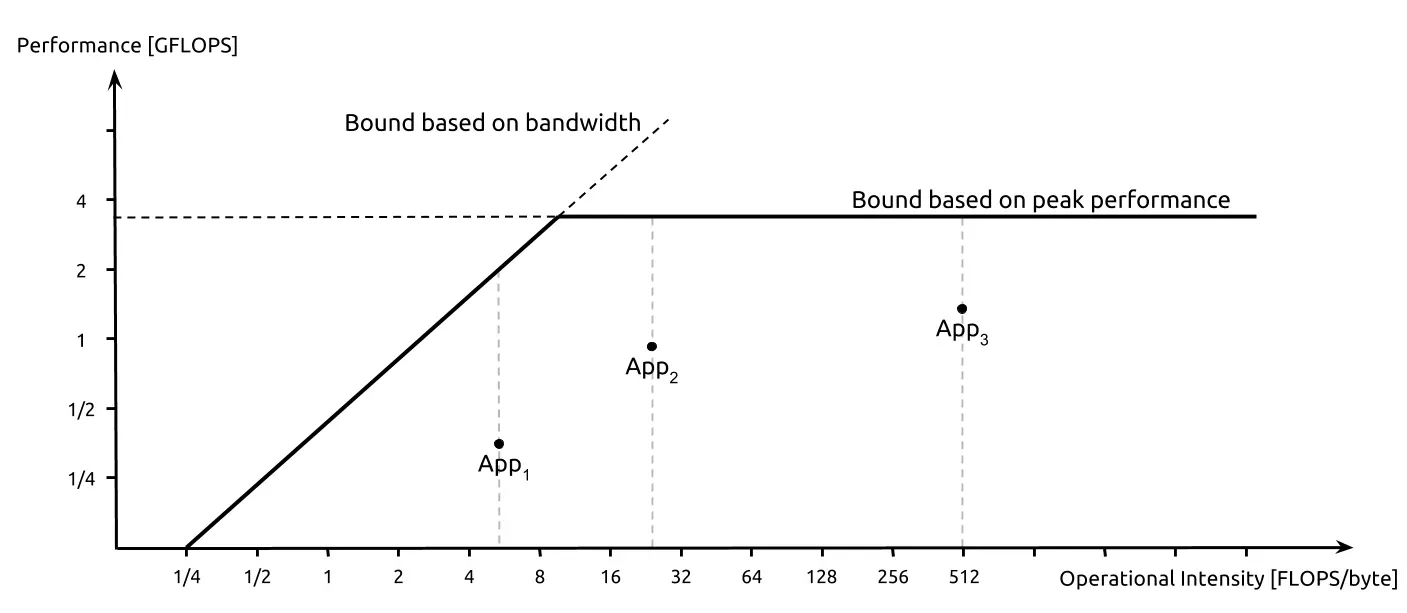

In the majority of cases, llama.cpp will be doing GEMV instead of GEMM, where the M dimension is 1. I suspected the NPU was going to suffer from this, and the results are as expected. The performance drops off significantly when M is low. The M == 1 data point isn't even visible on any of the 3 lines.

Interestingly, the performance graph looks exactly like the textbook compute bound vs memory bound graph. The performance first increases linearly, then flattens out. However, the ratios between the lines don't match what a memory bound workload would look like. I suspect there are inefficiencies in the NPU's memory access pattern, or the compute units are not fully utilized.
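For reference, this is what an idealized memory bound vs compute bound (roofline) model would predict. It is a sketch under loud assumptions: every matrix is moved to or from DRAM exactly once, the 10 GB/s bandwidth is a placeholder and not a measured RK3588 number, and the output element sizes (4 bytes) are my guess at what the SDK produces. The peak values are the ones measured above.

```python
def intensity(m, k, n, in_bytes, out_bytes):
    """Operations per byte of memory traffic, assuming A, B and C
    each cross the memory bus exactly once (an idealization)."""
    ops = 2 * m * k * n
    traffic = (m * k + k * n) * in_bytes + m * n * out_bytes
    return ops / traffic


def roofline(m, k, n, in_bytes, out_bytes, peak_ops, bandwidth):
    """Attainable ops/s: limited by compute peak or by memory bandwidth."""
    return min(peak_ops, bandwidth * intensity(m, k, n, in_bytes, out_bytes))


BANDWIDTH = 10e9  # bytes/s, placeholder value for illustration only
K = N = 4096

for m in (1, 8, 32, 128):
    fp16 = roofline(m, K, N, 2, 4, 900e9, BANDWIDTH)
    int8 = roofline(m, K, N, 1, 4, 1800e9, BANDWIDTH)
    print(m, round(fp16 / 1e9, 1), round(int8 / 1e9, 1))

# In the memory bound region (M == 1) traffic is dominated by matrix B,
# so halving the element size should roughly double the throughput.
ratio = intensity(1, K, N, 1, 4) / intensity(1, K, N, 2, 4)
print(round(ratio, 2))
```

Under this model the low-M points scale linearly with M and the INT8 line sits at about 2x the FP16 line until each hits its own compute ceiling. Deviations from that pattern in the measured data are what point at something other than pure bandwidth limits.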

However, the performance does not change at all whether matrix A is native or not when M == 1. I suspect this is a special case in their SDK, since for this specific case the actual data remains the same no matter the layout.
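The claim that the data is identical for M == 1 can be checked with a small index calculation. This sketch assumes the native layout has the shape [K/tile][M][tile], which is my reading and not something confirmed against the SDK. The tile width of 8 and the function names are likewise hypothetical.

```python
def tiled_index(row, col, m, tile):
    """Flat offset of element (row, col) in a hypothetical
    [K/tile][M][tile] interleaved layout."""
    return (col // tile) * m * tile + row * tile + (col % tile)


def normal_index(row, col, k):
    """Flat offset of element (row, col) in row-major layout."""
    return row * k + col


k, tile = 32, 8

# With a single row, every element lands at the same offset in both layouts.
same_for_m1 = all(
    tiled_index(0, c, 1, tile) == normal_index(0, c, k) for c in range(k)
)

# With two rows, the offsets diverge as soon as we leave the first tile.
same_for_m2 = all(
    tiled_index(r, c, 2, tile) == normal_index(r, c, k)
    for r in range(2)
    for c in range(k)
)

print(same_for_m1, same_for_m2)  # True False
```

If the real layout has this general shape, a vector needs no reordering at all, which would explain why the layout flag makes no difference for GEMV.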

Digging further, I found that the SDK reorders matrix A during rknn_matmul_set_io_mem if the layout is normal. And it also spends time converting matrix C from native to normal layout. Applications will want to change matrix A often, so the performance impact will become even more significant.

Native matrix A, C is critical to performance

The NPU's performance is significantly impacted by the layout of the input matrix A (and by extension, the output matrix C). As seen above, the NPU can reach 900 GFLOPS FP16 with native layout. But the peak performance drops to ~750 GFLOPS with normal layout, and the location of the peak shifts.

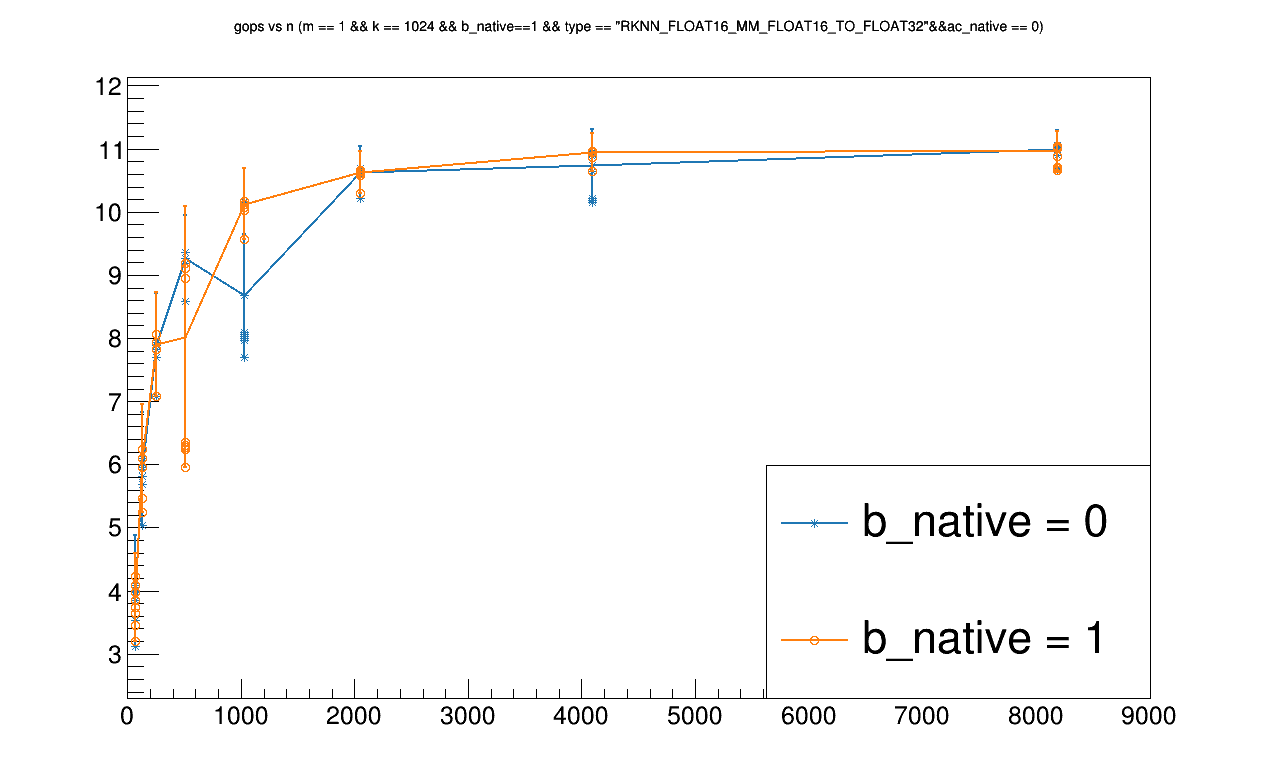

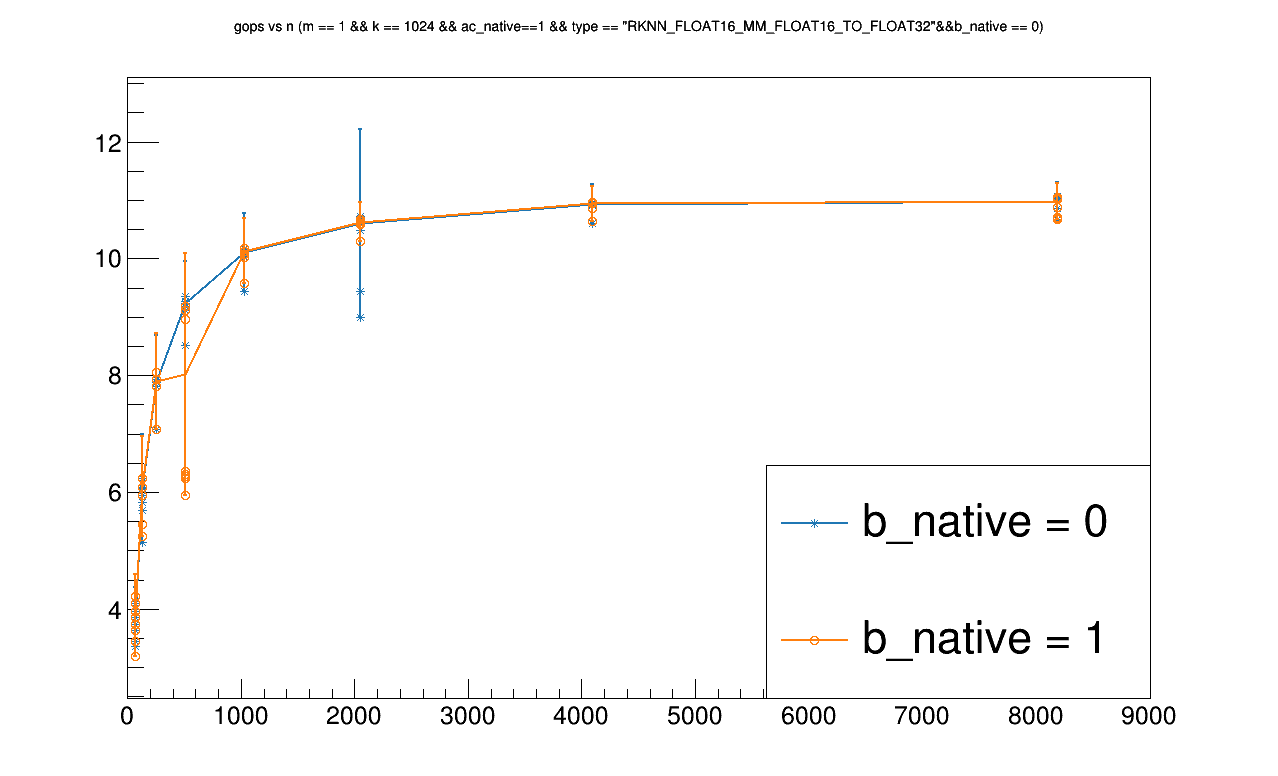

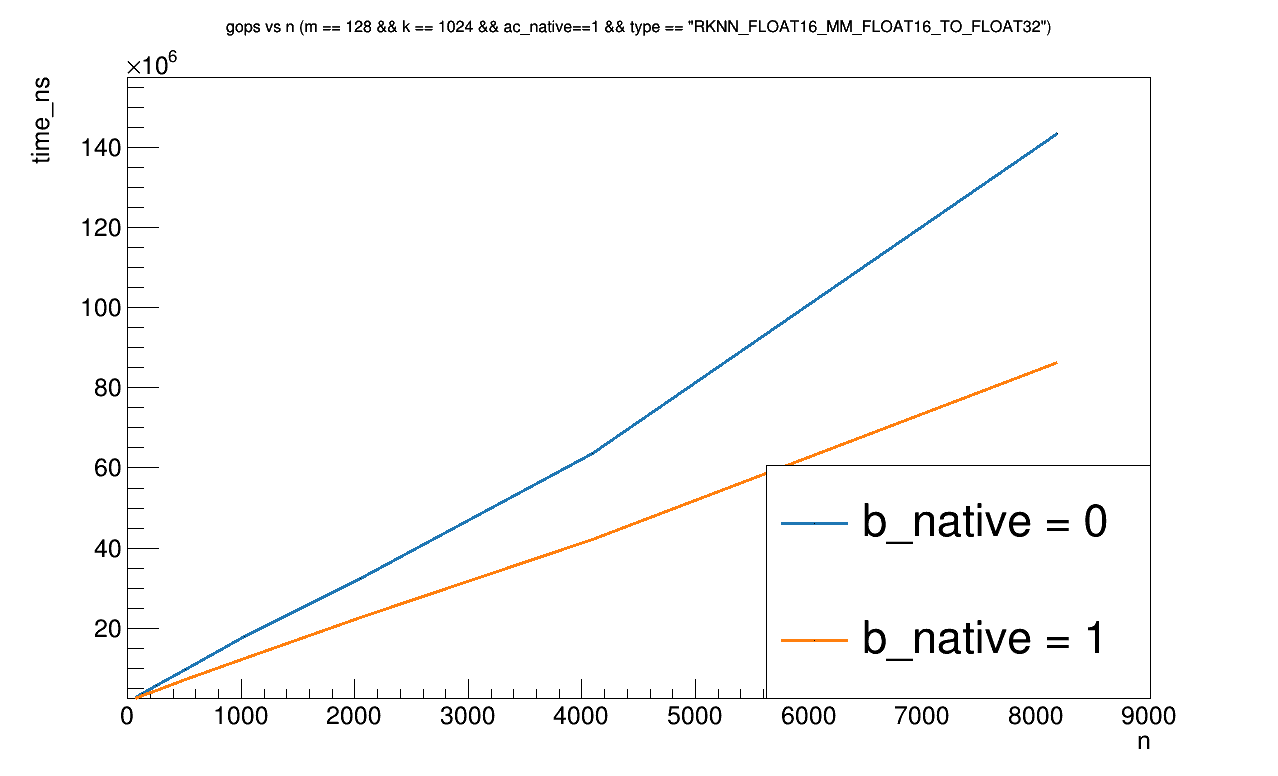

Matrix B being normal/native does not affect matrix multiplication, but impacts initialization time

Finally, the layout of matrix B does not impact the performance of the matrix multiplication itself, but it does impact the initialization time. The reason is the same as for matrix A in normal layout: the SDK reorders matrix B during rknn_matmul_set_io_mem. However, most applications will happily use the same matrix B for many matrix multiplications, so only the initialization time is impacted.

Conclusion

I don't know what would be helpful advice based on this data. Hardware vendors should optimize GEMV, I guess. Pushing GEMV to also run at 900 GFLOPS would be a huge win. But there's not much I can do with current hardware. There are generally a few things one can do, but none of them apply to LLaMA or GGML without major effort.

- Develop network architectures that use 1024x8192 matrix multiplications for every batch.

- Optimize and work around the input reordering. This is done by useful-transformers now. But it's a major pain to do.

I'll see what I come up with. But I don't like the picture I'm seeing. Hopefully Rockchip can fix this with some driver magic.

Martin Chang

Systems software, HPC, GPGPU and AI. I mostly write stupid C++ code. Sometimes does AI research. Chronic VRChat addict

I run TLGS, a major search engine on Gemini. Used by Buran by default.

- martin \at clehaxze.tw

- Matrix: @clehaxze:matrix.clehaxze.tw

- Jami: a72b62ac04a958ca57739247aa1ed4fe0d11d2df